Why Clawdbot Changed Its Name to Moltbot

Clawdbot was forced to rebrand as Moltbot after Anthropic's trademark notice, and crypto scammers exploited its viral success. Learn what happened.

The open-source AI community witnessed a dramatic turn of events this week when Clawdbot, one of the fastest-growing GitHub projects with over 70,000 stars, was forced to rebrand as Moltbot. What started as an innovative personal AI assistant quickly became caught in a perfect storm involving corporate legal departments and cryptocurrency opportunists.

What Is Clawdbot (Now Moltbot)?

Clawdbot was a self-hosted AI assistant created by Peter Steinberger, the Austrian developer who founded PSPDFKit. The project distinguished itself by offering users unprecedented control over their AI experience:

- Full System Integration: Shell access, browser control, and file management capabilities

- Persistent Memory: Maintains context across conversations

- Multi-Platform Support: Works with WhatsApp, Telegram, Slack, iMessage, Signal, and Discord

- 50+ Integrations: Connects with various productivity tools and services

- Local Deployment: Runs entirely on personal hardware, ensuring privacy

The project's rapid ascent was remarkable. It gained 9,000 stars within 24 hours and crossed 70,000 stars in just days, making it one of the fastest-growing open-source projects in GitHub history. Tech luminaries including Andrej Karpathy praised the innovation, and MacStories called it "the future of personal AI assistants."

The Trademark Battle: Why the Name Had to Change

Anthropic's Legal Notice

The name "Clawdbot" began as a playful homage to Claude, Anthropic's flagship AI model. Steinberger turned "Claude" into "Clawd," and the claw pun carried over into the logo, which features a crustacean mascot.

However, what seemed like harmless wordplay to the developer represented a potential trademark issue for Anthropic. Anthropic reached out about name and mascot similarities, putting the developer in an impossible position.

For independent developers, fighting a legal battle against a well-funded AI company is financially devastating:

- Legal Fees: Attorney costs can drain savings rapidly

- Time Drain: Development stops while dealing with legal proceedings

- Uncertainty: No guarantee of winning, even with a valid case

In an episode of the "Insecure Agents" podcast published three days before the renaming, Steinberger said he believed the "Clawdbot" name was legally viable, stating "I looked it up. There's no trademark for this." Yet the legal pressure left him with no realistic choice but to comply.

The Cryptocurrency Complication

How Meme Coin Scammers Hijacked the Project

The rename wasn't solely about trademark concerns. The situation was complicated by cryptocurrency opportunists who saw the project's viral success as a chance to exploit unsuspecting investors.

Within hours of the rename chaos, fake $CLAWD tokens appeared on Solana, with the token hitting a $16 million market cap at peak as speculators rushed in. These meme coins had nothing to do with the actual Clawdbot project but leveraged the name recognition for quick profits.

The developer was forced to issue public statements. Steinberger posted: "To all crypto folks: Please stop pinging me, stop harassing me. I will never do a coin. Any project that lists me as coin owner is a SCAM."

The consequences were predictable and devastating. The token immediately collapsed to near-zero after the statement, with late buyers getting rugged while scammers walked away with millions.

Account Hijacking Attempts

The chaos extended beyond cryptocurrency scams. Steinberger's personal GitHub account was briefly taken over by "crypto scammers," though Moltbot's official account remained unaffected. This incident highlighted how vulnerable independent developers are when their projects gain sudden visibility.

What the Rebrand Means for Users

Technical Continuity

Despite the dramatic name change, the technical functionality remains identical. Users who installed Clawdbot can continue using their existing setups, and the clawdbot command still works as a compatibility layer.

Brand Identity Challenges

The new name "Moltbot" carries symbolic weight. "Molt fits perfectly - it's what lobsters do to grow," the project's statement explained. While this represents resilience and evolution, the rebrand creates practical challenges:

- SEO Impact: Years of accumulated search rankings under "Clawdbot" must be rebuilt

- Brand Recognition: The original name had significant momentum in tech communities

- Documentation Confusion: Older tutorials and articles still reference the old name

- Trust Issues: Some users may wonder if the project is stable long-term

Lessons for the Open-Source Community

The Reality of Building on Corporate Platforms

This incident exposes harsh truths about the modern development landscape:

- Name Defensively: Avoid any similarity to established brands, even as homage

- Expect the Unexpected: Viral success can attract unwanted attention from multiple directions

- Legal Vulnerability: Small developers have virtually no leverage against corporate legal teams

- Crypto Parasites: Any successful project risks becoming a vehicle for pump-and-dump schemes

The Fragility of Open-Source Innovation

The saga highlights the fragility of the current AI ecosystem, where builders work on corporate platforms with ambiguous trademark policies, and one legal notice can force a rebrand that exposes developers to account hijacking, scams, and chaos.

The irony isn't lost on the community. These independent developers are often Anthropic's most enthusiastic evangelists, building experimental tools that drive API usage. Some questioned the wisdom of this approach, with one engineer asking Anthropic CEO Dario Amodei directly: "Do you hate success?"

Security Concerns Emerge Amid the Chaos

Authentication Vulnerabilities Discovered

While the naming drama unfolded, security researchers identified serious vulnerabilities in the platform. Security firm SlowMist announced that an authentication bypass in the gateway system made several hundred API keys and private conversation histories publicly accessible.

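The technical issues stem from the system's architecture. The gateway automatically approves localhost connections without authentication. That becomes a problem when the software runs behind a reverse proxy on the same server: the proxy relays every external request from the loopback address, so the gateway sees remote traffic as local and trusts it.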
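The failure mode is easier to see in a small sketch. The Python below is not Moltbot's code; the function names and the header list are assumptions made for illustration. It contrasts a check that trusts any loopback peer with a more cautious one that also refuses requests carrying the forwarding headers a reverse proxy typically adds.

```python
from ipaddress import ip_address

# Headers a reverse proxy commonly adds when relaying a request (illustrative list).
FORWARDING_HEADERS = {"x-forwarded-for", "x-real-ip", "forwarded"}


def trusts_peer_naively(peer_addr: str) -> bool:
    """Hypothetical naive rule: any loopback TCP peer is treated as local."""
    return ip_address(peer_addr).is_loopback


def trusts_peer_cautiously(peer_addr: str, headers: dict[str, str]) -> bool:
    """Hypothetical stricter rule: loopback peer and no sign of proxying."""
    was_proxied = any(name.lower() in FORWARDING_HEADERS for name in headers)
    return ip_address(peer_addr).is_loopback and not was_proxied


# A remote client (203.0.113.7) reaching the gateway through a same-host proxy:
# the TCP peer the gateway sees is the proxy itself, i.e. 127.0.0.1.
proxied_headers = {"X-Forwarded-For": "203.0.113.7"}
naive_result = trusts_peer_naively("127.0.0.1")
cautious_result = trusts_peer_cautiously("127.0.0.1", proxied_headers)
```

Header inspection is only a heuristic, since a proxy can be configured not to add these headers. Requiring credentials on every connection, local or not, is the more robust design.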

The Power-Risk Tradeoff

The issue reveals a fundamental tension in the architecture of autonomous AI systems: to be useful, such agents must read messages, store credentials, execute commands, and maintain persistent state, and each of those requirements cuts against established security practices such as least privilege and isolation.

This explains why Steinberger describes running Moltbot on a primary machine as "spicy." Most experienced users deploy it in isolated environments or dedicated hardware like Mac Minis to contain potential security breaches.

The Bigger Picture: Corporate Control vs. Open Innovation

Comparing Approaches to Ecosystem Development

The handling of this situation raises questions about how AI companies nurture their developer ecosystems. The comparison is stark: Google didn't sue Android developers, and OpenAI isn't suing LangChain builders who leverage their platforms.

There's an established playbook for fostering innovation:

- Encourage Experimentation: Welcome creative uses of your technology

- Clear Guidelines: Publish explicit policies about naming and branding

- Community Engagement: Build relationships with prominent developers

- Proportional Response: Reserve legal action for actual harm or confusion

What This Means for AI Development

The incident sends a chilling message to independent developers: viral success can become a liability rather than an achievement. The unspoken rules are now clearer:

- Don't Reference Major Brands: Even tangentially or as tribute

- Prepare for Exploitation: Success attracts parasites seeking quick profits

- Guard Your Identity: Personal accounts become targets during viral moments

- Maintain Low Profiles: Sometimes obscurity is safer than recognition

Moving Forward: The Path Ahead for Moltbot

Despite the turbulence, Moltbot remains a remarkable achievement in AI agent technology. The project continues active development, with the GitHub repository buzzing with contributions—including "Vibe Coding" pull requests largely written by AI itself.

The platform still offers compelling advantages:

- Privacy First: Complete data control through self-hosting

- Unmatched Flexibility: Integration with virtually any communication platform

- Active Community: Thousands of developers contributing improvements

- Real Autonomy: Genuine task execution, not just conversational responses

For users willing to navigate the technical requirements and security considerations, Moltbot represents a glimpse into the future of personal AI—agents that truly operate as digital employees rather than mere chatbots.

Supercharge Your Moltbot with BrowserAct Integration

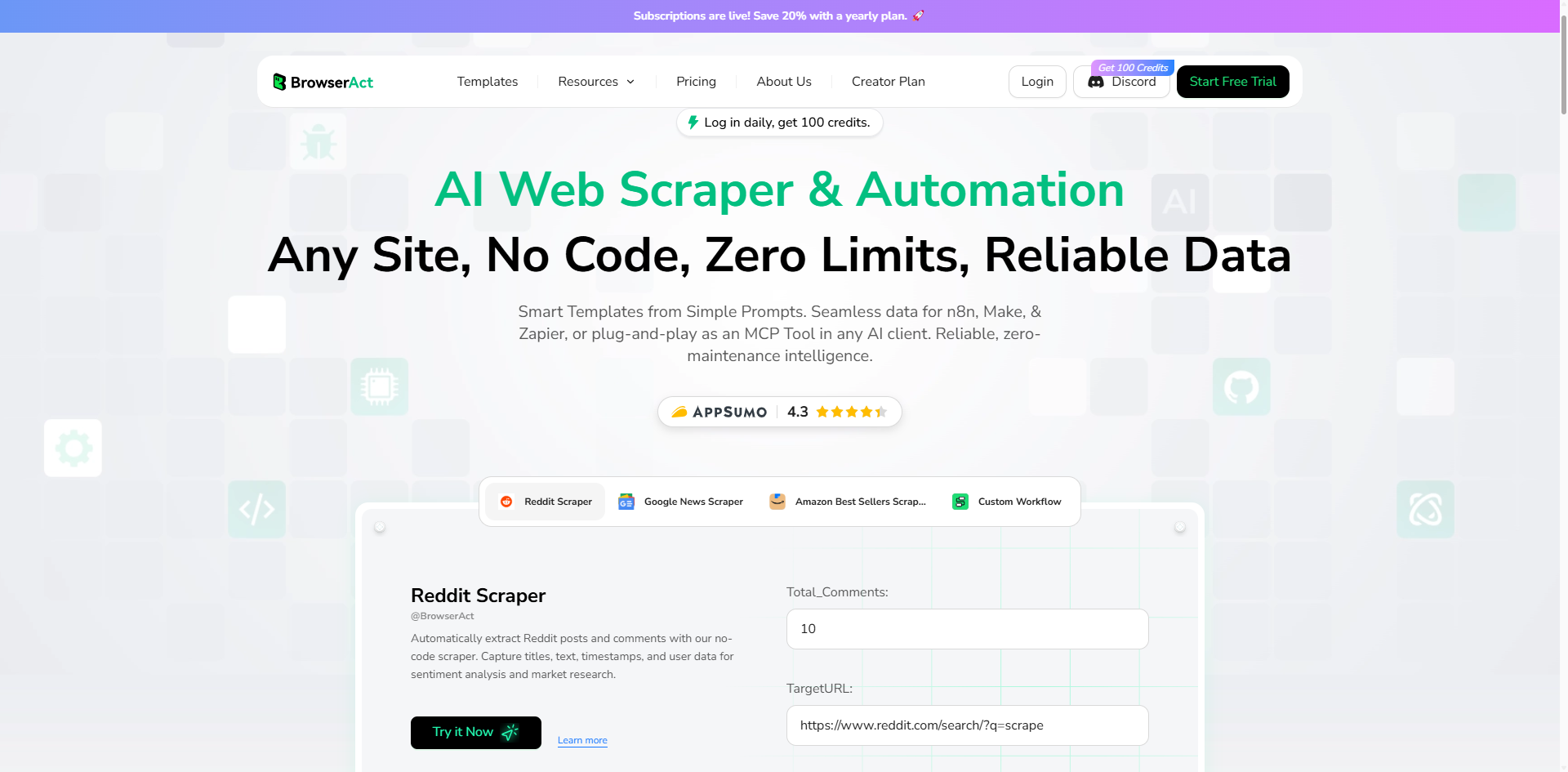

While Moltbot excels at local task automation, pairing it with specialized tools extends what it can do. BrowserAct is a browser automation and AI web scraping platform that addresses one of the most common limitations in AI agent workflows: reliable web data extraction.

Why BrowserAct Complements Moltbot Perfectly

BrowserAct brings enterprise-grade web scraping capabilities that integrate seamlessly with Moltbot's agentic architecture:

Advanced Anti-Detection Technology

- CAPTCHA Bypass: Automatically handles human verification challenges that would stop standard scrapers

- Global IP Rotation: Access geo-restricted content from any region

- Fingerprint Browser: Mimics authentic human browsing patterns to avoid detection

- Real User Simulation: Behavioral patterns that fool sophisticated anti-bot systems

No-Code Design with API Flexibility

Unlike traditional scraping tools requiring extensive programming knowledge, BrowserAct offers an intuitive interface while maintaining full API access. This means Moltbot can programmatically trigger scraping tasks without manual intervention.

Perfect for Agentic Workflows

When Moltbot needs to gather market research, monitor competitor pricing, aggregate news from multiple sources, or extract data for analysis, it can call BrowserAct's API to handle the heavy lifting. The combination creates a truly autonomous system where:

- Moltbot identifies data needs based on your conversations or scheduled tasks

- BrowserAct executes the scraping with bulletproof reliability

- Moltbot processes and presents the extracted information

- You receive actionable insights without touching a browser
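The four steps above can be sketched as a small pipeline. This is a minimal illustration only: it does not use any real Moltbot or BrowserAct API, and every name in it (`DataNeed`, `Scraper`, `run_workflow`, `fake_scrape`) is hypothetical. The scraping backend is passed in as a plain function so that the agent side stays independent of whichever service does the fetching.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class DataNeed:
    """Step 1 (hypothetical): what the agent decided it needs to look up."""
    topic: str
    urls: list[str]


# Hypothetical stand-in for a scraping backend: takes a URL, returns page text.
Scraper = Callable[[str], str]


def run_workflow(need: DataNeed, scrape: Scraper) -> str:
    """Steps 2-4: scrape each URL, reduce it to a headline, build a digest."""
    pages = {url: scrape(url) for url in need.urls}
    lines = [f"{need.topic}: {len(pages)} source(s)"]
    for url, text in pages.items():
        stripped = text.strip()
        headline = stripped.splitlines()[0] if stripped else "(empty)"
        lines.append(f"- {url}: {headline}")
    return "\n".join(lines)


def fake_scrape(url: str) -> str:
    """Offline placeholder used here instead of a real scraping service."""
    return f"Sample content from {url}\nmore text"


need = DataNeed("pricing", ["https://example.com/a", "https://example.com/b"])
digest = run_workflow(need, fake_scrape)
```

In a real setup, `fake_scrape` would be replaced by a function that calls the scraping service, and the digest would be sent to a chat channel instead of being held in a variable.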

Real-World Use Cases

- Competitive Intelligence: Set Moltbot to monitor competitor websites daily, with BrowserAct extracting pricing, product updates, and marketing copy—all delivered to your Slack each morning.

- Lead Generation: Combine Moltbot's communication capabilities with BrowserAct's extraction power to build prospect lists from LinkedIn, industry directories, or company websites.

- Content Aggregation: Have Moltbot compile weekly digests from dozens of sources, using BrowserAct to scrape full article text even from paywall-protected sites.

- Market Research: Deploy Moltbot to analyze trends by scraping review sites, forums, and social platforms via BrowserAct, then synthesizing insights using Claude's analysis capabilities.

Ready to unlock the full potential of agentic AI? Explore BrowserAct's capabilities and discover how it makes Moltbot an even more formidable automation powerhouse.

Start your free trial today and see what true AI autonomy looks like—no credit card required.
