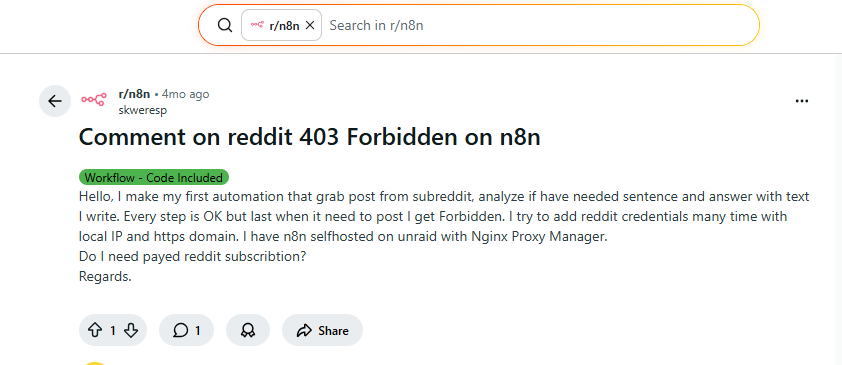

How to Fix Reddit 403 Forbidden Error in n8n [2026 Guide]

Getting a 403 Forbidden error when scraping Reddit with n8n? BrowserAct uses fingerprint browsers to bypass bot detection with a 95%+ success rate. No coding required.

You're building a powerful Reddit automation workflow in n8n. Maybe you're monitoring multiple subreddits for competitor mentions, scraping trending posts for market research, tracking brand sentiment across thousands of comments, or extracting lead data from niche communities. You've spent time carefully configuring everything—Reddit API credentials, OAuth 2.0 tokens, proper scopes, the HTTP Request node with all the right headers. Your workflow looks perfect on paper.

You hit execute. Then it happens: 403 Forbidden.

Not once. Not twice. Every single time you try to scrape data at scale, Reddit's API blocks you.

If you're reading this, you've probably spent frustrating hours:

- Reconfiguring Reddit API credentials multiple times, trying every combination

- Switching between Script app and Web App types, hoping one will work

- Testing from different network setups: local IPs, HTTPS domains, even VPNs

- Reading through Reddit's API documentation trying to understand what you're doing "wrong"

- Wrestling with self-hosted n8n on Unraid with Nginx Proxy Manager configurations

- Wondering: "Do I need to pay for Reddit's premium API access? Is my account flagged?"

The frustration is real. Your credentials are valid. Your OAuth setup passes all tests. The workflow logic is sound. Yet Reddit keeps rejecting your requests with that cryptic 403 error. You can manually browse Reddit just fine, but the moment you try to automate data extraction—even simple GET requests to read public posts—you hit a wall.

Here's the truth that most developers discover too late: the problem isn't your credentials, your OAuth configuration, or Reddit's API pricing tiers. It's that traditional API-based scraping methods are fundamentally incompatible with Reddit's 2026 anti-bot detection systems. No amount of credential tweaking will fix a detection problem.

What is a 403 Forbidden Error?

The meaning of a 403 Forbidden response is straightforward: the server understood your request but refuses to authorize it. It's the digital equivalent of "I know who you are, but you can't do this."

This is crucially different from other HTTP errors:

- 401 Unauthorized: "I don't know who you are—please log in"

- 403 Forbidden: "I know who you are, but you're not allowed to do this"

- 404 Not Found: "This resource doesn't exist"

- 429 Too Many Requests: "Slow down, you're making too many requests"

When you encounter HTTP error 403 Forbidden, it reflects a deliberate server-side decision. Although 403 belongs to the 4xx (client error) class, the request itself is usually well formed. The server understood it and actively chose to block it.
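If your n8n workflow has an error branch, it can help to turn these status codes into a concrete next step. The sketch below is a hypothetical Python helper: the `diagnose` name and the wording of each hint are assumptions, not part of n8n or Reddit's API. It maps the four codes above to what you would check first:

```python
from http import HTTPStatus

# Hypothetical mapping from the status codes above to a first thing to check.
# The wording is illustrative; neither n8n nor Reddit defines these hints.
DIAGNOSES = {
    HTTPStatus.UNAUTHORIZED: "Re-authenticate: token missing, expired, or invalid",
    HTTPStatus.FORBIDDEN: "Request refused: check scopes, then IP reputation and bot signals",
    HTTPStatus.NOT_FOUND: "Check the URL: the resource does not exist",
    HTTPStatus.TOO_MANY_REQUESTS: "Back off: the rate limit was exceeded",
}


def diagnose(status: int) -> str:
    """Return a short next step for an HTTP status code."""
    try:
        code = HTTPStatus(status)
    except ValueError:
        # Not a registered HTTP status code at all.
        return "Unknown status code"
    return DIAGNOSES.get(code, "Unhandled status")


if __name__ == "__main__":
    for status in (401, 403, 404, 429, 200):
        print(status, "->", diagnose(status))
```

Note that a 403 hint can only narrow things down: the status code alone does not tell you whether scopes, IP reputation, or bot detection triggered it.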

Common Causes of 403 Forbidden

- Insufficient permissions – Your credentials don't have the required scope

- IP address blocking – The server has blacklisted your IP range

- Bot detection systems – Automated behavior flagged (the main culprit for Reddit)

- Geographic restrictions – Content unavailable in your region

- Authentication token issues – Expired or invalid OAuth tokens

Important: In Reddit's case, 403 errors during automation are almost always due to bot detection—not permissions. Your credentials might be valid, but Reddit's systems have identified your traffic as automated.

Why Reddit Blocks Scraping in 2026

The Bot Detection Problem

Reddit uses sophisticated anti-bot systems specifically designed to detect and block automated scraping and data extraction. Even when you're just trying to read posts and comments (not post anything), Reddit's systems can identify your n8n workflow as a bot.

Detection Mechanisms:

- Request patterns: API calls have predictable timing and structure

- User-Agent analysis: Generic agents like Python's default "Python-urllib" are instantly flagged

- IP reputation: Data center IPs (such as a cloud VPS hosting n8n) are heavily scrutinized

- Browser fingerprints: Missing JavaScript execution, WebGL, Canvas APIs

- Behavioral signals: No mouse movement, scroll patterns, or human-like variance
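Of these signals, the User-Agent is the one you control directly. Reddit's API rules ask clients to identify themselves with a unique, descriptive User-Agent in the form `<platform>:<app ID>:<version string> (by /u/<reddit username>)`. A minimal Python sketch for building that string follows; the app ID and username in the example are placeholders you would replace with your own:

```python
def reddit_user_agent(platform: str, app_id: str, version: str, username: str) -> str:
    """Build a User-Agent in the format Reddit's API rules request:
    <platform>:<app ID>:<version string> (by /u/<reddit username>)
    """
    # Empty fields would produce a vague, generic-looking identifier.
    if not all((platform, app_id, version, username)):
        raise ValueError("platform, app_id, version and username are all required")
    # Accept "someone", "u/someone" or "/u/someone" for the contact name.
    name = username.removeprefix("/").removeprefix("u/")
    return f"{platform}:{app_id}:{version} (by /u/{name})"


# Placeholder values: replace the app ID and username with your own.
ua = reddit_user_agent("linux", "com.example.n8n-reddit-monitor", "v0.1.0", "your_username")
print(ua)  # linux:com.example.n8n-reddit-monitor:v0.1.0 (by /u/your_username)
```

You can paste the resulting string into the User-Agent header of an n8n HTTP Request node. This removes one obvious automation signal; on its own it does not address the other mechanisms in the list.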

Reddit severely restricts access from server IPs, applying stricter rate limits (as low as 10 requests per minute) and more aggressive bot detection compared to residential connections.

From Reddit's official documentation: "Reddit can and will freely throttle or block unidentified Data API users."
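Reddit also reports its rate-limit state on API responses through the `X-Ratelimit-Remaining` and `X-Ratelimit-Reset` headers (the reset value is in seconds). Below is a sketch of pacing requests from those headers, assuming they are present on the response; the even-spacing strategy and the `min_remaining` threshold are illustrative choices, not Reddit guidance:

```python
from typing import Mapping


def seconds_to_wait(headers: Mapping[str, str], min_remaining: float = 1.0) -> float:
    """Suggest a pause before the next call from Reddit's rate-limit headers.

    X-Ratelimit-Remaining: requests left in the current window
    X-Ratelimit-Reset:     seconds until the window resets
    """
    # HTTP header names are case-insensitive, so compare in lowercase.
    lowered = {name.lower(): value for name, value in headers.items()}
    try:
        remaining = float(lowered["x-ratelimit-remaining"])
        reset = float(lowered["x-ratelimit-reset"])
    except (KeyError, ValueError):
        # Headers missing or unparsable: no information to pace on.
        return 0.0
    if remaining <= min_remaining:
        # Budget (nearly) used up: wait for the window to reset.
        return reset
    # Otherwise spread the remaining requests evenly across the window.
    return reset / remaining


print(seconds_to_wait({"X-Ratelimit-Remaining": "100.0", "X-Ratelimit-Reset": "50"}))  # 0.5
```

Sleeping for the returned number of seconds between calls keeps a workflow inside its budget. This targets 429 responses; it does not change how Reddit classifies the traffic itself.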

Why "n8n 403 Forbidden" Happens Even With Valid Credentials

This is the paradox that confuses developers:

- ✅ Your OAuth credentials are technically valid

- ✅ Reddit's API documentation says you should be able to read data

- ❌ GET requests for scraping posts and comments still return 403 Forbidden
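Before attributing a 403 to bot detection, it is worth ruling out a malformed request. The sketch below builds, without sending, the two requests an application-only OAuth flow typically uses: a token request to `https://www.reddit.com/api/v1/access_token` with HTTP Basic auth, and a listing request to `https://oauth.reddit.com` carrying the bearer token and a descriptive User-Agent. The client credentials grant, the subreddit, and the `limit` value are assumptions for illustration; a script app acting as a specific user would use a different grant.

```python
import base64
import urllib.parse
import urllib.request

TOKEN_URL = "https://www.reddit.com/api/v1/access_token"
API_BASE = "https://oauth.reddit.com"


def token_request(client_id: str, client_secret: str, user_agent: str) -> urllib.request.Request:
    """Build (but do not send) an application-only token request."""
    # The app's client ID and secret go in an HTTP Basic Authorization header.
    creds = base64.b64encode(f"{client_id}:{client_secret}".encode()).decode()
    body = urllib.parse.urlencode({"grant_type": "client_credentials"}).encode()
    return urllib.request.Request(
        TOKEN_URL,
        data=body,
        method="POST",
        headers={"Authorization": f"Basic {creds}", "User-Agent": user_agent},
    )


def listing_request(subreddit: str, token: str, user_agent: str, limit: int = 25) -> urllib.request.Request:
    """Build (but do not send) a GET for a subreddit's newest posts."""
    query = urllib.parse.urlencode({"limit": limit})
    url = f"{API_BASE}/r/{urllib.parse.quote(subreddit)}/new?{query}"
    # Authenticated calls go to oauth.reddit.com with a bearer token.
    return urllib.request.Request(
        url,
        headers={"Authorization": f"bearer {token}", "User-Agent": user_agent},
    )
```

To actually send them, pass each request to `urllib.request.urlopen` and parse the JSON body; the `access_token` field of the token response is what `listing_request` expects. If requests shaped like this still return 403, the cause is more likely on Reddit's side of the decision than in your configuration.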

The Real Problem:

Even though you're only making GET requests (reading data, not writing), n8n's HTTP Request nodes send calls that Reddit's systems recognize as automated scraping:

- Missing browser-specific headers (Sec-CH-UA, Sec-Fetch-Dest)

- No cookies from genuine browsing sessions

- Absent JavaScript fingerprints (WebGL, Canvas, AudioContext)

- Server IP origin instead of residential connection

- Timing patterns without natural human variance

Bottom line: Reconfiguring credentials won't fix detection issues. API-based automation is inherently detectable in 2026, regardless of how you configure it.

How to Fix 403 Forbidden—BrowserAct

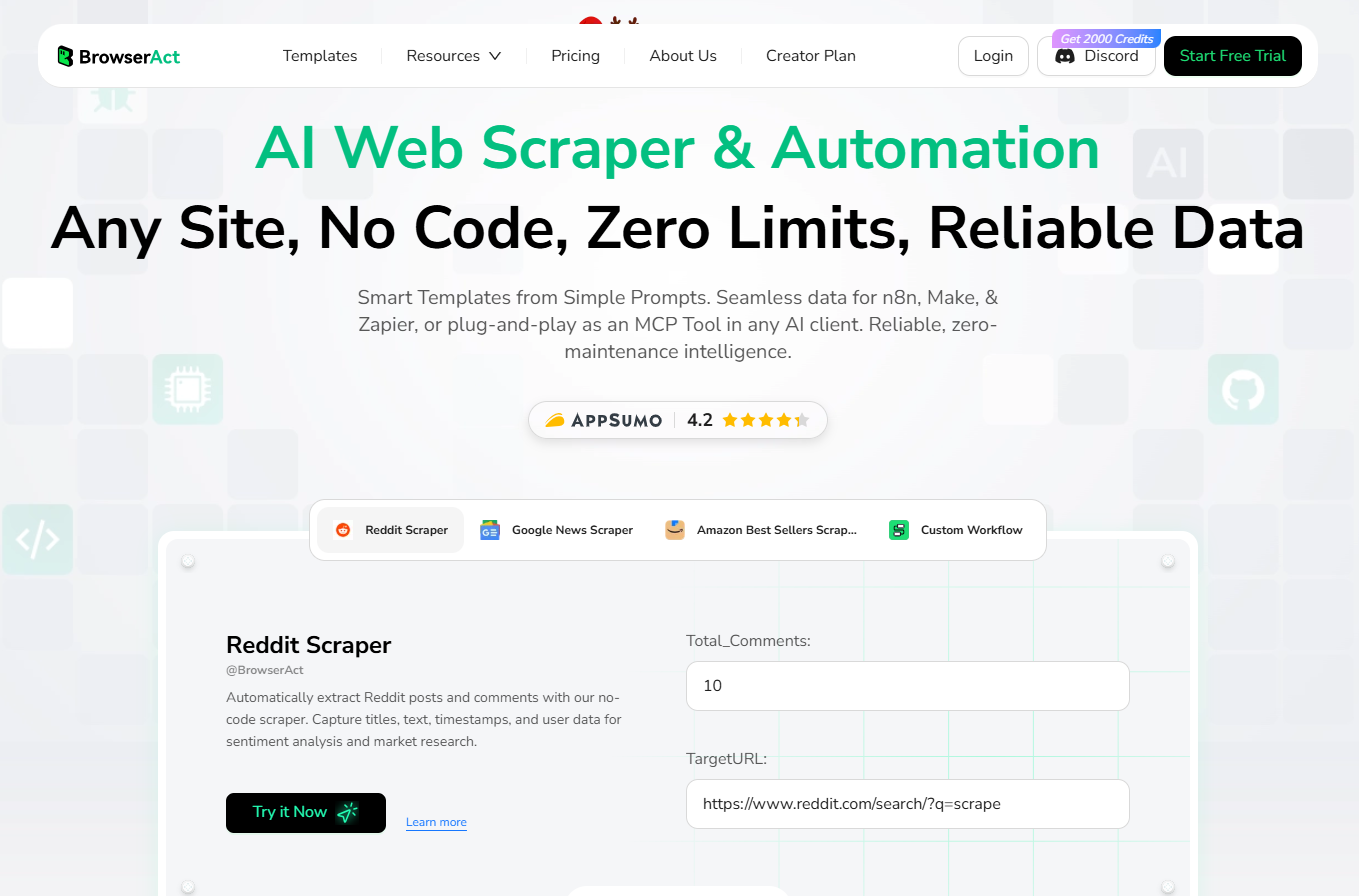

What is BrowserAct?

BrowserAct is an AI-powered web scraper that leverages fingerprint browser technology to bypass bot detection and pass human verification systems—making it exceptionally effective at solving 403 forbidden errors. You control it through natural language commands without writing any code: simply describe what data you want to extract, and BrowserAct's AI handles the technical execution. When integrated with n8n, you call BrowserAct through its API node, and it performs the actual scraping using fingerprint browsers that appear as genuine human visitors.

Core Capabilities:

- Fingerprint browser automation – Uses real browsers with unique fingerprints to interact with Reddit

- AI-powered navigation – Natural language workflow creation

- Anti-detection built-in – Mimics human behavior, bypasses CAPTCHAs

- Global residential IPs – Authentic residential connections in countries around the world

- 2FA support – Handles two-factor authentication automatically

- n8n integration – Native node for seamless workflow integration

- 98%+ success rate – Adapts to layout changes automatically

How BrowserAct Solves "Reddit 403 Blocked"

❌ n8n HTTP Request (Traditional):

n8n → Reddit API → 403 Forbidden (detected as bot)

✅ n8n + BrowserAct:

n8n → BrowserAct API → Real Browser → Reddit → Success

BrowserAct doesn't try to look like a browser—it is a browser. This means:

- Full JavaScript execution with real browser fingerprints

- Authentic cookie management and session persistence

- Natural timing with human-like variance

- Residential IP addresses instead of data center IPs

- Automatic CAPTCHA solving when needed

How to Use BrowserAct with n8n

Step 1: Set Up BrowserAct

- Visit browseract.com and create an account

- You'll receive 100 free credits daily to test (no credit card required)

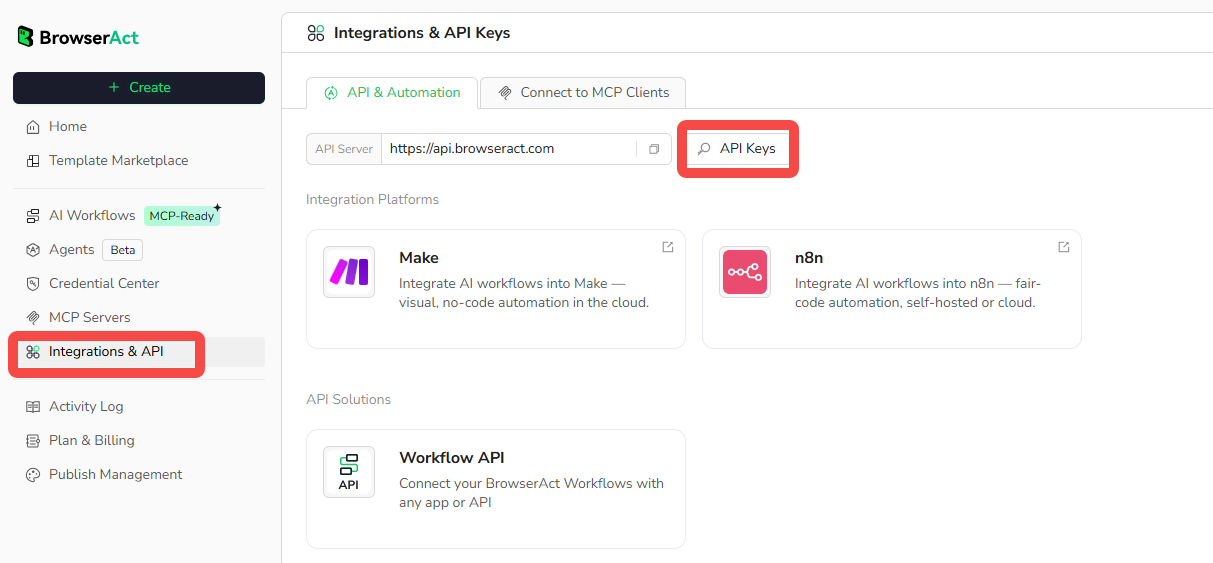

- Navigate to Settings → Integrations & API

- Click "Generate API Key" and copy it

Step 2: Use the Reddit Scraper Template

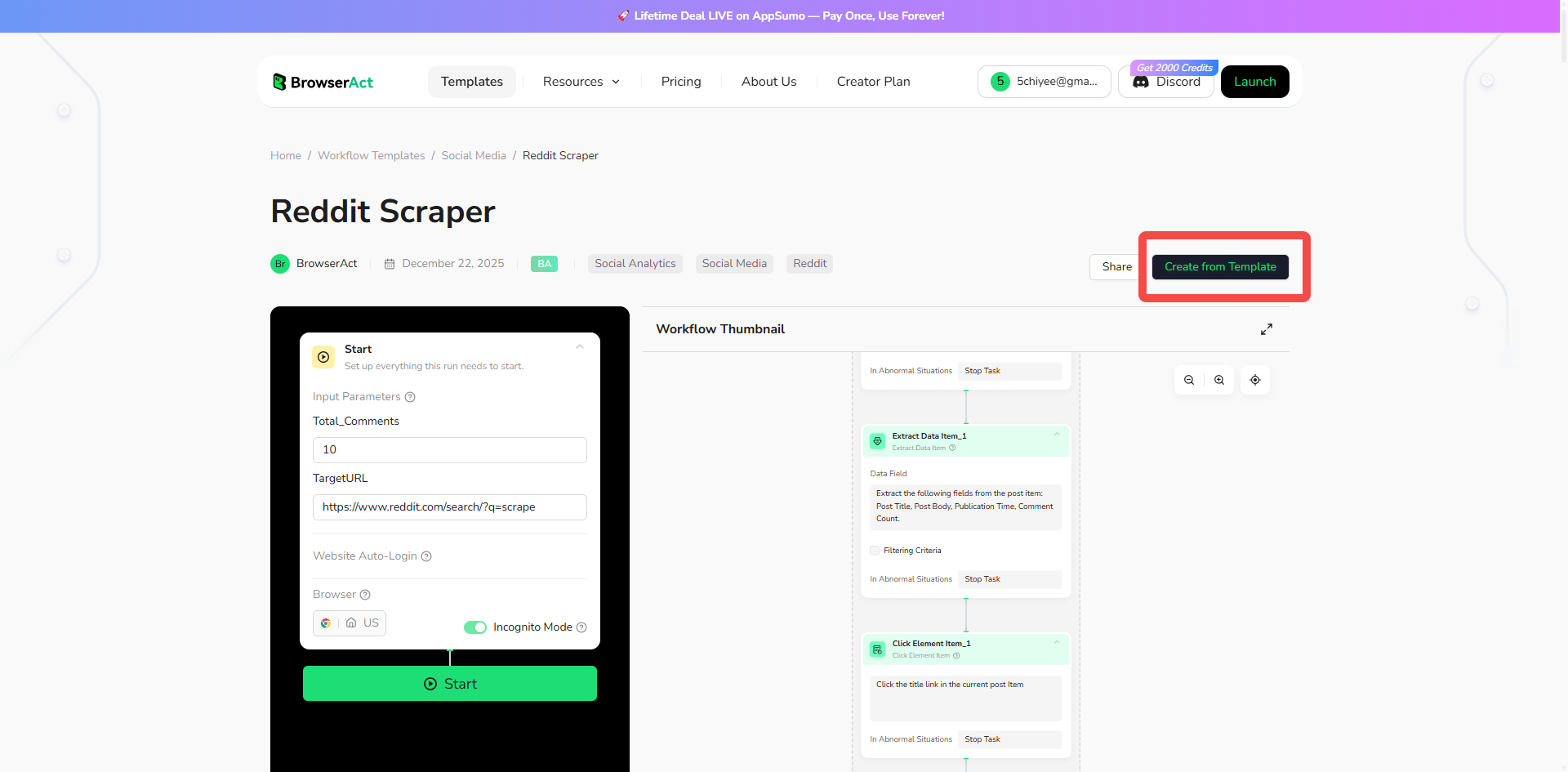

BrowserAct provides a ready-made template for Reddit scraping:

- Open the Reddit Scraper template

- Click "Create from Template" to add it to your workflows

- Customize the default parameters (or adjust the scraper to fit your needs):

  - Target URL: The Reddit URL you want to scrape (e.g., search results, subreddit page)

  - Total_Comments: Number of comments to extract per post

  - You can also modify the template to add filters, extract specific data fields, or change the scraping logic

- Publish the workflow

Cost estimate: Reddit scraping typically uses 80-150 credits per run, depending on how many posts you're extracting.
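To put that estimate in context against the free tier, here is the arithmetic as a tiny Python sketch. It uses only the figures quoted in this guide (100 free credits per day, 80-150 credits per run) and counts whole runs:

```python
def runs_per_day(daily_credits: int, credits_per_run: int) -> int:
    """Number of complete runs a daily credit allowance covers."""
    if credits_per_run <= 0:
        raise ValueError("credits_per_run must be positive")
    return daily_credits // credits_per_run


# Figures quoted in this guide: 100 free credits per day, 80-150 credits per run.
cheapest = runs_per_day(100, 80)   # 1 run
priciest = runs_per_day(100, 150)  # 0 runs
print(cheapest, priciest)  # 1 0
```

At these estimates, one day's free credits cover at most one full Reddit run, and a run at the upper end of the range would not fit within a single day's free allowance.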

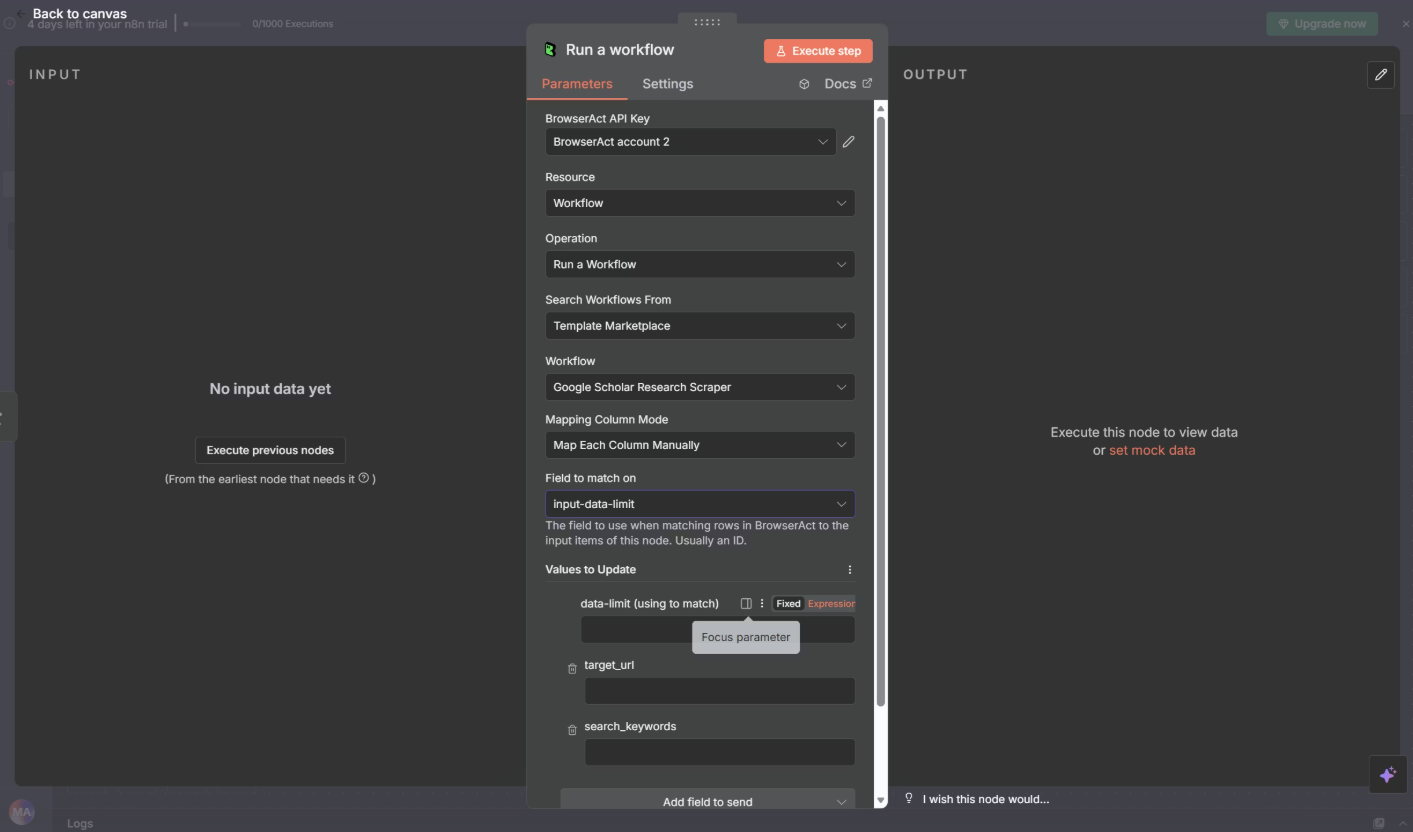

Step 3: Integrate with n8n

Add the BrowserAct Node:

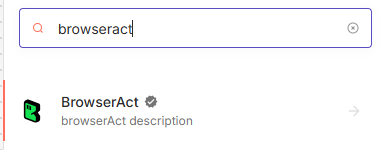

- Open your n8n workflow

- Click "+" to add a new node

- Search for "BrowserAct" and select it

- In Credentials, click "Create New"

- Paste your API key and save

- Test the connection

Configure the Workflow:

- Set Resource: "Workflow"

- Set Operation: "Run a workflow"

- Select your published Reddit workflow

- Map input parameters from previous nodes

- Test execution

Add Downstream Processing:

Connect the BrowserAct node output to:

- Google Sheets (log results)

- Slack (notifications)

- Email (reports)

- Conditional nodes (filter results)
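The filtering step can be as simple as a keyword and score check before results reach Google Sheets or Slack. A sketch in Python follows, assuming the scraper returns a list of posts with `title` and `score` fields; the actual field names depend on how you configure the template, and both function names are hypothetical:

```python
from typing import Iterable


def filter_posts(posts: Iterable[dict], keywords: Iterable[str], min_score: int = 0) -> list[dict]:
    """Keep posts whose title mentions any keyword and whose score clears min_score.

    The "title" and "score" field names are assumptions about the scraper output.
    """
    terms = [k.lower() for k in keywords]
    kept = []
    for post in posts:
        title = str(post.get("title", "")).lower()
        score = int(post.get("score", 0) or 0)
        # Case-insensitive substring match on any keyword, plus a score floor.
        if score >= min_score and any(term in title for term in terms):
            kept.append(post)
    return kept


def slack_summary(posts: list[dict]) -> str:
    """Format matching posts as one line each, for a Slack or email body."""
    if not posts:
        return "No matching Reddit posts."
    return "\n".join(f"- {p.get('title', '(untitled)')} ({p.get('score', 0)} pts)" for p in posts)
```

The same checks can be built with n8n's IF or Filter nodes if you prefer not to write code.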

Ready to Eliminate 403 Errors?

Start automating Reddit with BrowserAct today:

- Sign up at browseract.com (100 free credits daily)

- Use the template – Reddit scraper ready in minutes

- Connect to n8n – Replace failing HTTP nodes

- Run reliably – 98%+ success rate guaranteed

Get Started Now – No credit card required for testing.

Get Started Now – No credit card required for testing.

Solution Comparison

How Different Approaches Stack Up

When facing Reddit 403 errors, developers typically try several methods. Here's how they compare:

| Method | Success Rate | Setup Time | Monthly Cost | Maintenance | Bypasses 403? |
|---|---|---|---|---|---|
| Reconfigure OAuth | 10-20% | 30 mins | $0 | High (constant debugging) | ❌ No |
| Add Delays/Rate Limiting | 30-40% | 1 hour | $0 | Medium | ❌ Rarely |
| Proxy Rotation | 40-50% | 4-8 hours | $50-200 (proxies) | High | ⚠️ Sometimes |
| Self-built Puppeteer | 50-70% | 20-40 hours | $20-100 (hosting) | Very High | ⚠️ Sometimes |
| BrowserAct | 98%+ | 5 mins | $13+ | None | ✅ Yes |

Capability Comparison

| Capability | n8n HTTP Node | Puppeteer Script | BrowserAct |
|---|---|---|---|
| No Coding Required | ✅ Yes | ❌ No | ✅ Yes |
| Fingerprint Browsers | ❌ No | ⚠️ Manual setup | ✅ Built-in |
| CAPTCHA Handling | ❌ No | ⚠️ Needs third-party service | ✅ Automatic |
| Residential IPs | ❌ No | ⚠️ Extra cost | ✅ Included |
| 2FA Support | ❌ No | ⚠️ Complex | ✅ Automatic |
| Natural Language Control | ❌ No | ❌ No | ✅ Yes |
| n8n Integration | Native | Custom code | ✅ Native node |
| Adapts to Layout Changes | ❌ No | ❌ Breaks | ✅ AI adapts |
| Pay-as-you-go | N/A | N/A | ✅ Yes |

Best Practices & Optimization

Automate Responsibly

- Respect Reddit's ToS

  - Don't spam communities

  - Don't manipulate votes

  - Don't evade bans

  - Disclose automation when asked

- Add Genuine Value

  - Personalize comments with variables

  - Provide context-aware responses

  - Share helpful content, not just links

- Mimic Human Patterns

  - Vary active hours (don't post at exact times)

  - Add random delays between actions (5-15 minutes)

  - Don't reply to every match (replying to roughly 70-80% looks more natural)

  - Mix automated with manual activity

Common Mistakes to Avoid

❌ Over-Automation

- Bad: 50 posts/day from new account

- Good: 5-10 thoughtful replies/day

❌ Identical Comments

- Bad: Same text everywhere

- Good: Templates with variables for {author}, {topic}, {resource}

❌ Predictable Timing

- Bad: Exactly 2:00 PM daily

- Good: Random times, skip some days

❌ No Monitoring

- Bad: Set and forget

- Good: Daily checks, failure alerts, pause on warnings
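"Pause on warnings" can be made mechanical with a small failure counter. This hypothetical Python helper (the `FailureMonitor` name and the default of three consecutive blocks are assumptions, not Reddit guidance) stops a run after repeated 403 or 429 responses and stays paused until you clear `paused` yourself:

```python
from dataclasses import dataclass


@dataclass
class FailureMonitor:
    """Pause automation after too many consecutive blocked responses.

    Thresholds are illustrative; tune them to your own workflow.
    """

    max_consecutive: int = 3
    consecutive: int = 0
    paused: bool = False

    def record(self, status: int) -> bool:
        """Record one response status; return True if the run should pause."""
        if status in (403, 429):
            # Blocked or throttled: extend the failure streak.
            self.consecutive += 1
        elif 200 <= status < 300:
            # A success resets the streak.
            self.consecutive = 0
        if self.consecutive >= self.max_consecutive:
            # Once tripped, stay paused until someone resets it manually.
            self.paused = True
        return self.paused
```

Any other status, such as a 5xx, leaves the streak unchanged, so transient server errors neither trip nor clear the breaker. Pair a `True` result with a Slack or email alert so a human reviews the account before resuming.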

Conclusion

The 403 forbidden error when scraping Reddit isn't a credential problem—it's a fundamental limitation of API-based approaches. In 2026, Reddit's sophisticated bot detection systems can identify and block traditional API scraping requests, regardless of proper OAuth authentication. That's why reconfiguring credentials or switching between script and web app types doesn't solve the issue.

BrowserAct eliminates this problem by replacing API calls with real browser automation. When you integrate BrowserAct into your n8n workflows for data extraction, Reddit sees genuine browser traffic with authentic fingerprints, residential IPs, and human-like behavior patterns. Instead of dressing up API requests to resemble a browser, it sends real browser interactions that look like a human browsing Reddit.

The results speak for themselves: 98%+ success rate and zero maintenance. Compare that to manual work at $1,000/month or unreliable API methods that waste your time debugging 403 errors.

Start Fixing Your Reddit 403 Errors Today

Don't waste another hour debugging API credentials or reading Reddit's OAuth documentation. The solution is simpler than you think:

- Sign up at browseract.com

- Get 100 free credits daily to test (no credit card)

- Use the Reddit Scraper template – working in under 5 minutes

- Connect to your n8n workflow – replace HTTP nodes

- Run reliably with 98%+ success rate

👉 Get Started Now – Fix Your 403 Errors in Minutes

Join thousands of developers who've already solved the Reddit automation puzzle. Stop fighting bot detection and start getting results.

Related Resources

How to Fix "429 Too Many Requests" Error in n8n Workflows

How to Automate TikTok Scraping Without Apify (n8n Guide)

How to Make Money with n8n Workflow Automation 2026

n8n Reddit Automation in 9 Steps: Stop Manual Browsing

Latest Resources

20 Best Claude Skills in 2026: The List That Actually Helps

Why 99% of n8n Workflows Fail to Make Money (15 Lessons)

10 Killer AI Agent Skills That Are Dominating GitHub Now