Top 10 Reddit Scraper Tools for Data Extraction in 2026

Top 10 Reddit scrapers in 2026: 1. BrowserAct Reddit Scraper 2. ScraperAPI 3. Scrapy 4. Apify 5. Octoparse 6. Axiom 7. HasData 8. Bright Data 9. ZenRows 10. Pushshift

Reddit hosts millions of discussions on topics ranging from emerging tech trends to specialized hobbies, making it a valuable source of real-time insights for businesses, researchers, and enthusiasts. However, sifting through this content manually—scrolling through subreddits, threads, and comments—can quickly become time-consuming and inefficient, especially when dealing with large volumes of data. That's where dedicated tools come in, automating the process of collecting posts, comments, and metadata to help users focus on analysis rather than extraction.

These Reddit scraping tools are useful for tasks such as market research to track consumer opinions, sentiment analysis to measure reactions to events, content curation for reports, and academic studies on online communities. For example, a marketer could identify popular topics, or a researcher could examine discussion trends. Always use them responsibly: follow Reddit's API terms, respect user privacy, and comply with data laws like GDPR or CCPA to avoid misuse.

This post lists the top 10 Reddit data extraction tools for 2026, ranked by ease of use, features, and popularity based on recent search trends and user reviews. It includes no-code options for beginners and code-based ones for developers. At the top is BrowserAct Reddit Scraper, known for its simple interface and strong performance.

Before diving into the detailed reviews, here's a quick comparison table to help you find the best fit at a glance.

Comparison Table of Top Reddit Scrapers

To help you choose the best tool, here's a side-by-side comparison of the top 10 Reddit scrapers based on key factors like ease of use, primary strength, pricing, and ideal users. This table highlights the diversity from no-code options to developer-focused APIs, ranked as per our list.

| Rank & Tool | Ease of Use | Key Strength | Pricing | Best For |
| --- | --- | --- | --- | --- |
| #1 BrowserAct | No-code (visual template) | Easy customization, IP management, exports to CSV/JSON | Free trial; pay-as-you-go; LTD on AppSumo | Beginners & marketers |
| #2 ScraperAPI | Code-based (API) | Anti-bot bypass, proxy rotation, JavaScript rendering | Free trial; from $49/month | Developers for scalable, reliable extraction |
| #3 Scrapy | Code-based (Python framework) | Asynchronous crawling, extensible for complex setups | Free/open-source | Technical users for scalable scraping |
| #4 Apify | Hybrid (cloud actor with code options) | Cloud runs, anti-blocking, developer API integration | $45/month + usage; free trial | Data analysts for large projects |
| #5 Octoparse | No-code (drag-and-drop) | Batch URL processing, free templates for posts/comments | Freemium; from $75/month | Business users for quick extractions |
| #6 Axiom | No-code (Chrome extension) | Bot building, scheduling, Google Sheets integration | Free plan; from $15/month | Non-technical teams for automation |
| #7 HasData | API-based (HTTP requests) | Real-time API, proxy rotation, custom queries | Pay-per-result from $0.001; from $49/month | Developers integrating into apps |
| #8 Bright Data | API-based (enterprise) | Posts/Comments APIs, robust proxies for scale | From $0.75/1k requests; custom from $400/month | Big data teams for high-volume |
| #9 ZenRows | API-based (headless browser) | Anti-bot bypassing, JSON outputs, pagination | Free tier; from $69/month | Tech teams for programmable access |
| #10 Pushshift | API-based (archives) | Historical data dumps, advanced queries | Free with limits | Researchers for bulk historical data |

This comparison shows a mix of free options for coders (like Scrapy and Pushshift) and user-friendly no-code tools (like BrowserAct and Octoparse), with enterprise picks for scale (e.g., Bright Data). Consider your technical skills and data needs: beginners often prefer no-code tools for speed, while developers opt for customizable APIs.

Ready to get started with the top pick? Try BrowserAct Reddit Scraper for free today and automate your data extraction effortlessly!

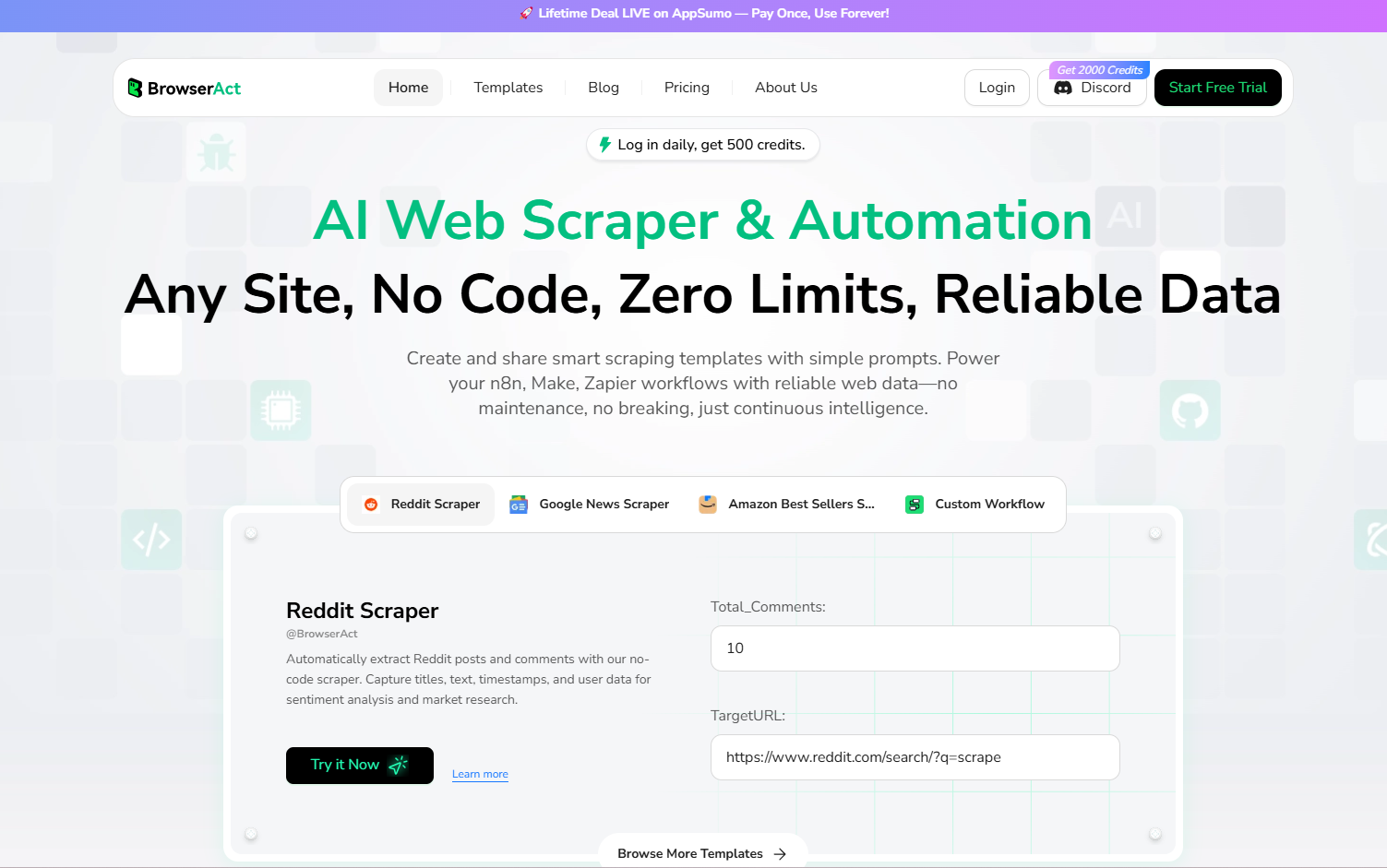

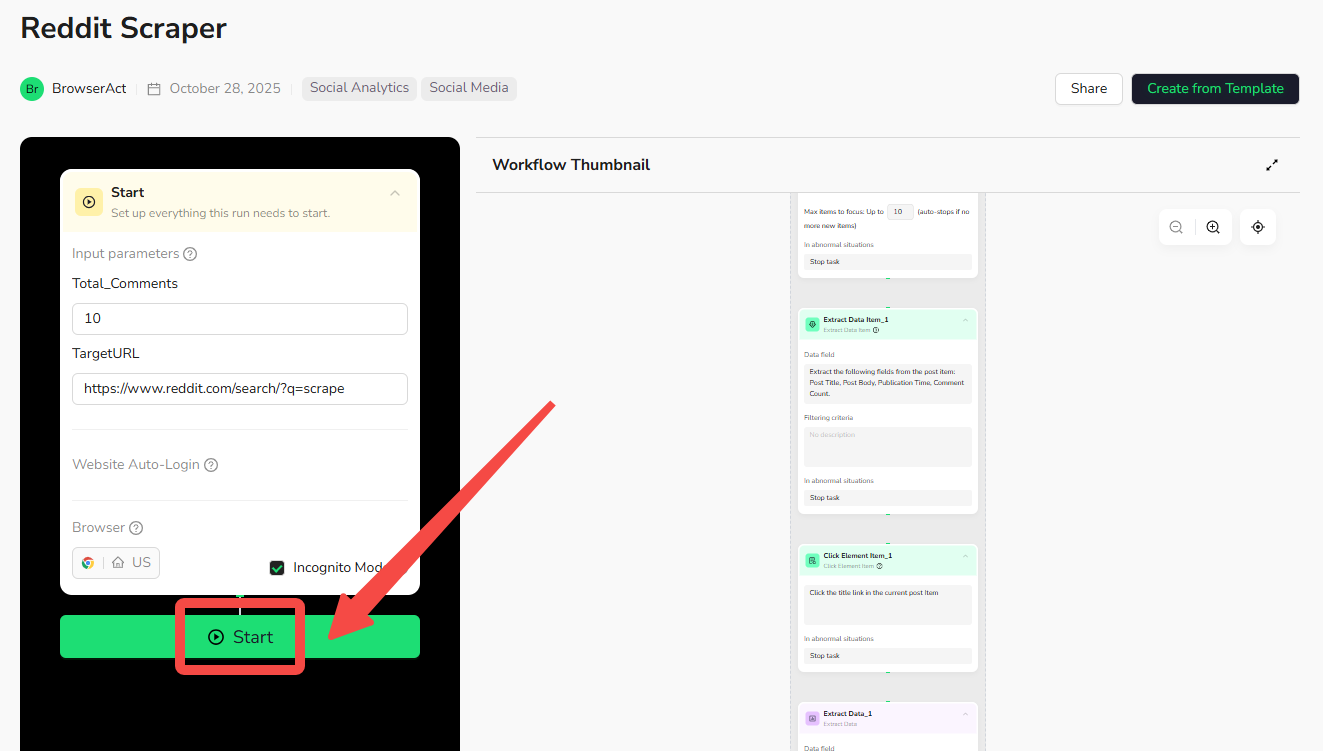

#1: BrowserAct Reddit Scraper

This no-code web scraping template from BrowserAct uses browser automation to extract Reddit posts and comments efficiently, without requiring any programming. It allows users to input a Reddit URL, then automatically collects data like post details and nested comments, with options for cloud-based or local execution. Designed for quick deployment, it's ideal for tasks like market research or content analysis, and it supports free trials for testing.

Key Features

- Free and Simple Setup: This Reddit scraper is free to use right in your browser, with no installation needed and zero technical skills required—just fill out a form to get started, avoiding any coding complexities.

- Reliable Extraction with IP Management: Built-in IP rotation helps prevent blocks by network security systems, keeping runs stable so they capture full, accurate data without missing posts or comments.

- Comprehensive Post and Comment Capture: Automatically gathers post details (e.g., title, score) and nested comments (e.g., text, author), using dual-level extraction to maintain full context and hierarchical relationships.

- Customizable Parameters and Selection: Easily adjust settings like Total_Comments for depth (e.g., 10–50 per post) or max loop items for post counts (e.g., 5–10+), supporting searches, subreddits, and trending pages.

- Flexible Tweaks Without Code: Start with the ready template and modify scraping steps to save specific data or add actions, allowing easy personalization to match your needs.

- Integration with Automation Tools: Seamlessly connects to platforms like Make.com and n8n for scheduling (e.g., daily runs) and automatic data saving to Google Sheets or similar apps.

- Versatile Export Options: Save collected data in user-friendly formats like CSV for spreadsheets or JSON for analysis, making it simple to integrate with other software.
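To make the "nested comments" and "CSV or JSON" points concrete, here is a minimal sketch of how a comment tree can be flattened into spreadsheet rows while keeping parent/child links. The sample record and its field names (`title`, `score`, `author`, `text`, `replies`) are illustrative assumptions, not BrowserAct's actual export schema.

```python
import csv
import io
import json

# Hypothetical sample shaped like one post with nested comments;
# the field names are illustrative, not BrowserAct's actual schema.
post = {
    "title": "Example post",
    "score": 42,
    "comments": [
        {"author": "user_a", "text": "Top-level comment", "replies": [
            {"author": "user_b", "text": "Nested reply", "replies": []},
        ]},
        {"author": "user_c", "text": "Another top-level comment", "replies": []},
    ],
}


def flatten_comments(comments, parent_id=None, depth=0, rows=None):
    """Walk the comment tree depth-first, recording each comment's parent and depth."""
    if rows is None:
        rows = []
    for comment in comments:
        comment_id = len(rows) + 1  # simple sequential id for the flat table
        rows.append({
            "id": comment_id,
            "parent_id": parent_id,
            "depth": depth,
            "author": comment["author"],
            "text": comment["text"],
        })
        flatten_comments(comment["replies"], comment_id, depth + 1, rows)
    return rows


def to_csv(rows):
    """Serialize the flat rows to CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=["id", "parent_id", "depth", "author", "text"]
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = flatten_comments(post["comments"])
csv_text = to_csv(rows)                 # flat rows, hierarchy kept via parent_id
json_text = json.dumps(post, indent=2)  # JSON keeps the nesting as-is
```

CSV suits spreadsheet work because every comment becomes one row, while JSON preserves the original hierarchy for programmatic analysis.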

Pros and Cons

- Pros: Easy no-code setup with a visual interface; free to start with pay-as-you-go options; reliable for structured post and comment extraction; integrates with BrowserAct's ecosystem.

- Cons: Dependent on Reddit's site changes.

Best For

Beginners, marketers, or researchers needing simple, automated Reddit data collection for insights without coding expertise.

Pricing

Free trial available with 500 credits, daily login rewards of 100 credits, and a 100-credit bonus for joining Discord; pay-as-you-go model where you buy credits as needed; alternatively, purchase a lifetime deal (LTD) on AppSumo.

Ready to automate your Reddit data extraction? Try BrowserAct Reddit Scraper for free today and start pulling insights effortlessly—no coding required!

#2: ScraperAPI

ScraperAPI is a web scraping API designed for high-volume, enterprise-grade data extraction, serving businesses from startups to large corporations. It removes much of the technical overhead of Reddit scraping by automatically managing IP rotation, CAPTCHA solving, and JavaScript rendering. Because requests are routed through a single API endpoint, technical teams can focus on parsing data, and the service is positioned around ethical collection practices that comply with GDPR and CCPA.

Key Features

- AI-Powered Proxy Management: Automatically rotates through a vast, geo-targeted proxy network to bypass Reddit’s rate limits and IP blocks consistently.

- Real-time CAPTCHA & Anti-Bot Bypass: Uses advanced logic to solve CAPTCHAs and manage browser fingerprinting, ensuring uninterrupted access to protected community pages.

- JavaScript Rendering: Effortlessly renders dynamic content to capture nested comments and asynchronously loaded posts with a simple URL parameter.

- Built for Enterprise Scale: Handles massive asynchronous workloads with high concurrency and near-perfect success rates for mission-critical data needs.
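As a rough illustration of the "single API endpoint" and "simple URL parameter" ideas, the sketch below builds a proxied request URL using only the standard library. The endpoint and the `api_key`, `url`, and `render` parameter names are assumptions based on ScraperAPI's commonly documented usage; check the current documentation before relying on them. The helper name `build_request_url` is made up for this example.

```python
from urllib.parse import urlencode

# Assumed endpoint and parameter names; confirm against ScraperAPI's current docs.
API_ENDPOINT = "https://api.scraperapi.com/"


def build_request_url(api_key, target_url, render_js=False):
    """Return the proxied URL for target_url, optionally asking for JavaScript rendering."""
    params = {"api_key": api_key, "url": target_url}
    if render_js:
        params["render"] = "true"
    return f"{API_ENDPOINT}?{urlencode(params)}"


request_url = build_request_url(
    api_key="YOUR_API_KEY",  # placeholder, not a real key
    target_url="https://www.reddit.com/r/python/top/",
    render_js=True,
)
print(request_url)
```

The resulting URL can be fetched with any HTTP client; the target address is percent-encoded so that its own query string does not collide with the API's parameters.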

Pros and Cons

- Pros: Simplifies scalable scraping by abstracting all anti-bot complexity; ethical and compliant data collection; pay only for successful requests.

- Cons: Requires basic API integration knowledge; cost scales with high-volume credit usage.

Best For

Developers and businesses—from startups to enterprises—who need a highly reliable, programmable web scraping tool to extract large volumes of Reddit data without managing infrastructure.

Pricing

Free tier available (5,000 credits); paid plans start at $49/month for 100,000 credits, with enterprise-level tiers for high-concurrency needs.

#3: Scrapy

Scrapy is an open-source Python framework designed for web crawling and data extraction, which can be easily configured to scrape Reddit by creating custom "spiders" that navigate pages, follow links, and pull content like posts and comments dynamically.

Key Features

- Asynchronous Processing: Handles multiple requests concurrently using Twisted's non-blocking I/O, enabling fast and efficient crawling of large sites like Reddit.

- Selector Support: Built-in tools for extracting data with XPath or CSS selectors, making it simple to target specific elements such as post titles, comments, or metadata.

- Extensible Architecture: Allows customization through middlewares, pipelines, and items for processing data, handling pagination, or integrating with databases.
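For a sense of how concurrency and politeness are tuned in practice, here is a sketch of a `settings.py` fragment. The setting names are standard Scrapy settings; the project name, contact address, output path, and all values are illustrative assumptions rather than recommended defaults.

```python
# settings.py for a hypothetical Scrapy project that crawls Reddit pages.
# The setting names are standard Scrapy settings; the values are illustrative.

BOT_NAME = "reddit_research"  # hypothetical project name

# Identify the crawler honestly and honor robots.txt.
USER_AGENT = "reddit_research (contact: you@example.com)"
ROBOTSTXT_OBEY = True

# Concurrency: Scrapy keeps several requests in flight at once.
CONCURRENT_REQUESTS = 8
CONCURRENT_REQUESTS_PER_DOMAIN = 2
DOWNLOAD_DELAY = 1.0  # seconds to wait between requests to the same site

# AutoThrottle adapts the delay to the server's response times.
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 1.0
AUTOTHROTTLE_MAX_DELAY = 30.0

# Write scraped items to a JSON Lines file.
FEEDS = {
    "posts.jsonl": {"format": "jsonlines", "encoding": "utf8"},
}
```

Keeping the per-domain concurrency low and enabling AutoThrottle trades some speed for a lower chance of being rate limited.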

Pros and Cons

- Pros: Highly efficient for large-scale scraping; free and open-source with a strong community; flexible for complex, custom Reddit configurations.

- Cons: Requires Python coding knowledge and setup time; no built-in no-code interface; can be resource-intensive for very large jobs.

Best For

Developers or technical users building scalable, custom web scraping solutions for Reddit data.

Pricing

Free and open-source.

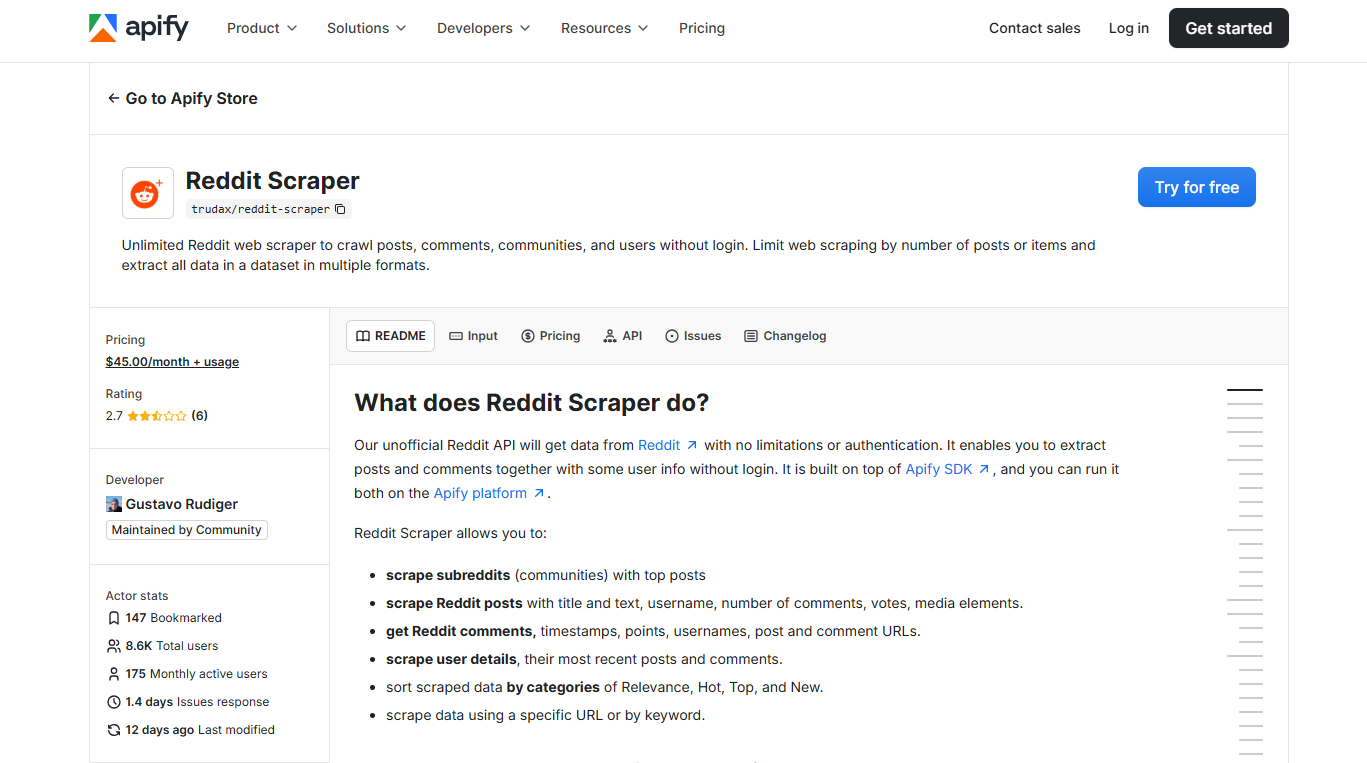

#4: Apify Reddit Scraper

Apify Reddit Scraper is a pre-built, cloud-based scraping tool designed for extracting data from Reddit, suitable for developers and large-scale projects. It allows users to input URLs or keywords to gather posts, comments, and other details, with flexible sorting and filtering options. Running on Apify's servers, it handles heavy workloads efficiently and integrates with code for customization, making it ideal for automated data collection in research, analysis, or integration workflows.

Key Features

- Flexible Scraping Inputs: Scrape by providing direct URLs for posts, users, or communities, or use keywords for searches sorted by relevance, hot, top, new, or comments, with time filters like hour, day, week, month, or year.

- Cloud-Based Execution with Anti-Blocking: Runs on Apify's servers to handle large jobs without local resource strain, including proxy support and features to avoid blocks for reliable data extraction.

- Customizable Limits and Developer Integration: Set limits on max items (e.g., posts, comments, communities) via JSON parameters, with API access, libraries for Python/Node.js, and extendable output via custom JavaScript for additional data fields.
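The JSON parameters mentioned above could be assembled and checked before a run along the following lines. This is only a sketch: the allowed sort and time-filter values come from the feature list, but the key names (`searches`, `sort`, `time`, `maxItems`) and the helper `build_run_input` are hypothetical and may not match the actor's real input schema.

```python
import json

# Sort and time-filter values as listed in the feature description above.
ALLOWED_SORTS = {"relevance", "hot", "top", "new", "comments"}
ALLOWED_TIME_FILTERS = {"hour", "day", "week", "month", "year"}


def build_run_input(search_terms, sort="relevance", time_filter="week", max_items=50):
    """Build a JSON-serializable input dict; the key names here are hypothetical."""
    if sort not in ALLOWED_SORTS:
        raise ValueError(f"unsupported sort: {sort!r}")
    if time_filter not in ALLOWED_TIME_FILTERS:
        raise ValueError(f"unsupported time filter: {time_filter!r}")
    if max_items < 1:
        raise ValueError("max_items must be at least 1")
    return {
        "searches": list(search_terms),
        "sort": sort,
        "time": time_filter,
        "maxItems": max_items,
    }


run_input = build_run_input(
    ["web scraping"], sort="top", time_filter="month", max_items=25
)
print(json.dumps(run_input, indent=2))
```

Validating the input locally catches typos in sort or time values before any paid cloud run starts.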

Pros and Cons

- Pros: Powerful cloud-based execution for scalability; extensive customization via API and code; supports large datasets; developer-friendly libraries and integrations.

- Cons: Requires some technical knowledge for advanced use; pay-per-use can add up for frequent large jobs; potential learning curve for non-developers.

Best For

Developers, data analysts, or teams handling large-scale Reddit data extraction for projects requiring customization and integration.

Pricing

$45.00/month + usage-based costs; free trial available for testing with limited runs.

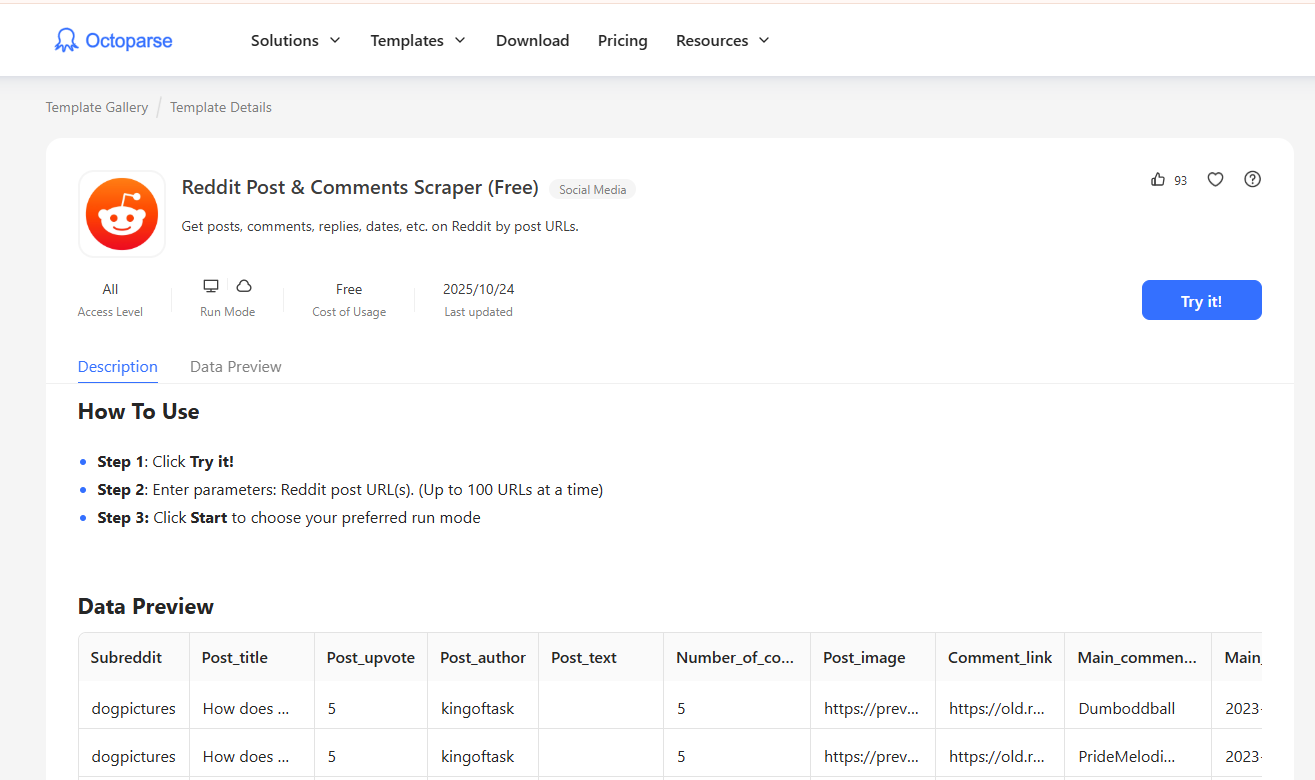

#5: Octoparse Reddit Post & Comments Scraper

A visual no-code platform that turns websites like Reddit into structured data sources through drag-and-drop workflows.

Key Features

- Pre-built Free Template: Ready-to-use scraper for extracting Reddit post and comment data (e.g., titles, upvotes, authors, text, images, timestamps, and nested replies) without coding, accessible directly via browser.

- Batch URL Input: Supports entering up to 100 Reddit post URLs at once for efficient scraping of multiple threads, with options to choose run modes like local or cloud execution.

- Structured Data Output: Provides detailed previews and exports of scraped data, including subreddit details, comment links, and hierarchical replies, in formats suitable for analysis.

Pros and Cons

- Pros: User-friendly no-code interface with free templates; supports batch processing and nested data extraction; easy setup for non-technical users.

- Cons: Limited to predefined templates without advanced customization; may require paid plans for unlimited runs or cloud features; dependent on site structure stability.

Best For

Business users seeking quick, visual tools for extracting structured Reddit data for marketing or research.

Pricing

Freemium model with free templates and basic usage; paid plans start from $75/month for advanced features and unlimited tasks.

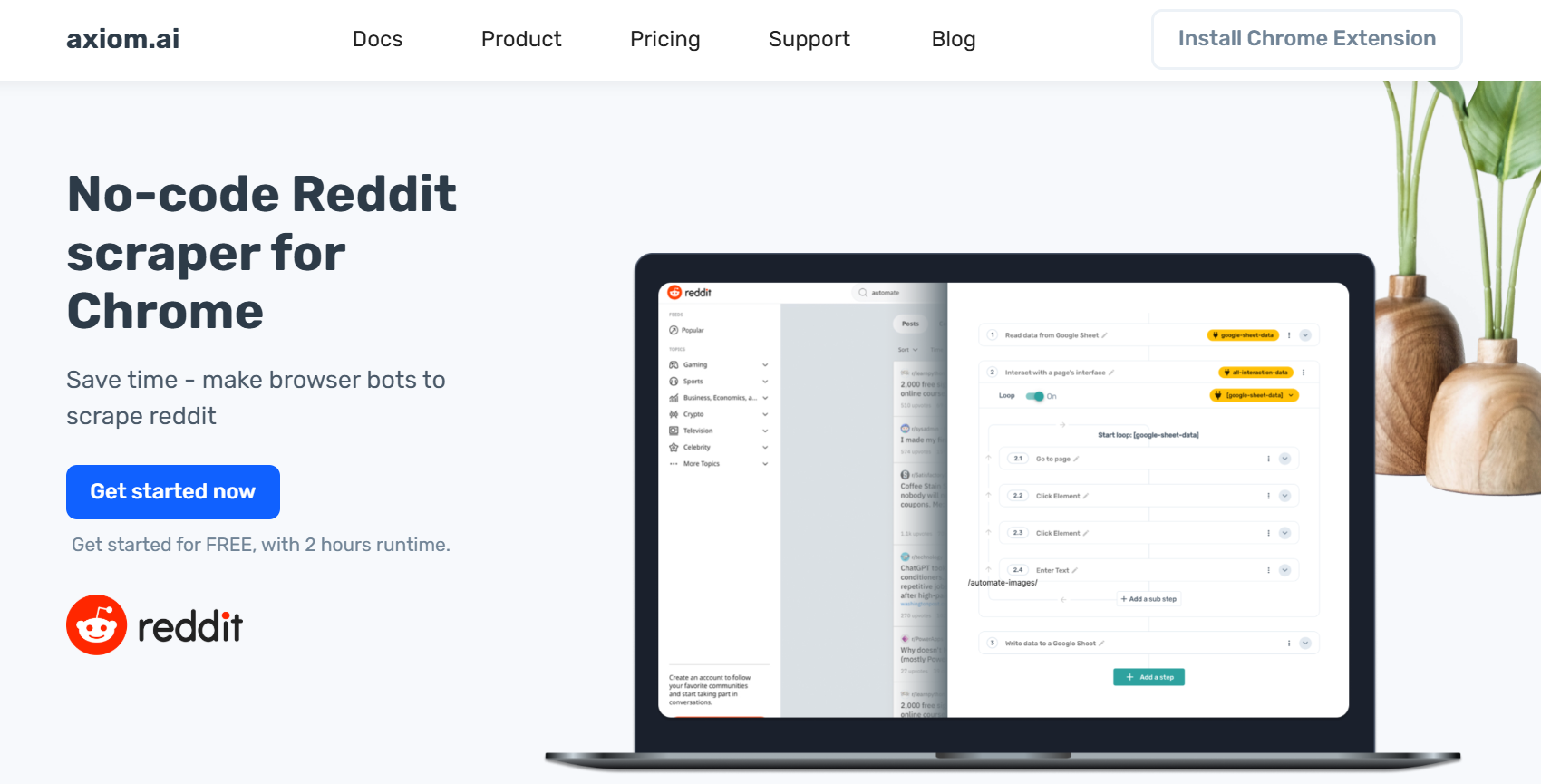

#6: Axiom Reddit Scraper

Axiom.ai offers a no-code browser automation tool that allows users to scrape Reddit data like posts, comments, and metadata directly from subreddits or search results, using a simple drag-and-drop interface in a Chrome extension for quick setups without programming.

Key Features

- No-Code Bot Building: Create automated workflows via a visual builder to navigate Reddit pages, loop through posts or comments, and extract elements like titles, authors, upvotes, timestamps, and links without writing code.

- Data Extraction and Export: Select and pull structured data from Reddit (e.g., post content, user details, comment threads) and export directly to Google Sheets, CSV, or other integrations for easy analysis.

- Scheduling and Cloud Runs: Set up bots to run on schedules or in the cloud, with built-in handling for pagination and dynamic content to ensure complete data collection.

Pros and Cons

- Pros: Completely no-code with an intuitive Chrome extension; fast setup for beginners; integrates seamlessly with tools like Google Sheets; free tier available for testing.

- Cons: Limited to browser-based automation (requires Chrome); advanced customizations may need paid features; dependent on Reddit's layout changes.

Best For

Non-technical users or small teams needing quick, automated Reddit data extraction for monitoring trends or research without coding expertise.

Pricing

Free plan with basic features and limited runs; paid plans start from $15/month for unlimited bots and advanced automation.

#7: HasData Reddit Scraper

HasData provides a Reddit scraper API that allows users to extract structured data from Reddit posts, comments, subreddits, and user profiles via simple HTTP requests, designed for developers and businesses needing scalable, real-time data without building scrapers from scratch.

Key Features

- API-Based Extraction: Fetch detailed data like post titles, content, upvotes, authors, timestamps, and comments using endpoints for subreddits, searches, or specific URLs, with support for pagination and filtering.

- Scalable and Reliable: Handles large volumes with built-in proxy rotation and rate limit management to avoid blocks, delivering data in JSON format for easy integration into apps or databases.

- Custom Query Options: Search by keywords, sort results (e.g., hot, new, top), and limit outputs by date ranges or item counts, including extraction of media links and user metadata.

Pros and Cons

- Pros: Easy API integration with no infrastructure setup; cost-effective pay-per-result pricing; reliable for high-volume queries with minimal downtime.

- Cons: Requires API keys and some development knowledge; limited to predefined data fields without custom requests; potential additional costs for heavy usage.

Best For

Developers and teams integrating Reddit data into applications, analytics, or monitoring tools for real-time insights.

Pricing

Pay-per-result starting at $0.001 per record; free trial with 1,000 credits; subscription plans from $49/month for higher volumes.

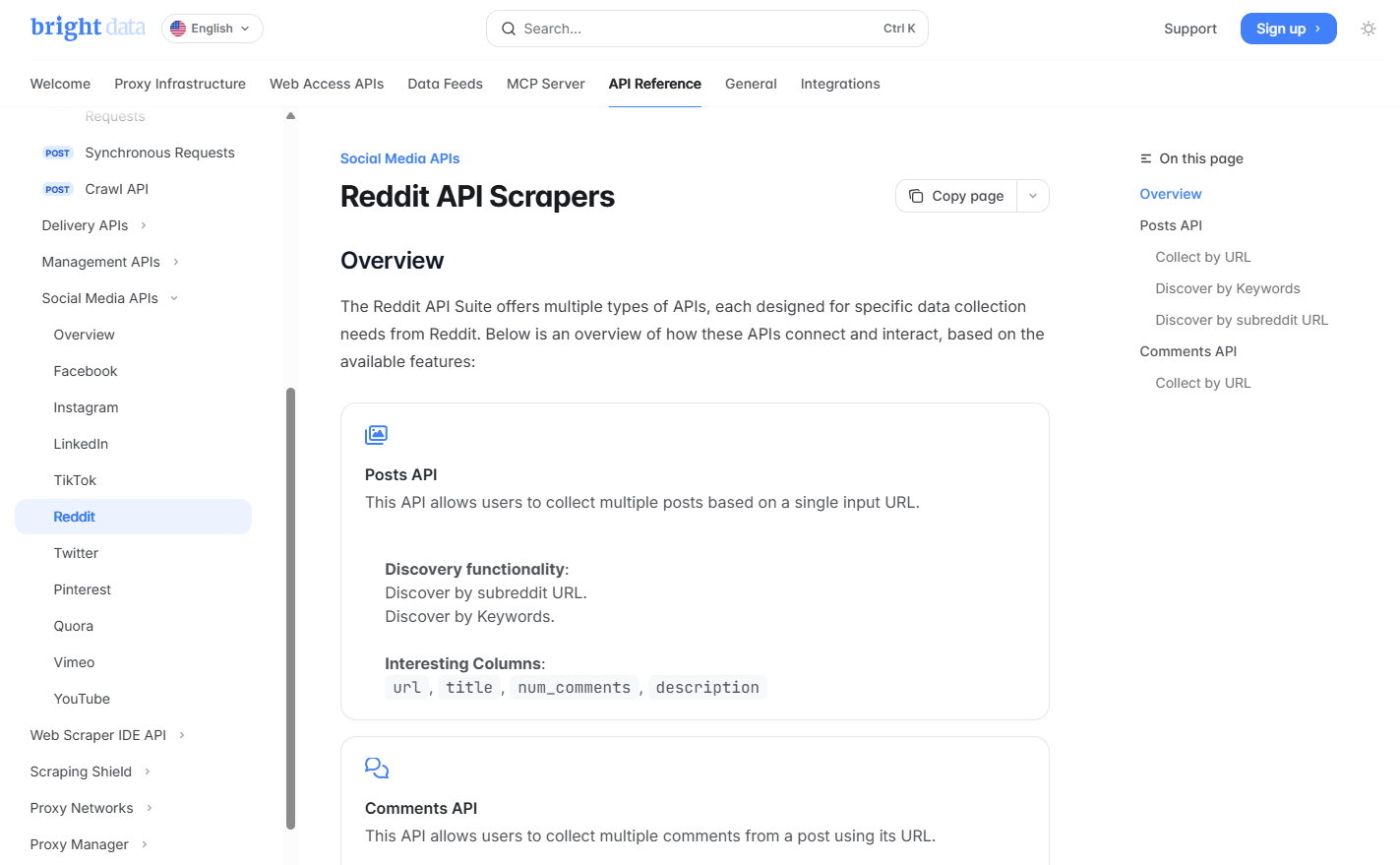

#8: Bright Data Reddit API Scraper

An enterprise-grade solution with proxy networks to bypass restrictions, focusing on large-scale Reddit data collection.

Key Features

- Posts API for Discovery and Collection: Retrieve detailed post data by URL, keywords (with date filtering and post limits), or subreddit URL (with sorting like new, top, or hot), including titles, descriptions, comments, media, and engagement metrics.

- Comments API for In-Depth Extraction: Collect comments from a specific post or comment URL, with options to filter by days back, capturing details like comment text, user info, replies, upvotes, and community attributes.

- Comprehensive Output with Integration: Provides structured data on posts, comments, users, and communities (e.g., num_upvotes, community_members_num), integrated with Bright Data's proxy and unlocking features for reliable, high-volume scraping.

Pros and Cons

- Pros: Robust proxy networks for bypassing blocks; supports large-scale, detailed data retrieval via APIs; ideal for enterprise-level analysis with rich metrics and media extraction.

- Cons: Geared toward advanced users with API integration needs; pricing can be high for small-scale use; requires authentication and setup for full functionality.

Best For

Big data teams handling extensive Reddit data collection for analytics, monitoring, or research.

Pricing

Pay-per-use starting from $0.75 per 1,000 requests for scrapers; custom scraper plans from $400/month.

#9: ZenRows Reddit Scraper

ZenRows provides a scraper API that uses headless browser technology and anti-bot measures to extract Reddit data like posts, comments, and metadata without getting blocked, offering simple integration via HTTP requests for developers and automated workflows.

Key Features

- Anti-Blocking Capabilities: Built-in proxy rotation, CAPTCHA solving, and fingerprinting to bypass Reddit's restrictions, ensuring high success rates for large-scale scraping.

- Structured Data Extraction: Pulls detailed Reddit elements such as post titles, content, authors, upvotes, timestamps, comments, and media links in clean JSON format via easy API calls.

- Customizable Requests: Supports parameters for targeting subreddits, searches, or specific URLs, with options for pagination, JavaScript rendering, and concurrency for efficient data retrieval.

Pros and Cons

- Pros: Reliable bypassing of anti-scraping measures; developer-friendly API with fast response times; scalable for high-volume needs with minimal setup.

- Cons: Requires API integration knowledge; no no-code interface; costs can accumulate for extensive usage beyond free limits.

Best For

Developers or tech teams needing robust, programmable access to Reddit data for applications, monitoring, or analysis without handling infrastructure.

Pricing

Free tier with 1,000 basic results; paid plans start from $69/month for 250,000 basic results.

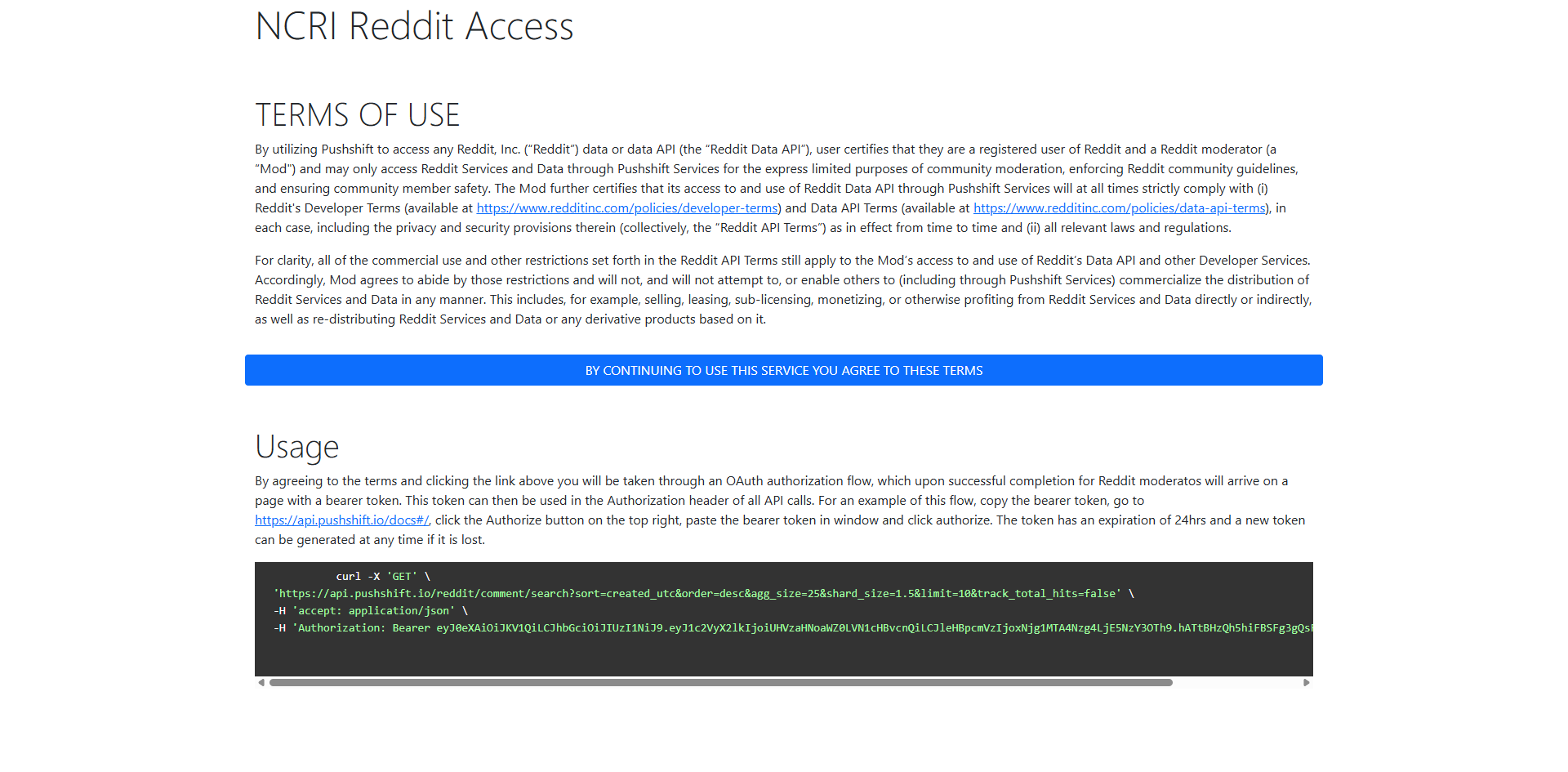

#10: Pushshift.io

Pushshift.io is a community-driven service that archives vast amounts of historical Reddit data and provides API access for advanced searches, making it a popular choice for bulk retrieval of past posts and comments that Reddit's official API may not cover easily. (Note: Due to Reddit's 2023 API changes, real-time data ingestion has stopped, but historical archives remain accessible via queries or dumps.)

Key Features

- Historical Data Archiving: Maintains a large database of Reddit submissions and comments dating back years, allowing users to query and retrieve archived content not readily available through official channels.

- Advanced API Queries: Supports detailed searches by parameters like subreddit, keyword, date range, or author, with endpoints for aggregations (e.g., comment counts or trends) and metadata extraction.

- Bulk Data Dumps: Offers downloadable monthly dumps of Reddit data in formats like JSON or via BigQuery integration, ideal for large-scale analysis without repeated API calls.
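Once a dump is on disk, the same kinds of filters the API offers (subreddit, keyword, date range) can be applied locally. The sketch below assumes the dump has already been decompressed to newline-delimited JSON with one comment per line; the sample lines are invented, and the field names (`author`, `subreddit`, `created_utc`, `body`) follow Reddit's comment objects.

```python
import json
from datetime import datetime, timezone

# Made-up sample lines in newline-delimited JSON, one comment per line.
# Field names follow Reddit's comment objects; the values are invented.
SAMPLE_DUMP = """\
{"author": "user_a", "subreddit": "python", "created_utc": 1609459200, "body": "Scrapy works well here"}
{"author": "user_b", "subreddit": "datascience", "created_utc": 1612137600, "body": "Try pandas"}
{"author": "user_c", "subreddit": "python", "created_utc": 1640995200, "body": "Anyone used scrapy lately?"}
"""


def filter_comments(lines, subreddit, keyword, start, end):
    """Yield comments from one subreddit that mention keyword within [start, end)."""
    start_ts, end_ts = start.timestamp(), end.timestamp()
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        if record["subreddit"].lower() != subreddit.lower():
            continue
        if not (start_ts <= int(record["created_utc"]) < end_ts):
            continue
        if keyword.lower() in record["body"].lower():
            yield record


matches = list(filter_comments(
    SAMPLE_DUMP.splitlines(),
    subreddit="python",
    keyword="scrapy",
    start=datetime(2021, 1, 1, tzinfo=timezone.utc),
    end=datetime(2022, 1, 1, tzinfo=timezone.utc),
))
```

Because the function consumes an iterable of lines and yields results lazily, it can be pointed at an open file handle for a large dump without loading the whole file into memory.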

Pros and Cons

- Pros: Free access to extensive historical archives; powerful for research and data mining; community-supported with open-source tools.

- Cons: No longer ingests new data post-2023 Reddit changes; API rate limits and occasional downtime; requires technical setup for effective use.

Best For

Researchers, data scientists, or analysts needing bulk access to historical Reddit data for studies or trend analysis.

Pricing

Free with API limits (e.g., rate throttling and query restrictions).

Conclusion

In this roundup of the top 10 Reddit data extraction tools for 2026, we've explored a range of options from no-code templates like BrowserAct to enterprise APIs like Bright Data. Whether you're a beginner automating simple pulls or a developer building scalable workflows, these tools make it easier to harness Reddit's vast discussions for market research, sentiment analysis, and more. By automating the collection of posts, comments, and metadata from Reddit's web pages, you can unlock valuable insights into trends, opinions, and community dynamics without manual effort.

Remember, ethical use is key—focus on publicly available data and always comply with Reddit's terms of service to avoid violations. Respect user privacy, adhere to data laws, and use proxies or APIs responsibly to ensure sustainable scraping. With the right tool, Reddit becomes a powerful resource for informed decision-making.

Ready to dive in? Start with the top-ranked BrowserAct Reddit Scraper today for effortless, no-code extraction—try it for free and transform your data workflow!
