How to Scrape Reddit: 5 Proven Methods for 2025

This guide covers five ways to scrape Reddit: 1. BrowserAct Reddit Scraper 2. Reddit's Official API 3. Python Libraries Like PRAW 4. BeautifulSoup or Scrapy 5. Selenium

Ever wondered how brands track viral trends on Reddit or researchers analyze discussions in subreddits like r/technology? Learning how to scrape Reddit can unlock a treasure trove of insights from one of the internet's largest communities. Reddit scraping involves automatically extracting data such as posts, comments, upvotes, timestamps, and metadata using tools or scripts—often called a Reddit scraper—instead of manual copying.

This technique is invaluable for market research, sentiment analysis, content curation, or building AI datasets. For example, you could scrape r/personalfinance to spot financial trends or gather data for machine learning projects. However, always prioritize ethics and legality: Follow Reddit's terms of service and API guidelines (reddit.com/dev/api), respect rate limits, and avoid scraping private data to prevent bans or legal issues.

In this guide, we'll explore how to scrape Reddit with 5 effective methods, from free APIs to no-code Reddit scrapers like BrowserAct. Let's get started!

Method 1: Using BrowserAct Reddit Scraper (No-Code Solution)

If you're searching for an easy Reddit scraper, BrowserAct simplifies how to scrape Reddit for beginners and pros alike. This method leverages ready-made tools for quick setup, highlighting BrowserAct's Reddit Scraper. As a no-code solution, it automatically extracts post titles, body text, publication times, comment counts, individual comment threads, commenter details, and timestamps from any subreddit or search results—without programming.

Steps to Scrape Reddit with BrowserAct Web Scraper

Follow this quick start guide to scrape Reddit effortlessly using the pre-built template:

- Register an Account: Create a free BrowserAct account to start a free trial.

- Select the Template: Go to the Reddit Scraper template and create from it for instant setup.

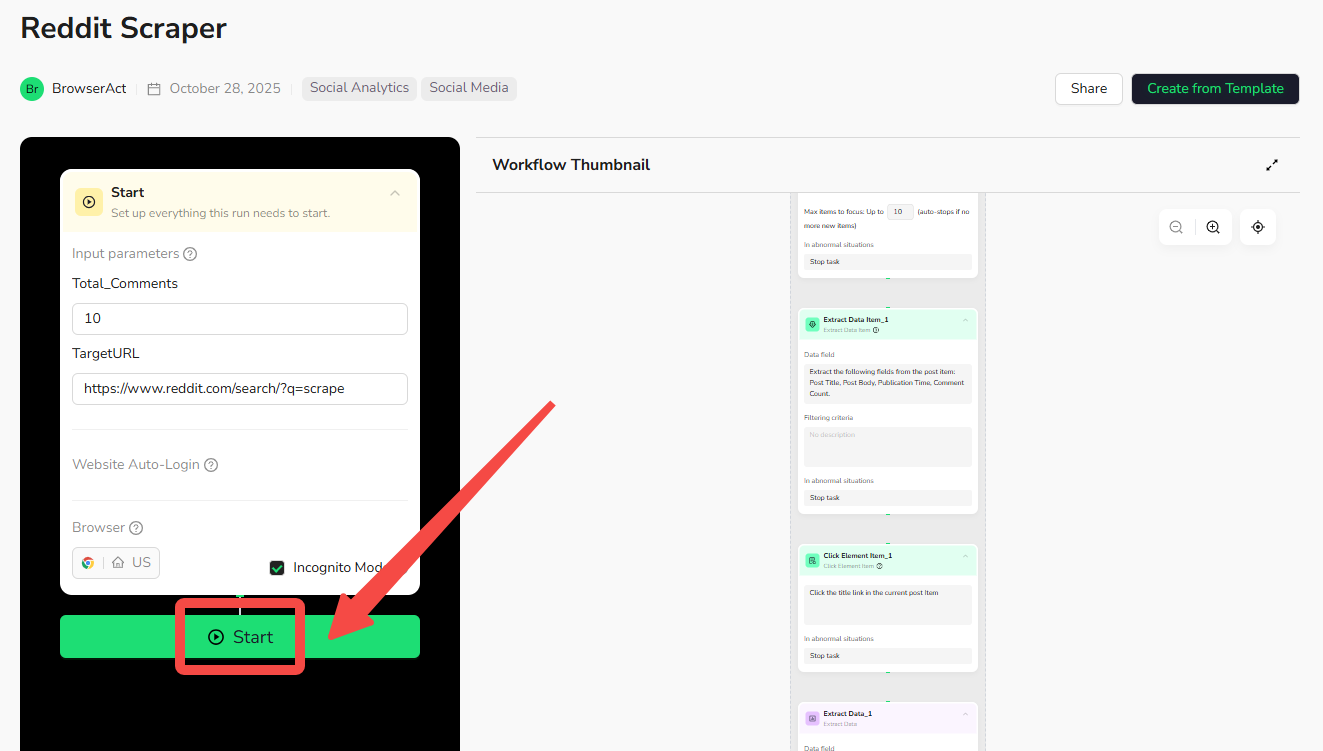

- Configure Parameters: Set TargetURL (e.g., "https://www.reddit.com/search/?q=scraper" or a subreddit like "https://www.reddit.com/r/technology"), Total_Comments (e.g., 10 for the first 10 comments per post), and max loop items (e.g., 10 posts). Use defaults for testing.

- Run the Workflow: Click "Start" to navigate the page, loop through posts, click titles for comments, and extract data.

- Export Data: Download results in CSV, JSON, XML, or Markdown format.

This is the simplest way to scrape Reddit in just one click! But if you're interested, you can also build your own scraping workflow with BrowserAct from scratch for even more customization.

What Data Can You Extract?

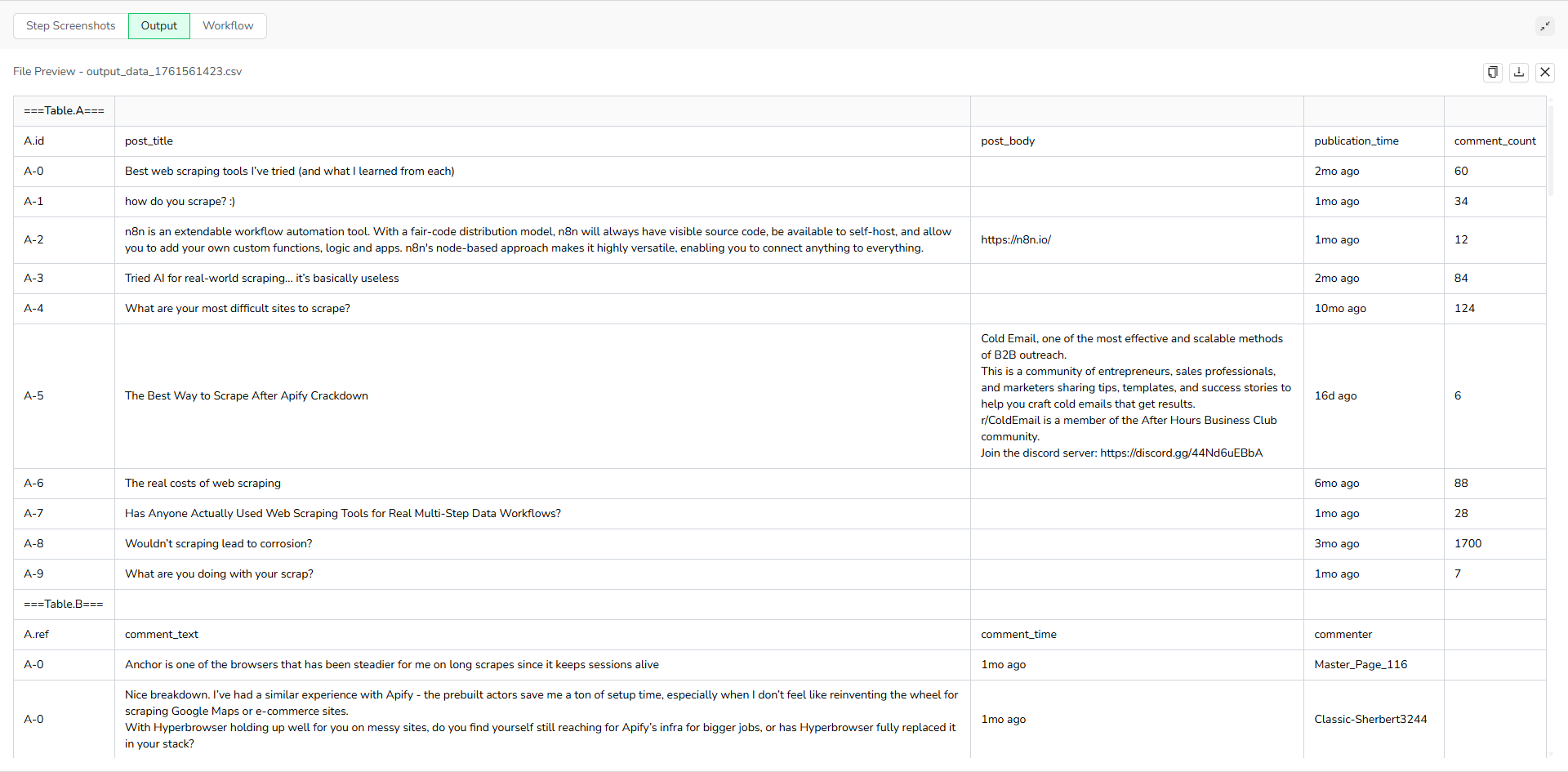

BrowserAct's Reddit Scraper allows you to pull a wide range of publicly available data for analysis. The pre-built template comes with fixed extraction fields for common needs, such as:

- Reddit Posts: Post titles, body text, publication times, and comment counts.

- Reddit Comments: Individual comment threads, commenter details (e.g., usernames), and timestamps.

That said, the tool fully supports customization—you can adjust or add fields yourself by modifying the "Extract Data" nodes in the workflow (e.g., including upvotes, URLs, or other metadata). This flexibility lets you tailor the scraper to your specific project while preserving hierarchical structures for post-comment relationships. Outputs are available in formats like JSON, CSV, XML, or Markdown for easy integration.
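The hierarchical post-comment output described above usually needs flattening before it goes into a spreadsheet. The sketch below shows one way to do that, assuming a JSON export shaped as a list of posts, each holding a `comments` list. The field names (`title`, `published`, `comments`, `author`, `text`) are hypothetical and not taken from BrowserAct's documentation, so adjust them to match your actual export.

```python
import csv
import io
import json

# Hypothetical export shape: the real BrowserAct field names may differ,
# so adjust the keys below to match your downloaded file.
SAMPLE_EXPORT = """
[
  {"title": "Post A", "published": "2025-01-02",
   "comments": [{"author": "u1", "text": "first"},
                {"author": "u2", "text": "second"}]},
  {"title": "Post B", "published": "2025-01-03", "comments": []}
]
"""


def flatten_posts(posts):
    """Return one row per comment, repeating the parent post's fields."""
    rows = []
    for post in posts:
        # A post without comments still produces one row with 'N/A' comment fields.
        comments = post.get("comments") or [{}]
        for comment in comments:
            rows.append({
                "post_title": post.get("title", "N/A"),
                "post_published": post.get("published", "N/A"),
                "comment_author": comment.get("author", "N/A"),
                "comment_text": comment.get("text", "N/A"),
            })
    return rows


def rows_to_csv(rows):
    """Serialize rows to CSV text, using the first row's keys as the header."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = flatten_posts(json.loads(SAMPLE_EXPORT))
print(rows_to_csv(rows))
```

Repeating the post fields on every comment row trades some redundancy for a file that opens cleanly in Google Sheets or pandas.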

Pros

- No coding needed—perfect for beginners and non-technical users.

- Fast and scalable for handling large datasets efficiently.

- Handles anti-scraping measures with built-in rate limits and automation integration (e.g., Make.com, n8n), enabling scheduled scraping, automated links, and direct data transfer to tools like Google Sheets.

- Free to start: a free trial, free credits for daily logins, and lifetime deals (e.g., on AppSumo, where you pay once and keep access).

- Accurate and reliable data extraction, using 'N/A' for missing fields and automatic page loading for consistent results.

- Customizable and flexible, with hierarchical output to maintain post-comment relationships.

- Built-in IP addresses that reduce the chance of being blocked by network security measures.

- Automatically handles CAPTCHA verification, with no need to log in to a Reddit account, reducing the risk of bans.

Cons

- Dependency on the tool, so updates or downtime could impact your workflow.

Ready to try it? Sign up for BrowserAct's free trial and start scraping Reddit today!

Method 2: Using Reddit's Official API

This is a foundational way to scrape Reddit without third-party Reddit scrapers. It accesses data directly through Reddit's JSON endpoints (e.g., reddit.com/r/subreddit.json for simple reads, or oauth.reddit.com once you have an OAuth token), making it an official approach that is free within Reddit's published rate limits. You can extract public information like posts, comments, and metadata using simple HTTP requests.

Steps to Scrape Reddit with Reddit's Official API

Follow this quick start guide on scraping Reddit using the official API:

- Register for API Access: Create a Reddit account, then register an app at reddit.com/prefs/apps to get your client ID and secret. Reddit does not issue the user agent; you choose a descriptive string yourself and send it with every request.

- Handle Authentication: Use OAuth to obtain an access token (e.g., via a POST request to reddit.com/api/v1/access_token).

- Make HTTP Requests: Use a tool like Python's requests library to send GET requests to endpoints (e.g., GET https://oauth.reddit.com/r/technology/hot?limit=10).

- Parse the Data: Extract and process the JSON response, handling pagination if needed (e.g., using the 'after' parameter for more results).

- Export Data: Save the parsed data to formats like CSV or JSON for analysis.

For advanced builds, integrate with libraries like PRAW (Python Reddit API Wrapper) to simplify authentication and querying.
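The steps above can be sketched as follows. This is a minimal illustration, not a complete client. It assumes an application-only token (the `client_credentials` grant) and uses the standard library's `urllib` in place of `requests` so it runs without extra installs. The `USER_AGENT` value and the helper names are placeholders invented for this example.

```python
import base64
import json
import urllib.parse
import urllib.request

USER_AGENT = "example-script/0.1 (by u/your_username)"  # placeholder value


def build_token_request(client_id, client_secret):
    """Build the POST request for an application-only OAuth token."""
    credentials = f"{client_id}:{client_secret}".encode()
    headers = {
        "Authorization": "Basic " + base64.b64encode(credentials).decode(),
        "User-Agent": USER_AGENT,
    }
    body = urllib.parse.urlencode({"grant_type": "client_credentials"}).encode()
    return urllib.request.Request(
        "https://www.reddit.com/api/v1/access_token",
        data=body, headers=headers, method="POST",
    )


def listing_url(subreddit, sort="hot", limit=10, after=None):
    """Build a listing URL, adding the 'after' cursor when one is given."""
    params = {"limit": limit}
    if after:
        params["after"] = after
    return f"https://oauth.reddit.com/r/{subreddit}/{sort}?" + urllib.parse.urlencode(params)


def fetch_json(url, token):
    """Send an authenticated GET request and decode the JSON body."""
    headers = {"Authorization": f"Bearer {token}", "User-Agent": USER_AGENT}
    request = urllib.request.Request(url, headers=headers)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


def parse_listing(payload):
    """Return (posts, after_cursor) from a Listing response."""
    data = payload["data"]
    posts = [
        {
            "title": child["data"]["title"],
            "score": child["data"]["score"],
            "num_comments": child["data"]["num_comments"],
        }
        for child in data["children"]
    ]
    return posts, data.get("after")


def collect_posts(subreddit, fetch, max_pages=3):
    """Follow the 'after' cursor for at most max_pages pages.

    fetch is any callable that maps a URL to decoded JSON.
    """
    posts, after = [], None
    for _ in range(max_pages):
        page, after = parse_listing(fetch(listing_url(subreddit, after=after)))
        posts.extend(page)
        if not after:  # no cursor means there are no further pages
            break
    return posts
```

With real credentials, the token would come from `json.load(urllib.request.urlopen(build_token_request(client_id, client_secret)))["access_token"]`, and `collect_posts("technology", lambda url: fetch_json(url, token))` would gather the pages. Passing `fetch` in as a parameter keeps the pagination logic testable without network access.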

Pros

- Official and reliable, directly from Reddit's servers for accurate data.

- Free to use with no costs beyond your development time.

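- Low risk of bans if you respect the terms of service and the rate limits, which Reddit reports in the X-Ratelimit-Remaining and X-Ratelimit-Reset response headers (the free tier has been documented at 100 queries per minute per OAuth client, so check the current figure).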

- Scalable for large datasets with proper handling of pagination and authentication.

- Data is accurate and structured in JSON, making it easy to integrate with other tools or scripts.

- Supports public data extraction without anti-scraping issues, as it's an approved method.

Cons

- Limited to public data only—no access to private subreddits or deleted content.

- Requires coding knowledge (e.g., basic Python or HTTP requests) to implement effectively.

- Rate limits can slow down large-scale scraping if not managed properly.
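One way to manage the rate limits mentioned above is to read the `X-Ratelimit-Remaining` and `X-Ratelimit-Reset` headers that Reddit returns and pause when the quota runs out. The sketch below assumes `headers` is a mapping of response headers. The helper names and the `min_remaining` threshold are illustrative choices, not part of Reddit's API.

```python
import time


def seconds_to_wait(headers, min_remaining=1):
    """Return how long to pause before sending the next request.

    headers is a mapping of response headers. A plain dict is case-sensitive,
    so the keys must be spelled exactly as below; header objects from HTTP
    libraries are usually case-insensitive.
    """
    try:
        remaining = float(headers.get("X-Ratelimit-Remaining", ""))
        reset = float(headers.get("X-Ratelimit-Reset", ""))
    except ValueError:
        # Headers missing or malformed: nothing to base a pause on.
        return 0.0
    if remaining >= min_remaining:
        return 0.0
    # Quota exhausted: wait until the window resets.
    return max(reset, 0.0)


def throttle(headers, sleep=time.sleep):
    """Sleep if the quota is exhausted and return the delay that was applied."""
    delay = seconds_to_wait(headers)
    if delay > 0:
        sleep(delay)
    return delay
```

Calling `throttle(response_headers)` after each request keeps a long-running job inside the quota without hard-coding a fixed requests-per-minute figure.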

Method 3: Python Libraries Like PRAW (Programmatic Reddit Scraper)

PRAW is one of the best free Reddit scrapers for developers learning how to scrape Reddit. This method uses PRAW (Python Reddit API Wrapper) for easy scripting, allowing you to access Reddit's API programmatically to extract data like posts, comments, and metadata without dealing with raw HTTP requests.

Steps to Scrape Reddit Data with PRAW

Follow this quick start guide to scrape Reddit using PRAW:

- Install PRAW: Run pip install praw in your terminal to add the library to your Python environment.

- Authenticate: Create a Reddit app at reddit.com/prefs/apps to get your client ID and secret, choose a descriptive user agent string, then initialize PRAW with reddit = praw.Reddit(client_id='YOUR_ID', client_secret='YOUR_SECRET', user_agent='YOUR_AGENT').

- Fetch Data: Use methods like subreddit = reddit.subreddit('technology') and for submission in subreddit.top(limit=100): to retrieve posts, or expand to comments with submission.comments.

- Handle Pagination: PRAW automatically manages limits and 'after' parameters for fetching more results, though Reddit listings generally stop at about 1,000 items.

- Export Data: Parse the data into a list or dictionary and save it (e.g., to CSV using pandas or built-in CSV module).

For advanced builds, combine with libraries like pandas for data processing or schedule runs with cron jobs.

This is an example Python script to scrape the top 10 posts from r/technology:

```python
import praw

# Authenticate with the credentials from reddit.com/prefs/apps
reddit = praw.Reddit(
    client_id='YOUR_CLIENT_ID',
    client_secret='YOUR_CLIENT_SECRET',
    user_agent='YOUR_USER_AGENT',
)

# Fetch the top 10 posts (PRAW's default time filter is all time)
subreddit = reddit.subreddit('technology')
for submission in subreddit.top(limit=10):
    print(f"Title: {submission.title}")
    print(f"Score: {submission.score}")
    print(f"Comments: {submission.num_comments}")
    # Add more fields or export to file as needed
```

This script automates extracting key post details, which you can expand for comments or other subreddits.
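As one possible expansion, the helpers below turn submissions into CSV rows and pull the text of top-level comments. They are a sketch: the function names and the chosen columns are assumptions for illustration, and the code accepts any object that exposes the same attributes as a PRAW submission, so it does not import `praw` itself.

```python
import csv
from datetime import datetime, timezone

FIELDS = ["title", "score", "num_comments", "created_utc", "url"]


def submission_to_row(submission):
    """Copy a few attributes from a PRAW submission into a plain dict."""
    created = datetime.fromtimestamp(submission.created_utc, tz=timezone.utc)
    return {
        "title": submission.title,
        "score": submission.score,
        "num_comments": submission.num_comments,
        "created_utc": created.isoformat(),
        "url": "https://www.reddit.com" + submission.permalink,
    }


def top_level_comment_bodies(submission, limit=10):
    """Return the text of up to `limit` top-level comments."""
    # replace_more(limit=0) removes the "load more comments" placeholders
    # so that only real comments remain in the forest.
    submission.comments.replace_more(limit=0)
    return [comment.body for comment in list(submission.comments)[:limit]]


def write_rows(rows, file_obj):
    """Write rows to an open text file object as CSV with a header line."""
    writer = csv.DictWriter(file_obj, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
```

With the `subreddit` object from the script above, a call such as `write_rows([submission_to_row(s) for s in subreddit.top(limit=10)], open_file)` would produce the CSV, where `open_file` comes from `open("top_posts.csv", "w", newline="", encoding="utf-8")`.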

Pros

- Free to use with no costs, as it's an open-source library relying on Reddit's free API.

- Highly customizable, allowing you to script complex queries, filters, and data manipulations.

- Handles pagination automatically, making it easy to scrape large volumes without manual effort.

- Accurate and reliable, pulling data directly from Reddit's API with structured objects for easy access.

- Scalable for developers, integrating well with other Python tools like pandas or SQL for analysis.

- Reduces boilerplate code compared to raw API calls, speeding up development.

Cons

- Requires Python knowledge and setup, which can be a barrier for non-programmers.

- Subject to Reddit's API rate limits (the free tier has been documented at 100 queries per minute per OAuth client, so check the current figure), potentially slowing large-scale scraping.

- Limited to public data and API-available features, with no access to private or real-time elements without workarounds.

Method 4: Web Scraping with BeautifulSoup or Scrapy

For those wondering how to scrape Reddit without APIs, this Reddit scraper method is ideal for static content. This approach involves parsing Reddit's HTML structure directly using Python libraries like BeautifulSoup for simple static pages or Scrapy for more advanced crawling, allowing you to extract non-API data by targeting CSS selectors for elements like posts and comments.

Steps to Scrape Reddit with BeautifulSoup or Scrapy

Follow this quick start guide on web scraping using BeautifulSoup or Scrapy:

- Install the Libraries: Run pip install beautifulsoup4 requests for BeautifulSoup, or pip install scrapy for Scrapy, in your Python environment.

- Fetch the Page: Use requests to get the HTML, sending a descriptive User-Agent header (e.g., response = requests.get('https://old.reddit.com/r/technology', headers={'User-Agent': 'my-scraper/0.1'})), or set up a Scrapy spider to crawl the URL. The selectors in the next steps come from the old.reddit.com layout; www.reddit.com uses different markup.

- Parse the HTML: With BeautifulSoup, create a soup object (soup = BeautifulSoup(response.text, 'html.parser')) and target selectors (e.g., posts = soup.find_all('div', class_='thing')); with Scrapy, define items and use XPath/CSS selectors in your spider.

- Extract Data: Loop through elements to pull posts/comments (e.g., title = post.find('a', class_='title').text), handling pagination by following 'next' links and pausing between requests.

- Export Data: Store extracted data in a list or dictionary and save it (e.g., to CSV using csv module or Scrapy's built-in exporters).

For advanced builds, integrate Selenium for dynamic JavaScript rendering or use Scrapy's middleware for proxies to avoid anti-bot detection.
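The fetch, parse, and pagination steps above can be tied together with a small crawl loop that pauses between pages. The sketch below uses only the standard library and takes the parsing step as a parameter, so a BeautifulSoup or Scrapy-style extractor can be plugged in. The function names, the `HEADERS` value, and the two-second default delay are illustrative assumptions.

```python
import time
import urllib.request

HEADERS = {"User-Agent": "example-scraper/0.1 (contact: you@example.com)"}  # placeholder


def fetch_html(url):
    """Download a page as text, identifying the scraper via User-Agent."""
    request = urllib.request.Request(url, headers=HEADERS)
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.read().decode("utf-8", errors="replace")


def crawl(start_url, parse, fetch=fetch_html, max_pages=3, delay=2.0, sleep=time.sleep):
    """Collect items page by page, following 'next' links.

    parse(html) must return (items, next_url), with next_url set to None
    on the last page.
    """
    items, url, seen, pages = [], start_url, set(), 0
    while url and url not in seen and pages < max_pages:
        seen.add(url)  # guard against a 'next' link that loops back
        page_items, next_url = parse(fetch(url))
        items.extend(page_items)
        pages += 1
        url = next_url
        if url and pages < max_pages:
            sleep(delay)  # pause before requesting the next page
    return items
```

A BeautifulSoup-based `parse` for old.reddit.com might return the titles found in `div.thing` elements together with the `href` of the link inside `span.next-button`. Those selectors are assumptions about the current markup, so verify them in the page source before relying on them.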

Pros

- Highly flexible for custom needs, allowing precise targeting of any HTML elements.

- Works around API limits by directly scraping the site, accessing data not available via official endpoints.

- Free to use with open-source libraries, no costs beyond your setup.

- Customizable, with easy adjustments to selectors, filters, and output formats.

- Scalable for complex crawls (especially with Scrapy), handling multiple pages and subreddits efficiently.

- Accurate for static content, pulling the data exactly as it appears in the page source at request time.

Cons

- Brittle to site changes, as Reddit's HTML/CSS updates can break selectors.

- May trigger anti-bot measures like CAPTCHAs or IP bans if not using delays or proxies.

- Requires coding knowledge (Python and HTML understanding) to implement and maintain.

Method 5: Browser Automation with Selenium

Selenium acts as a dynamic Reddit scraper for advanced Reddit web scraping scenarios. This method simulates real browser interactions to handle JavaScript-heavy pages on Reddit, allowing you to automate navigation, scrolling, and extraction of dynamic content like infinite-scroll posts and comments that aren't easily accessible via static HTML parsing.

Steps to Scrape Reddit with Selenium

Follow this quick start guide to scrape data using Selenium:

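- Install Selenium: Run pip install selenium. Selenium 4.6 and later can download a matching WebDriver (e.g., ChromeDriver) automatically through Selenium Manager; with older versions, download a driver that matches your browser version.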

- Set Up the Browser: Initialize the driver (e.g., from selenium import webdriver; driver = webdriver.Chrome()).

- Navigate to Pages: Use driver.get('https://www.reddit.com/r/technology') to load the subreddit, then simulate actions like scrolling (driver.execute_script('window.scrollTo(0, document.body.scrollHeight);')) for infinite loading.

- Extract Elements: Import the locator helper (from selenium.webdriver.common.by import By), then use XPath or CSS selectors to find data (e.g., titles = driver.find_elements(By.XPATH, '//h3')), loop through posts, click to expand comments, and pull details.

- Export Data: Collect data in lists or dictionaries and save it (e.g., to CSV using pandas), then close the driver (driver.quit()).

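For advanced builds, add waits (e.g., WebDriverWait) so elements finish loading before extraction, or use headless mode to run without a visible browser window and save resources. Headless mode alone does not lower the risk of bans, so keep delays between actions.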
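The scrolling and extraction steps above can be sketched as two helpers that work on an already opened driver. This is an illustration under assumptions: the function names, the round limit, and the pause length are invented for the example, and the `//h3` XPath is the one used in the steps above, which may not match Reddit's current markup.

```python
import time


def scroll_until_stable(driver, max_rounds=10, pause=2.0, sleep=time.sleep):
    """Scroll to the bottom until the page height stops growing.

    Returns the number of scrolls performed.
    """
    last_height = driver.execute_script("return document.body.scrollHeight")
    rounds = 0
    while rounds < max_rounds:
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
        rounds += 1
        sleep(pause)  # give newly requested posts time to load
        new_height = driver.execute_script("return document.body.scrollHeight")
        if new_height == last_height:
            break  # nothing new was loaded, so stop scrolling
        last_height = new_height
    return rounds


def collect_titles(driver, by="xpath", selector="//h3"):
    """Return the non-empty text of every element matching the selector.

    The string "xpath" is the value of Selenium's By.XPATH constant.
    """
    elements = driver.find_elements(by, selector)
    return [element.text for element in elements if element.text.strip()]
```

In a real session, the driver would come from `webdriver.Chrome()` followed by `driver.get('https://www.reddit.com/r/technology')`. After `scroll_until_stable(driver)` and `collect_titles(driver)`, call `driver.quit()` to release the browser. Capping the rounds keeps an endless feed from scrolling forever.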

Pros

- Handles infinite scrolling and dynamic content, loading JavaScript-rendered elements seamlessly.

- Supports logins and interactive features, like expanding threads or navigating private subreddits (with credentials).

- Free to use with open-source libraries, no costs beyond setup.

- Highly customizable, allowing scripted actions, waits, and multi-page navigation for complex scenarios.

- Accurate for real-time data, as it mimics user behavior to access fully rendered pages.

- Scalable when combined with proxies or cloud services to manage multiple sessions.

Cons

- Slower performance due to browser simulation, especially for large-scale scraping.

- Resource-intensive, requiring more CPU/memory and potentially a visible browser window.

- Higher risk of bans from anti-bot detection, as it can appear suspicious without proper delays or IP rotation.

- Requires coding knowledge (Python and XPath/CSS) and WebDriver management.

Comparison of 5 Effective Reddit Scraping Methods

| Method | Ease of Use | Coding Required? | Cost | Key Pros | Key Cons | Best For |
|---|---|---|---|---|---|---|
| 1. BrowserAct Reddit Scraper | High (no-code) | No | Free trial | No coding, fast, handles anti-scraping, customizable | Potential costs, tool dependency | Beginners or quick, automated setups without coding |
| 2. Reddit's Official API | Moderate (requires setup) | Yes (basic HTTP/JSON) | Free | Official, reliable, no ban risk if rate-limited | Limited to public data, requires coding | Developers needing structured, compliant data extraction |
| 3. Python Libraries Like PRAW | Moderate to High (for Python users) | Yes (Python scripting) | Free | Customizable, handles pagination, free | Python knowledge needed, API limits apply | Programmers building automated, scalable scripts |
| 4. Web Scraping with BeautifulSoup or Scrapy | Moderate (HTML knowledge helpful) | Yes (Python coding) | Free | Flexible for custom needs, bypasses API limits | Brittle to site changes, anti-bot risks | Custom parsing of static HTML without API restrictions |
| 5. Browser Automation with Selenium | Low to Moderate (setup intensive) | Yes (Python scripting) | Free | Handles dynamic content, infinite scrolling | Slower, resource-heavy, higher ban risk | Scraping JavaScript-loaded or interactive pages |

This comparison table breaks down the 5 methods for how to scrape Reddit, helping you choose the best Reddit scraper based on your skills, budget, and needs. If you're a beginner avoiding code, start with no-code tools like BrowserAct Reddit Scraper for simplicity and speed. For developers, options like PRAW or Selenium offer more control but require technical know-how—always prioritize ethical practices and Reddit's terms to avoid issues.

Conclusion

We've covered five powerful methods for how to scrape Reddit, from no-code tools to advanced scripting, each unlocking the platform's data for analysis, research, or marketing insights. Pick the one that best fits your skills and goals to get started efficiently.

- For Beginners: Go with BrowserAct's no-code Reddit scraper—it's fast, customizable, and handles everything from setup to exports without programming.

- For Official Access: Developers preferring free, reliable JSON data with minimal risk should use Reddit's API.

- For Python Fans: Leverage PRAW for easy automation and pagination handling.

- For Custom Parsing: Opt for BeautifulSoup or Scrapy to bypass API limits with flexible HTML extraction.

- For Dynamic Content: Use Selenium's browser simulation for infinite scrolling and interactive pages, though it requires more setup.

For most users, starting with BrowserAct or Reddit's Official API provides a strong balance of ease, compliance, and effectiveness.

Whether you're a data analyst or marketer, mastering how to scrape Reddit with the right Reddit scraper can supercharge your projects.

Try BrowserAct's Reddit scraper today for scraping Reddit in one click!
