How to Choose a Reliable Web Scraper for Massive Requests

Scraping millions of requests? Learn how to choose the right web scraping provider with clear KPIs, pilots, proxy rotation, rendering, and SLAs.

Selecting the right web scraping provider for enterprise-scale operations isn't about finding a universal "best" solution—it's about identifying the platform that reliably delivers your specific data requirements at scale, within budget, while maintaining compliance and meeting SLA commitments.

When you're processing millions of requests, you need infrastructure that seamlessly integrates deep proxy rotation, JavaScript rendering, sophisticated anti-bot evasion, and comprehensive observability. Success starts with precise scoping, measurable benchmarks, and rigorous vendor validation through time-boxed pilots.

Industry analyses consistently emphasize that proxy pool depth, rendering engine quality, and pricing transparency are the core capabilities that directly impact performance at scale.

1. Define Your Data Needs and Volume

Begin by writing down exactly what you want to collect and how often. Clarity here drives technical fit, cost predictability, and a clean pilot.

Essential specifications:

- Specify page types: category listings, product details, reviews, pricing, job posts.

- Note JavaScript complexity: dynamic (requires rendering) vs. static (HTML-first).

- Estimate concurrency and volume: URLs per day, peak requests per second.

- Include geographic-targeting needs: country/city targeting for localized content.

- Define freshness and latency: how “fresh” the data must be and acceptable response times.

- Add any timing windows, filters (e.g., only in-stock), and data enrichment rules.

Web scraping is the automated extraction of structured data from websites, often requiring headless browser rendering and countermeasures to navigate anti-bot systems.

Sample requirements framework:

| Dimension | Specification |
| --- | --- |
| Target sites | E-commerce product pages, competitor pricing |
| Volume | 2M URLs/day, 50 req/sec peak |
| JavaScript | Heavy (React/Vue SPAs) |
| Geographic scope | US, UK, DE city-level |
| Freshness | 6-hour max lag |
| Success target | >95% |
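To sanity-check a requirements sheet like the sample above, it can help to turn the volume figures into an average request rate and compare it with the stated peak. The sketch below is only an illustration: the `ScrapeRequirements` class and its field names are hypothetical, and the values are copied from the sample framework.

```python
from dataclasses import dataclass

SECONDS_PER_DAY = 24 * 60 * 60


@dataclass(frozen=True)
class ScrapeRequirements:
    """Hypothetical container for the scoping dimensions in the sample framework."""
    urls_per_day: int
    peak_rps: float
    needs_js_rendering: bool
    geos: tuple
    max_lag_hours: float
    min_success_rate: float  # fraction between 0 and 1

    def average_rps(self) -> float:
        """Average requests per second if the daily volume were spread evenly."""
        return self.urls_per_day / SECONDS_PER_DAY

    def peak_to_average(self) -> float:
        """Burstiness of the workload; higher values call for more headroom."""
        return self.peak_rps / self.average_rps()


# Values taken from the sample requirements framework.
sample = ScrapeRequirements(
    urls_per_day=2_000_000,
    peak_rps=50,
    needs_js_rendering=True,
    geos=("US", "UK", "DE"),
    max_lag_hours=6,
    min_success_rate=0.95,
)

print(f"average: {sample.average_rps():.1f} req/s")           # average: 23.1 req/s
print(f"peak/average: {sample.peak_to_average():.2f}x")       # peak/average: 2.16x
```

With these numbers, the 50 req/sec peak is roughly twice the daily average, which is a useful figure to hand a vendor when discussing concurrency limits.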

If you’re new to scoping at scale, vendor guides such as Bright Data’s services overview provide helpful framing on common use cases, geo-targeting, and rendering considerations.

2. Set Clear Success Metrics and Budget

Predefine how you’ll measure success, then hold every vendor to the same yardstick. This makes pilots comparable and negotiations straightforward.

- Throughput: requests per second and total requests per day.

- Data quality: field-level accuracy and completeness.

- Reliability: success/failure rate, automatic retries, and block handling.

- Latency: per-request targets and percentile SLAs (p95/p99).

- Cost ceiling: monthly/annual budget and overage policies.

Example pilot targets:

| Metric | Target Value |
| --- | --- |
| Requests/day | 10,000 |
| Success rate | ≥98% |
| Allowable errors | ≤2% |
| Max latency | <5s per request |
| Monthly budget | $2,000 |
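One way to hold every vendor to the same yardstick is to score each pilot's raw results with the same small function. The sketch below is a minimal illustration, not a standard tool: `PilotTargets`, `percentile`, and `evaluate_pilot` are hypothetical names, and it assumes the latency target is checked at the 95th percentile rather than on every single request.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class PilotTargets:
    min_success_rate: float = 0.98  # from the example pilot targets
    max_latency_s: float = 5.0      # from the example pilot targets


def percentile(values, pct):
    """Nearest-rank percentile; pct is in (0, 100]."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def evaluate_pilot(results, targets):
    """results is a list of (succeeded, latency_seconds) tuples, one per request."""
    total = len(results)
    successes = sum(1 for ok, _ in results if ok)
    success_rate = successes / total
    p95 = percentile([latency for _, latency in results], 95)
    return {
        "success_rate": success_rate,
        "p95_latency_s": p95,
        # Assumption: the latency target applies to p95, not to every request.
        "passed": success_rate >= targets.min_success_rate
        and p95 < targets.max_latency_s,
    }


# Made-up pilot data: 99 fast successes and one slow failure.
results = [(True, 1.2)] * 99 + [(False, 8.0)]
report = evaluate_pilot(results, PilotTargets())
print(report)
```

Running the same evaluation over each vendor's request log keeps the comparison objective, since the pass/fail rule is fixed before any pilot starts.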

Budget for more than API calls. At scale, costs accrue from residential or mobile proxies, headless browser rendering, bandwidth/egress, CAPTCHA solving, storage, and monitoring. Clear metrics and a capped budget streamline proof-of-concept testing, make vendor comparisons objective, and formalize accountability in the SLA.
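A rough cost model makes the "more than API calls" point concrete. The sketch below is illustrative only: every unit price is a placeholder rather than a real vendor quote, the function and field names are hypothetical, and storage and monitoring are left out for brevity.

```python
from dataclasses import dataclass

MB_PER_GB = 1000  # assumes bandwidth is billed in decimal gigabytes


@dataclass(frozen=True)
class UnitPrices:
    """Placeholder prices for illustration only; substitute real vendor quotes."""
    per_1k_requests: float
    per_gb_proxy: float
    per_1k_renders: float
    per_1k_captchas: float


def estimate_monthly_cost(requests, avg_page_mb, render_share, captcha_share, prices):
    """Break a month's spend into the cost drivers named in the text."""
    costs = {
        "requests": requests / 1000 * prices.per_1k_requests,
        "proxy_bandwidth": requests * avg_page_mb / MB_PER_GB * prices.per_gb_proxy,
        "rendering": requests * render_share / 1000 * prices.per_1k_renders,
        "captcha": requests * captcha_share / 1000 * prices.per_1k_captchas,
    }
    costs["total"] = sum(costs.values())
    return costs


# Hypothetical inputs: 10,000 requests/day for 30 days, 0.5 MB per page,
# 40% of requests rendered, 5% hitting a CAPTCHA.
prices = UnitPrices(per_1k_requests=1.0, per_gb_proxy=4.0,
                    per_1k_renders=2.0, per_1k_captchas=1.5)
estimate = estimate_monthly_cost(300_000, 0.5, 0.4, 0.05, prices)

monthly_budget = 2000  # from the example pilot targets
print(f"total: ${estimate['total']:.2f}")
print("within budget:", estimate["total"] <= monthly_budget)
```

Under these made-up prices, proxy bandwidth is the largest line item, not the per-request fee, which is the kind of pattern worth checking against a vendor's actual rate card.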

3. Evaluate Technical Capabilities and Scalability

At high volume, reliability hinges on a provider’s architecture and how well it neutralizes block vectors. Shortlist vendors by these pillars:

- Network and IP diversity: depth in residential/mobile pools, country/city coverage, ASN diversity, and session control.

- JavaScript rendering: modern headless engines, HTTP/2/3 support, resource controls, and smart waits for dynamic content.

- Anti-bot resilience: evasion of WAF/CDN challenges, CAPTCHA solving, fingerprinting strategies, and adaptive throttling.

- Orchestration at scale: concurrency controls, autoscaling, queueing, backoff policies, and idempotent retries.

- Observability: per-request logs, block codes, HAR/screenshot capture, metrics (success, block, and latency by target/region).

- Data quality controls: schema validation, deduplication, change detection, and alerting.

- Compliance and security: consented IP sourcing, KYC for residential networks, DPA/SCC support, data retention controls, and auditability.

- Support and SLA: clear uptime/latency SLAs, incident response, named support channels, and migration assistance.

Industry research consistently shows that proxy/IP breadth, JavaScript rendering capability, and anti-bot resilience are the decisive factors for massive workloads. Modern API platforms that combine rendering, rotation, and anti-bot mitigation in a single endpoint dramatically reduce engineering overhead compared to managing multiple services.
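A simple weighted scorecard is one way to turn the pillars above into a comparable shortlist. The sketch below is an assumption-laden illustration: the weights are arbitrary examples that should be tuned to your workload, the pillar keys are hypothetical identifiers, and scores are assumed to be on a 0 to 5 scale.

```python
# Example weights only; they must sum to 1 and should reflect your priorities.
PILLAR_WEIGHTS = {
    "network_ip_diversity": 0.20,
    "js_rendering": 0.15,
    "anti_bot_resilience": 0.20,
    "orchestration": 0.10,
    "observability": 0.10,
    "data_quality": 0.10,
    "compliance_security": 0.10,
    "support_sla": 0.05,
}


def weighted_score(scores):
    """Combine per-pillar scores (0 to 5) into a single weighted score."""
    missing = set(PILLAR_WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"missing pillar scores: {sorted(missing)}")
    for pillar, value in scores.items():
        if not 0 <= value <= 5:
            raise ValueError(f"{pillar} score must be between 0 and 5")
    return sum(PILLAR_WEIGHTS[p] * scores[p] for p in PILLAR_WEIGHTS)


# Made-up scores for a hypothetical vendor.
vendor_a = {
    "network_ip_diversity": 4, "js_rendering": 5, "anti_bot_resilience": 4,
    "orchestration": 3, "observability": 4, "data_quality": 3,
    "compliance_security": 5, "support_sla": 4,
}
print(f"vendor A: {weighted_score(vendor_a):.2f} / 5")
```

Fixing the weights before the pilots begin keeps the shortlist from being rationalized after the fact.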

4. Proxy and IP Rotation Strategies

For high-volume scraping, a robust proxy strategy is non-negotiable. Rotation reduces detection, evens out rate limits, and keeps success rates high.

Proxy Type Selection

- Datacenter proxies: fastest and cheapest; best for tolerant targets and non-sensitive assets.

- Residential proxies: more resilient to blocks; needed for retail, travel, classifieds, and geo-sensitive sites.

- Mobile proxies: highest trust, most expensive; reserved for the hardest targets or niche geos.

Rotation and Session Management

- Hybrid session strategy: deploy sticky IPs for stateful flows (cart/checkout, paginated navigation); rotate aggressively on listing pages and search results.

- Domain-specific tuning: customize rotation frequency per domain and URL path; implement jitter combined with adaptive backoff when encountering 429 or 5xx responses.

- Performance-based optimization: track success/block rates segmented by ASN, country, and ISP; automatically redistribute traffic to higher-performing IP pools.
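The session and backoff rules above can be sketched in a few lines. This is a minimal illustration under stated assumptions: it uses exponential backoff with full jitter as one common form of adaptive backoff, the path prefixes that trigger sticky sessions are hypothetical, and a managed provider may already handle both concerns for you.

```python
import random
from typing import Optional

# Hypothetical path prefixes for stateful flows that need a stable IP.
STICKY_PREFIXES = ("/cart", "/checkout")


def is_retryable(status: int) -> bool:
    """Retry on 429 (rate limited) and any 5xx response."""
    return status == 429 or 500 <= status <= 599


def backoff_delay(attempt: int, base_s: float = 1.0, cap_s: float = 60.0,
                  rng: Optional[random.Random] = None) -> float:
    """Full jitter: a uniform delay in [0, min(cap_s, base_s * 2**attempt)]."""
    rng = rng or random.Random()
    ceiling = min(cap_s, base_s * (2 ** attempt))
    return rng.uniform(0, ceiling)


def session_mode(path: str) -> str:
    """Keep one IP for stateful flows; rotate per request everywhere else."""
    return "sticky" if path.startswith(STICKY_PREFIXES) else "rotate"


rng = random.Random(42)  # seeded only so the demo is repeatable
for attempt in range(4):
    print(f"attempt {attempt}: wait up to {min(60.0, 2 ** attempt):.0f}s, "
          f"chose {backoff_delay(attempt, rng=rng):.2f}s")
print(session_mode("/checkout/payment"), session_mode("/search"))
```

Randomizing the whole delay rather than adding a small offset spreads retries from many workers across the window, which helps avoid synchronized bursts against a target that has just returned 429.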

Geographic Targeting and Pool Quality

- Ensure city/country coverage matches your data needs and content localization.

- Ask about pool size, daily unique IP throughput, and ASN distribution to avoid “thin” pools that burn quickly.
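If your provider exposes per-pool statistics, the performance-based redistribution mentioned earlier can be approximated by weighting pools by observed success rate. The sketch below is an illustration only: the pool names and counts are made up, the smoothing and floor values are assumptions, and real systems typically segment further by ASN, country, and ISP.

```python
import random


def pool_weights(stats, floor=0.05):
    """Map pool name -> traffic share, proportional to smoothed success rate.

    stats maps pool name -> (successes, attempts).
    """
    raw = {}
    for pool, (successes, attempts) in stats.items():
        # Add-one smoothing keeps pools with few samples from looking extreme.
        rate = (successes + 1) / (attempts + 2)
        # The floor keeps a trickle of traffic on weak pools so recovery is noticed.
        raw[pool] = max(rate, floor)
    total = sum(raw.values())
    return {pool: value / total for pool, value in raw.items()}


def pick_pool(weights, rng):
    """Choose a pool at random according to its traffic share."""
    pools = list(weights)
    return rng.choices(pools, weights=[weights[p] for p in pools], k=1)[0]


# Made-up statistics for two hypothetical pools.
stats = {"residential_us": (950, 1000), "datacenter_us": (600, 1000)}
weights = pool_weights(stats)
print({pool: round(share, 3) for pool, share in weights.items()})
print(pick_pool(weights, random.Random(7)))
```

Recomputing the weights on a schedule, for example every few minutes, lets traffic drift toward healthier pools without hard cutoffs.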

Bot Detection and CAPTCHA Management

- Prefer providers with built-in CAPTCHA solving and challenge handling to minimize custom logic.

- Monitor fingerprint-based blocks; advanced rotation should include header/device/fingerprint variance alongside IP changes.

Critical Vendor Due Diligence Questions

- What is the effective unique IP throughput per day in target countries?

- Do you support sticky sessions with TTL control and per-request rotation policies?

- How do you source residential/mobile IPs and enforce compliance/KYC?

- What observability is provided (block reason taxonomy, HARs, screenshots)?

- What are typical success and latency profiles on JS-heavy sites?

Automatic proxy rotation, integrated CAPTCHA solving, and country-level targeting are baseline features of leading web scraping platforms and prerequisites for resilient, scalable operations. When evaluating providers, focus on the proxy mix that fits your specific workload and budget, particularly when moving from datacenter to residential or mobile infrastructure.

Final Recommendations

- Pilot before committing: Run time-boxed evaluations (2-4 weeks) with representative workloads on your actual target sites.

- Start narrow, then scale: Begin with a focused use case to validate core capabilities before expanding scope.

- Monitor continuously: Implement real-time alerting on success rates, latency, and cost overruns from day one.

- Plan for compliance: Ensure your provider's IP sourcing and data handling practices align with your legal and ethical requirements.
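For the continuous-monitoring recommendation, a sliding-window success-rate check is a simple starting point. The sketch below is a minimal illustration with hypothetical names and thresholds; a production setup would more likely feed these outcomes into an existing metrics and alerting stack.

```python
from collections import deque


class SuccessRateMonitor:
    """Tracks the success rate over the most recent requests."""

    def __init__(self, window=1000, threshold=0.98, min_samples=100):
        self._outcomes = deque(maxlen=window)  # old outcomes fall off automatically
        self.threshold = threshold
        self.min_samples = min_samples

    def success_rate(self):
        return sum(self._outcomes) / len(self._outcomes)

    def record(self, ok):
        """Record one outcome; return True when an alert should fire."""
        self._outcomes.append(bool(ok))
        if len(self._outcomes) < self.min_samples:
            return False  # too little data to judge
        return self.success_rate() < self.threshold


# Small window and threshold so the demo is easy to follow.
monitor = SuccessRateMonitor(window=10, threshold=0.9, min_samples=5)
alerts = [monitor.record(ok) for ok in [True, True, True, True, True, False]]
print(alerts)  # [False, False, False, False, False, True]
```

The `min_samples` guard avoids alerts during warm-up, when a single early failure would otherwise dominate the rate.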

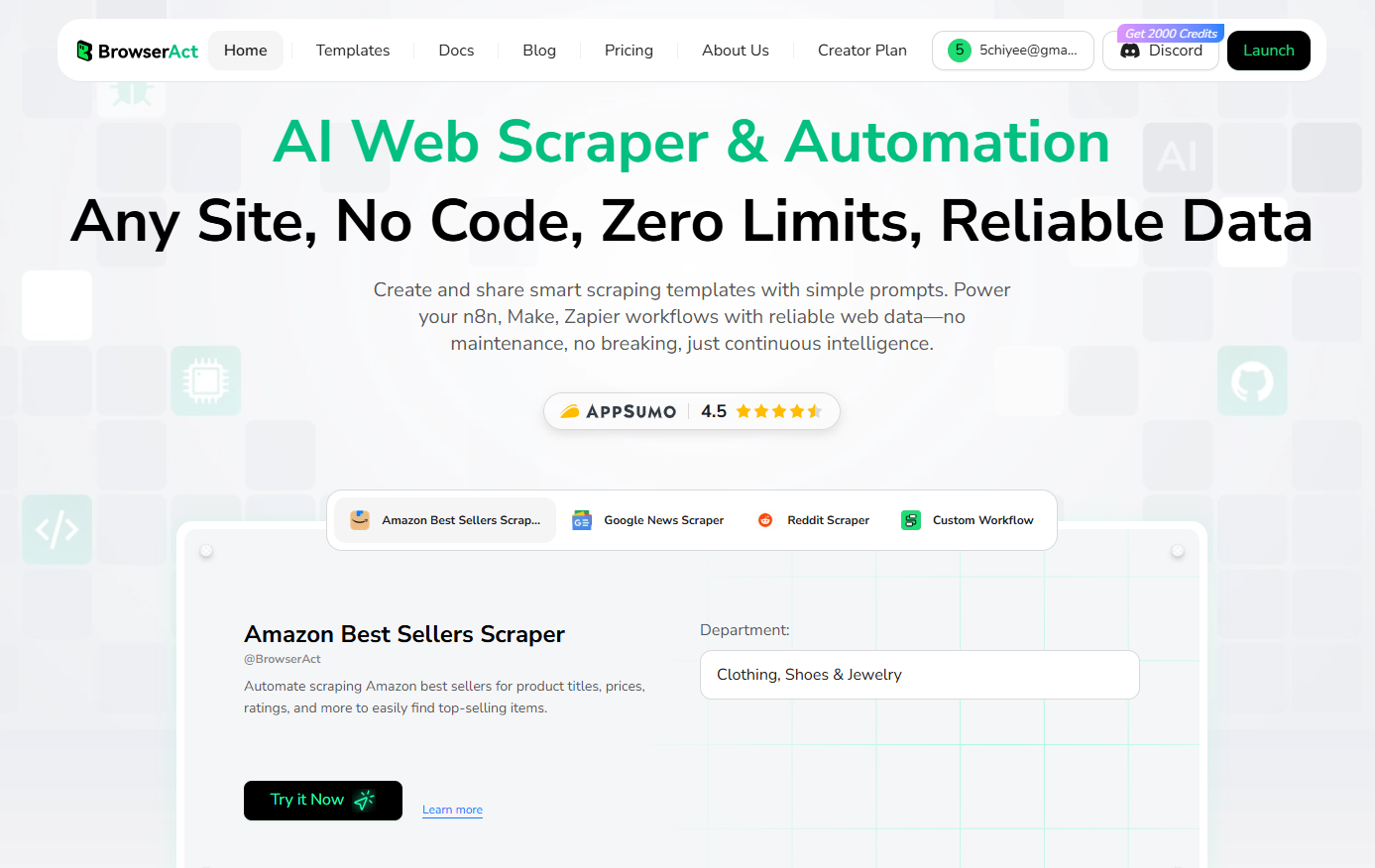

Why Teams Choose BrowserAct for High-Volume Scraping

BrowserAct is an AI-driven, no-code browser automation platform that consolidates all the technical capabilities discussed above—residential/datacenter proxy rotation, JavaScript rendering, anti-bot evasion, CAPTCHA solving, and observability—into a single intelligent endpoint. Instead of integrating and managing multiple services, your team gets enterprise-grade scraping with zero custom code. Setup takes minutes: configure your targets, set your volume requirements, and BrowserAct automatically handles session management, fingerprint randomization, block detection, and adaptive retries.

The platform seamlessly integrates with automation tools like n8n and Make, allowing you to build complete data pipelines without writing code. With built-in MCP (Model Context Protocol) support, BrowserAct connects directly to AI agents and LLM workflows, enabling intelligent data extraction and processing at scale. Pricing is transparent and linear as you scale from thousands to millions of requests—no surprise proxy overages, rendering fees, or hidden costs. Your engineering team eliminates infrastructure maintenance and focuses on what matters: turning scraped data into business value.

Ready to see BrowserAct in action? Get started with 100 free credits daily. Test BrowserAct on your most challenging targets and validate it against the success metrics from this guide: throughput, data quality, reliability, and cost per request. After that, simply pay as you go with transparent per-request pricing that scales with your needs.

