Get Expedia user reviews for free, without paying for a search API!

Expedia is a leading global online travel company, founded in 1996 and headquartered in Seattle, Washington, USA. It provides consumers and businesses with comprehensive travel services through its online platform, including air tickets, hotel reservations, car rentals, vacation packages, cruise bookings, and travel activities.

Expedia has a large user base and heavy traffic, and many travelers read its user reviews and opinions when planning their trips. Many companies in the travel industry analyze Expedia's data to develop strategies and improve their services.

However, Expedia does not offer a complete public search interface for this data, so users have to open each hotel or vehicle listing, check its information and reviews, and then collect and filter everything by hand. That takes a lot of time, effort, and money.

After several days of experimenting, I built a free workflow in BrowserAct that collects Expedia hotel user reviews. Here are my steps and settings.

The tool I use for this is BrowserAct, an AI-guided, no-code browser automation tool that lets me build all kinds of data scrapers.

This is my final workflow. My goal is to enter a specific city and my filtering requirements for the rooms, and then collect the public user reviews for the hotels that match.

1. Create and name a new workflow.

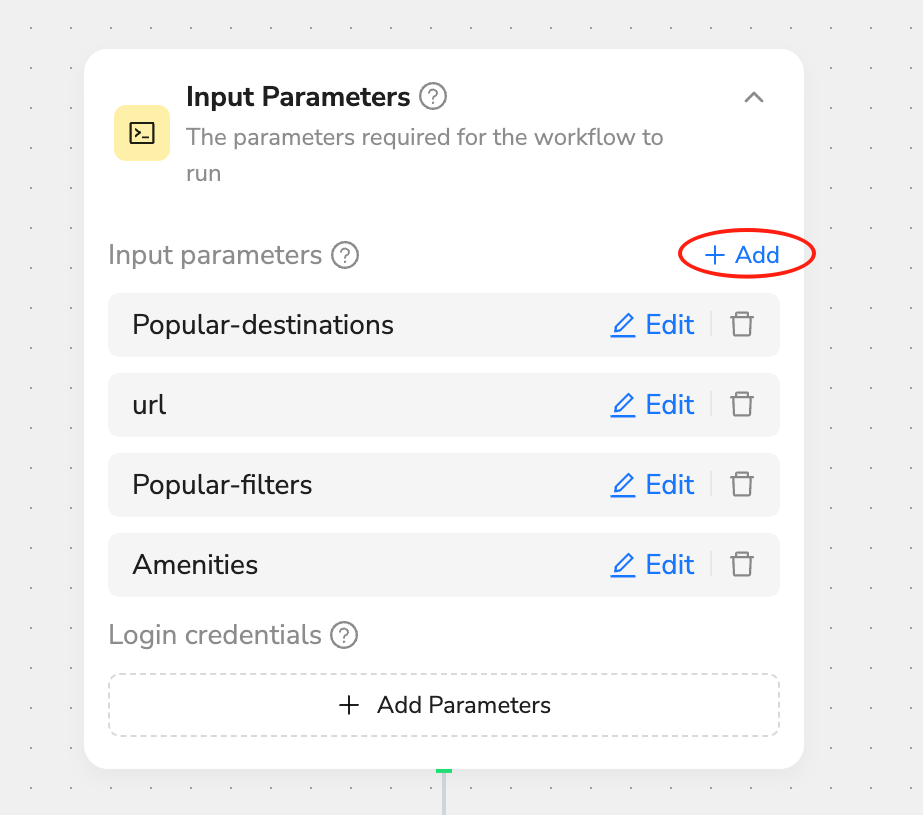

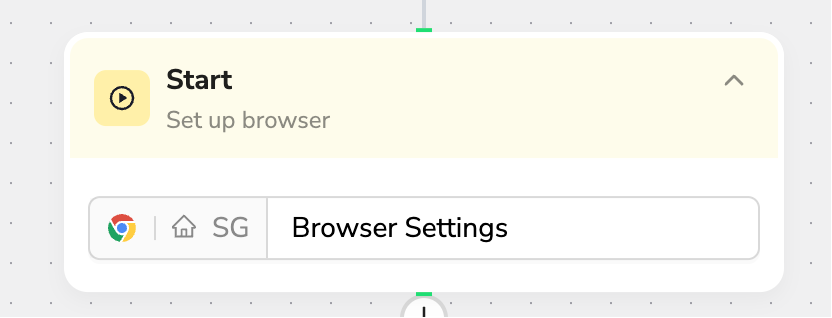

2. Start building the workflow by configuring its basic settings, including the input parameters.

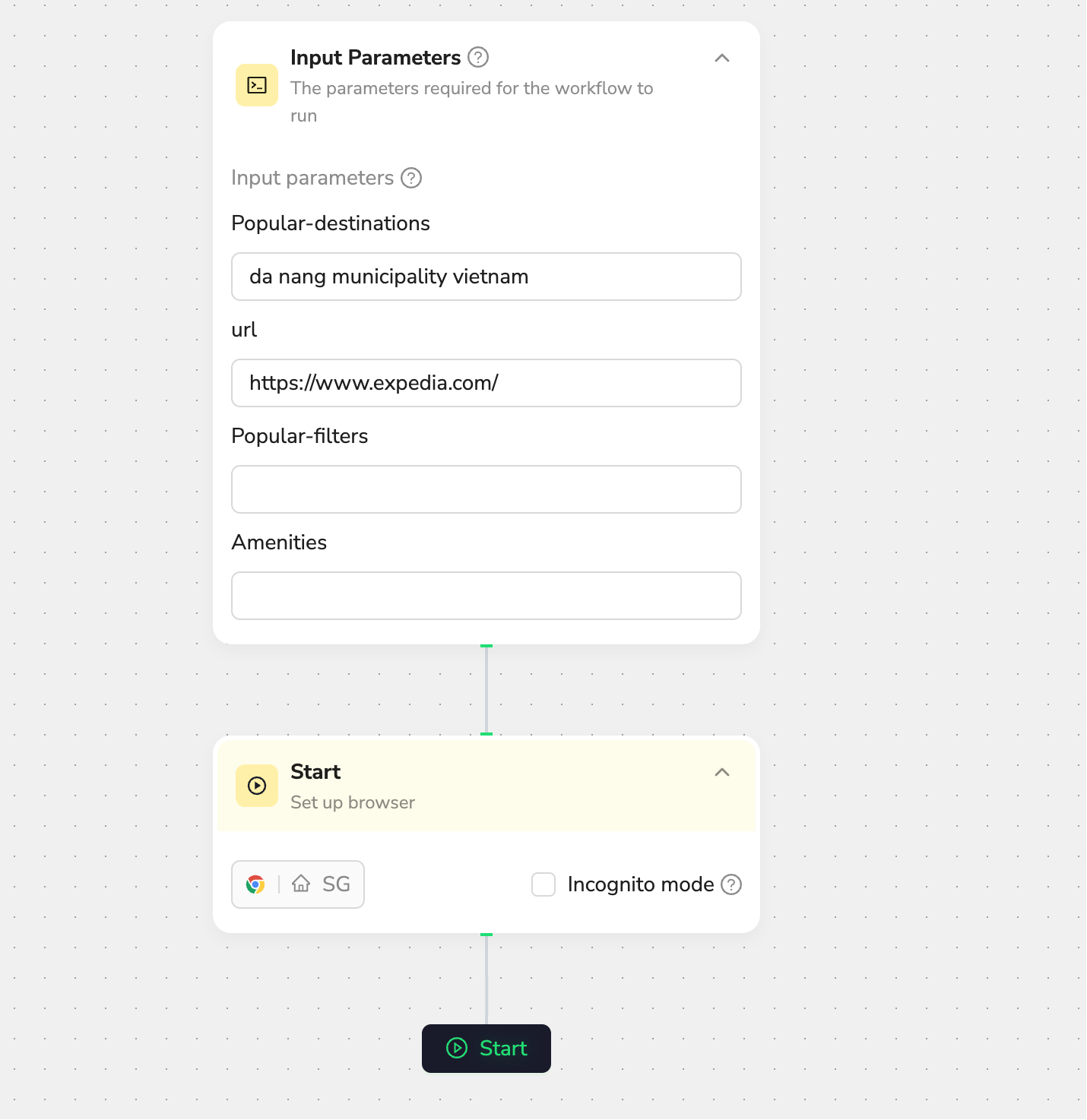

I can add as many input parameters as I need; there is no upper limit. They act as flexible inputs that I fill in each time the workflow runs, so the same workflow can collect different types of data.
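Conceptually, an input parameter is a named placeholder that later nodes fill in at run time. The plain-Python sketch below only illustrates that idea and is not BrowserAct's syntax: the `{{name}}` placeholder style, the parameter names (`city`, `popular_filter`, `amenity`), their values, and the `render` helper are all hypothetical.

```python
import re

# Hypothetical run-time inputs for one workflow run (names and values are illustrative only).
params = {"city": "Singapore", "popular_filter": "Breakfast included", "amenity": "Pool"}


def render(template: str, values: dict) -> str:
    """Replace each {{name}} placeholder with the matching run-time value."""

    def lookup(match: re.Match) -> str:
        key = match.group(1)
        if key not in values:
            # Fail loudly instead of typing an empty string into the page.
            raise KeyError(f"missing input parameter: {key}")
        return values[key]

    return re.sub(r"\{\{(\w+)\}\}", lookup, template)


print(render("Type {{city}} into the destination box", params))
```

Changing the values in `params` changes what every step types, without touching the steps themselves, which is the point of defining the inputs up front.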

Expedia does not require a login to view this data, so I did not enter my account.

3. Build the main workflow.

I chose the Singapore node because I want to see whether the data is consistent across different nodes.

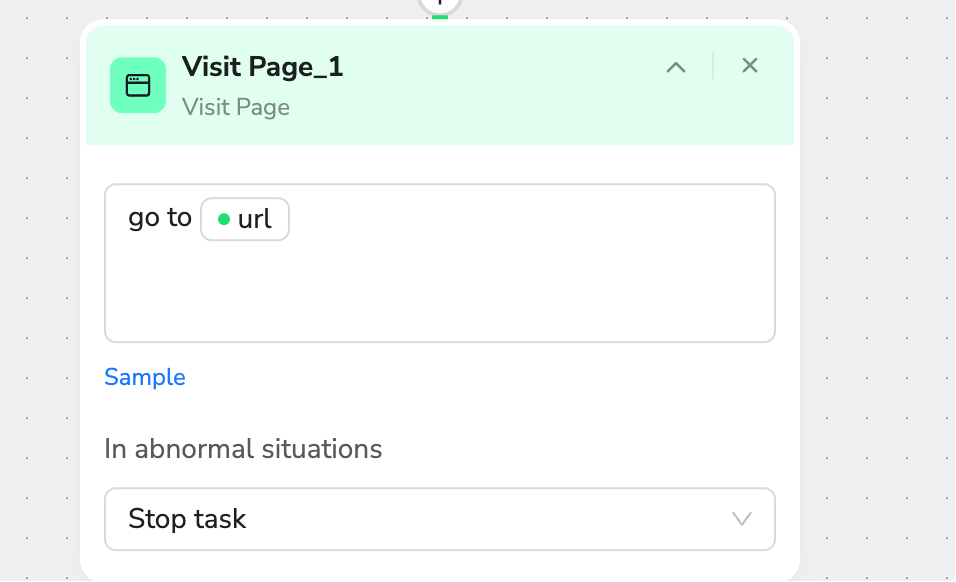

I start by having the workflow navigate to Expedia automatically, which minimizes the wait before the data collection step. I can reference the parameters I configured in step 2 directly, which keeps manual input to a minimum.

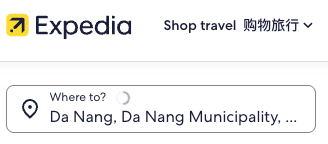

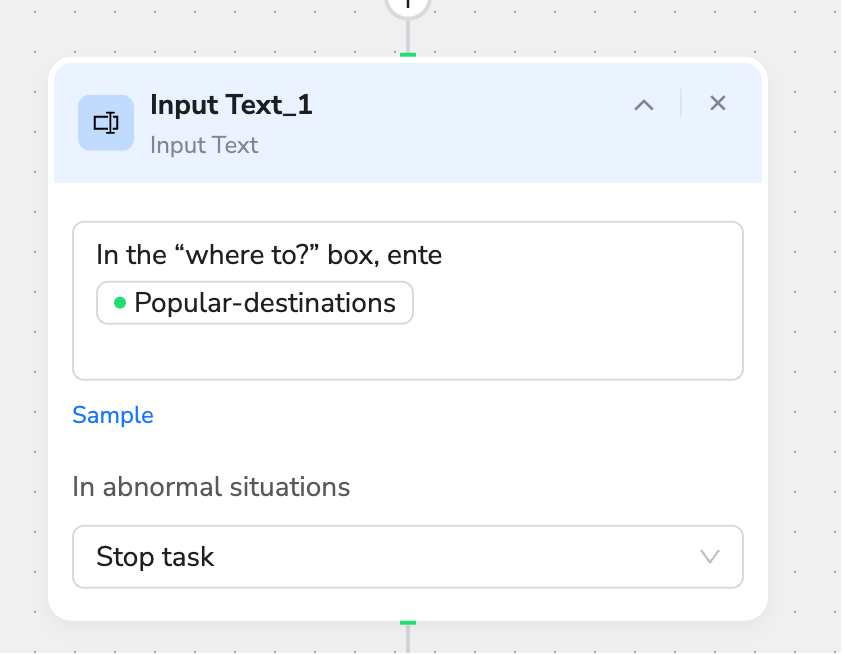

Next, I use an input node to type into the specified field on Expedia. The text it enters can reference any of the keyword parameters I created at the beginning.

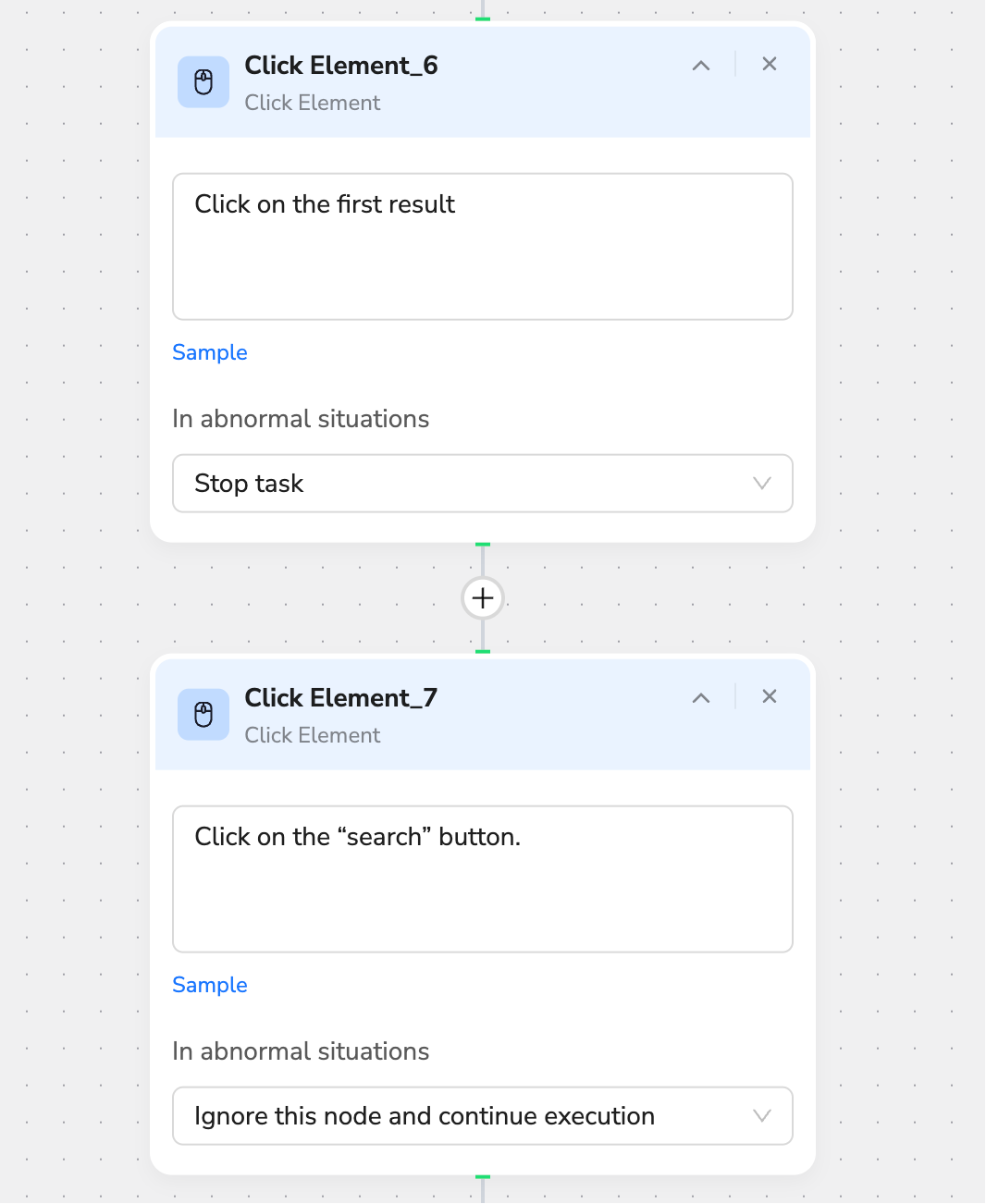

Then I use a click node and a search node so that the results page expands after the search.

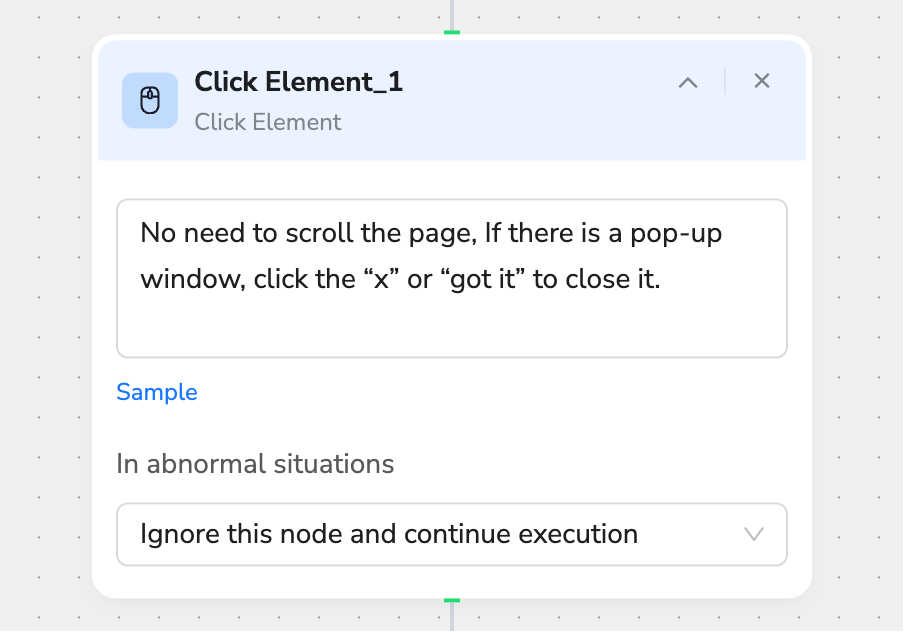

There is one node here that I like a lot and usually add: it skips the pop-up ads or notices that suddenly appear on some pages, so they don't interrupt data collection.

4. Use special nodes

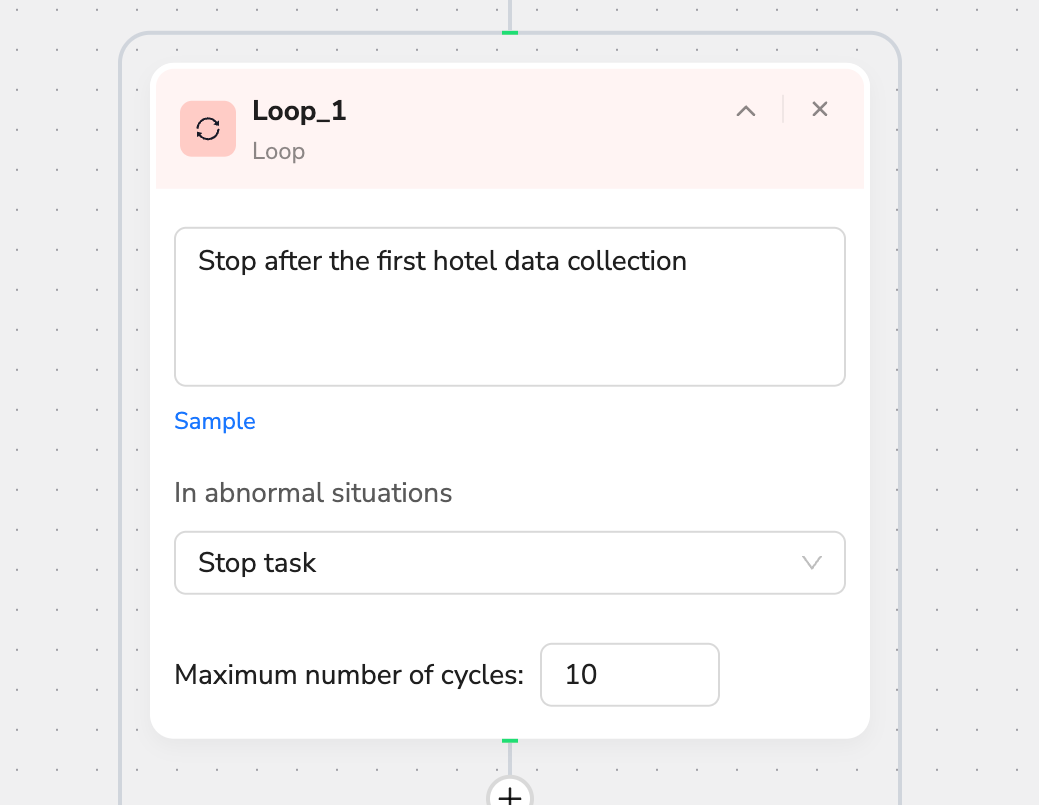

I used a loop because there is a large amount of data and many hotels this time. The loop repeats the scrolling and collection steps for me.
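For readers curious what such a loop does, here is a rough plain-Python equivalent of "scroll, read the hotel list, and stop when nothing new appears". It is a sketch under assumptions, not how BrowserAct implements its loop node: `load_more` is a hypothetical stand-in for one scroll-and-read step, and the page is simulated with placeholder hotel names.

```python
from typing import Callable


def collect_until_exhausted(load_more: Callable[[int], list], max_rounds: int = 10) -> list:
    """Repeat a 'scroll, then read the hotel list' step until nothing new appears or max_rounds is reached."""
    collected = []  # keeps the order in which hotels were found
    seen = set()    # fast membership check for hotels already collected
    for round_no in range(max_rounds):
        batch = load_more(round_no)
        new_items = [name for name in batch if name not in seen]
        if not new_items:
            break  # this scroll revealed no new hotels, so stop looping
        collected.extend(new_items)
        seen.update(new_items)
    return collected


# Simulated results page: each "scroll" reveals two more hotels (placeholder names).
hotels = ["Hotel A", "Hotel B", "Hotel C", "Hotel D", "Hotel E"]


def fake_scroll(round_no: int) -> list:
    return hotels[: (round_no + 1) * 2]


print(collect_until_exhausted(fake_scroll))
```

The `max_rounds` cap is a safety net so the loop always ends, even if a page keeps loading content indefinitely.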

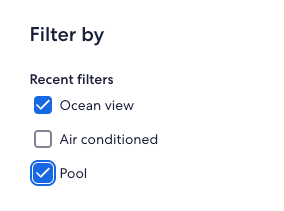

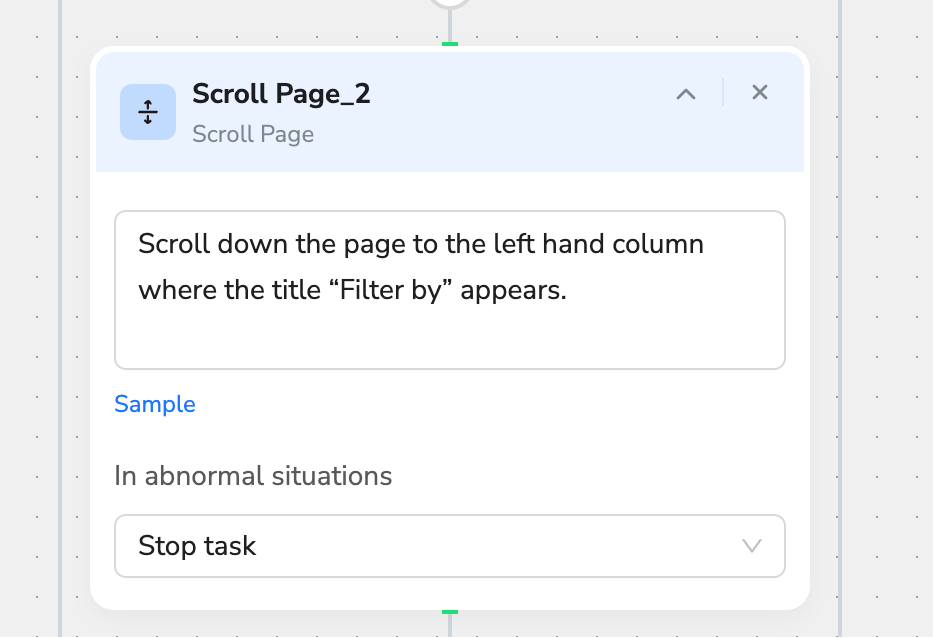

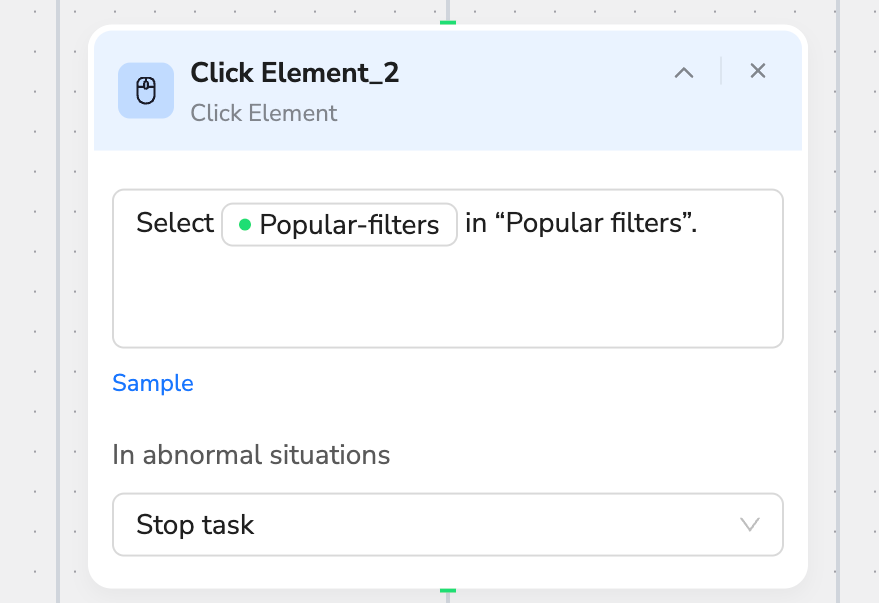

Here I have the page scroll to “Filter by”, then find and check the “Popular filters” options I entered. I can simply describe this process in my own words.

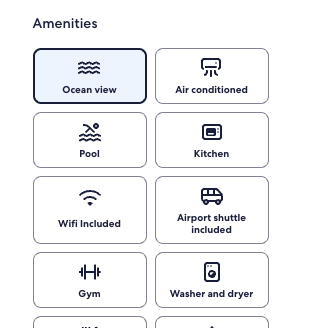

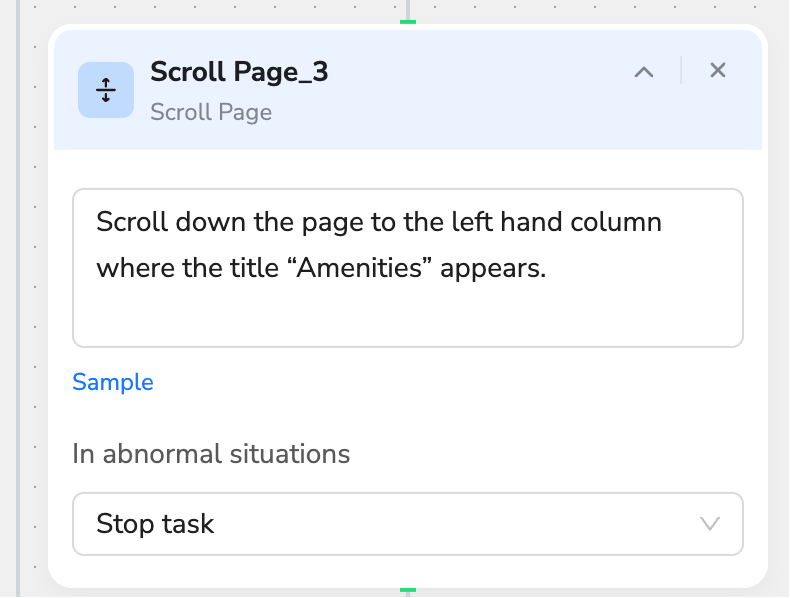

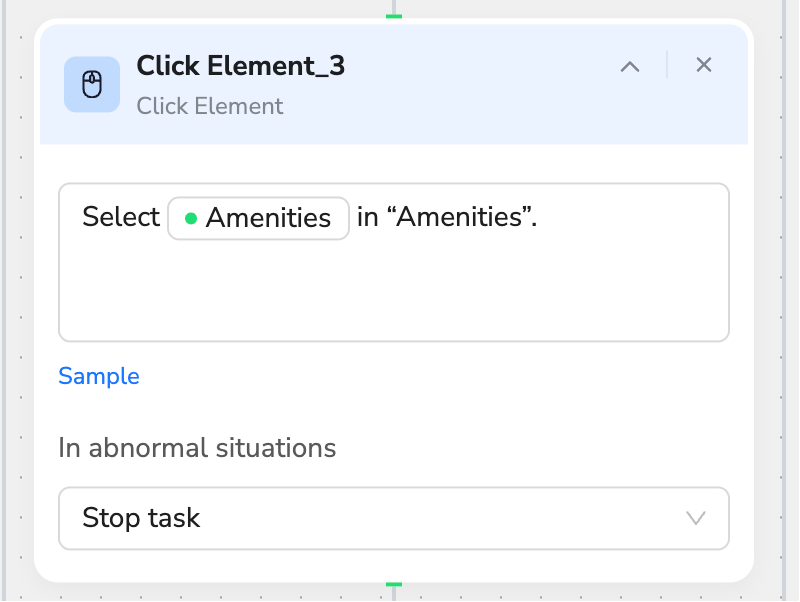

“Amenities” works the same way. I can add more filter types too; I just need to add the corresponding keywords as input parameters at the beginning.
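The filter step boils down to matching the keywords I entered against the checkbox labels shown on the page. A minimal Python sketch of that matching, assuming case-insensitive exact matches; the `pick_filters` helper and the sample labels are hypothetical and do not come from BrowserAct or from Expedia's actual filter list.

```python
def pick_filters(available: list, requested: list) -> tuple:
    """Match requested filter keywords to on-page labels, ignoring case and surrounding spaces.

    Returns (labels_to_check, keywords_not_found).
    """
    by_key = {label.strip().casefold(): label for label in available}
    to_check, not_found = [], []
    for keyword in requested:
        label = by_key.get(keyword.strip().casefold())
        if label is None:
            not_found.append(keyword)  # worth reporting instead of silently skipping
        else:
            to_check.append(label)
    return to_check, not_found


# Hypothetical labels under one filter group.
labels = ["Breakfast included", "Pool", "Free cancellation"]
print(pick_filters(labels, ["pool", "Spa"]))
```

Reporting unmatched keywords makes it easy to notice when a filter name on the site differs from the keyword I typed.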

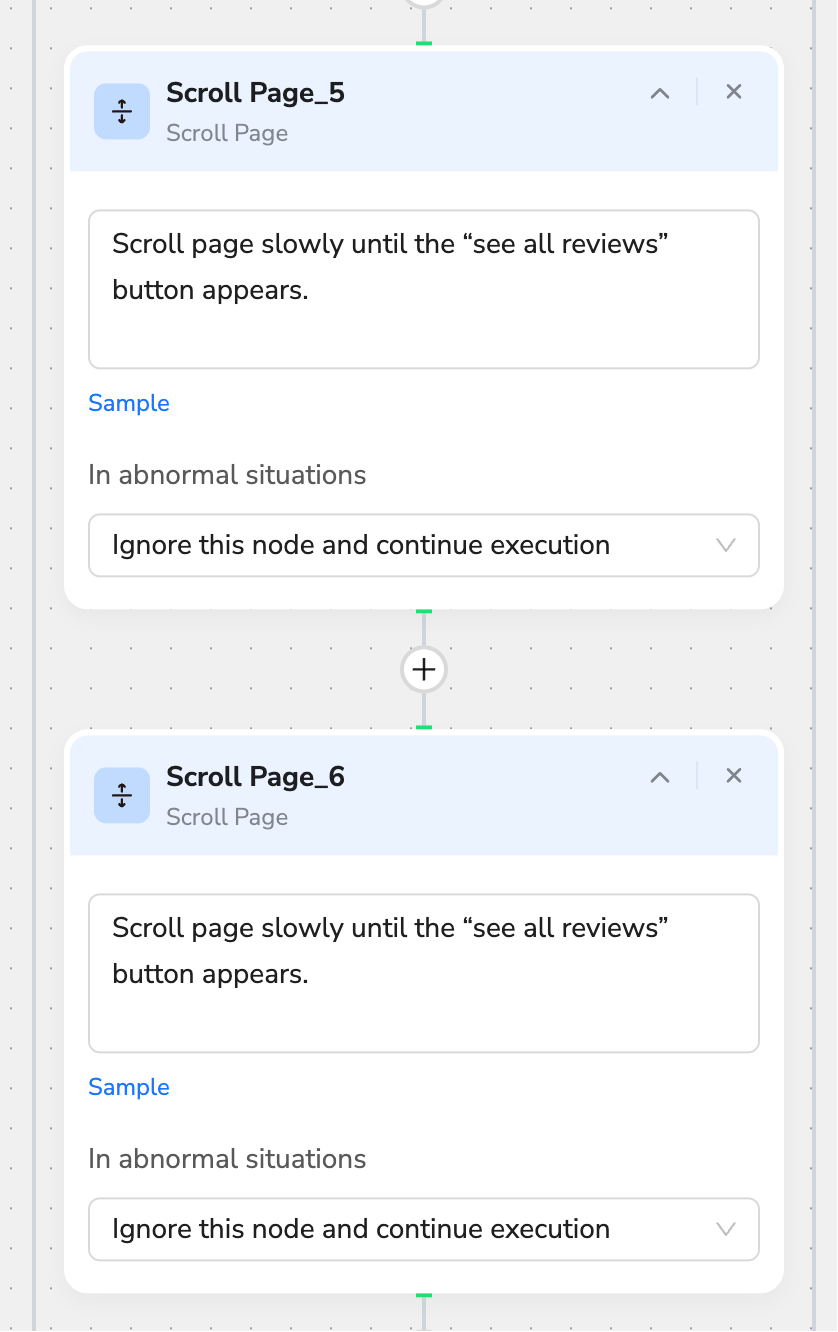

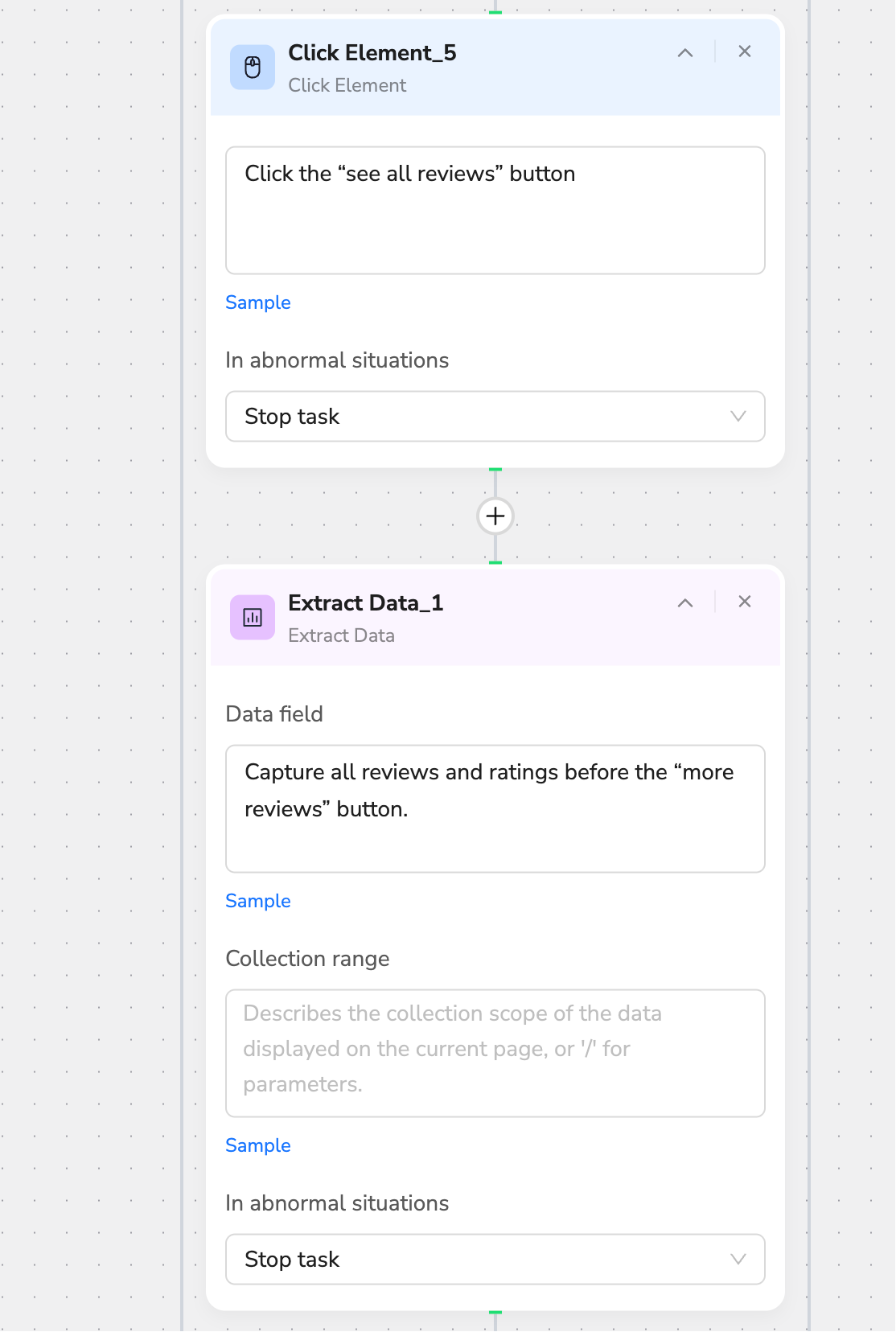

Finally, the workflow scrolls down the page (I added two scrolls in case one was not enough) and clicks the reviews button to capture the publicly visible review data.

5. Extract data

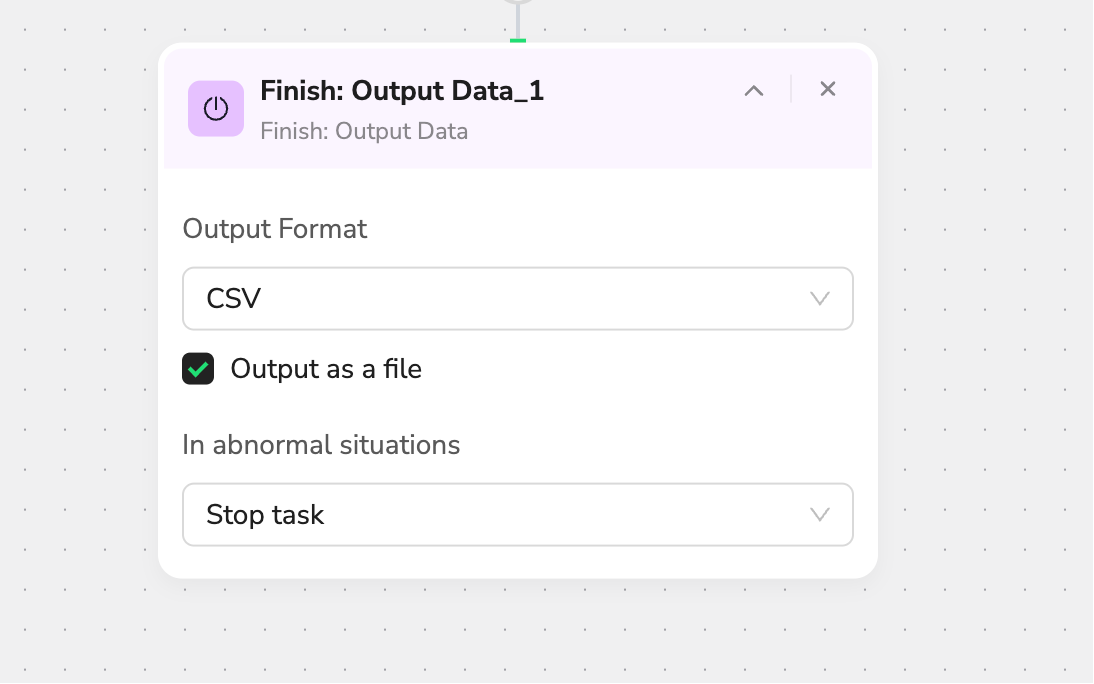

The loop handled all the repetitive collection work for me, and at the last node of the workflow I specified the output data format. This gives me a CSV file.

And just like that, I get the CSV file I showed at the beginning, containing all the data I needed. I didn't use a single line of code. All I had to do was plan my scraping strategy and turn that plan into an executable, automated workflow. This has saved me a tremendous amount of work. Now I can use the latest data from Expedia to write my analysis, gather insights, and apply them to my work. Best of all, the entire process used virtually no credits.
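Once the CSV is downloaded, any tool can filter it. As one example, here is a minimal Python sketch using the standard `csv` module. The column names `hotel_name`, `rating`, and `review_text` are assumptions, since the real headers depend on the fields configured in the extraction node, and the sample rows are made up.

```python
import csv
import io


def high_rated_reviews(csv_text: str, min_rating: float = 8.0) -> list:
    """Return review rows whose rating is at least min_rating, skipping rows without a usable rating."""
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        try:
            rating = float(row["rating"])
        except (KeyError, TypeError, ValueError):
            continue  # missing column, short row, or non-numeric rating
        if rating >= min_rating:
            rows.append(row)
    return rows


# Made-up sample in the assumed export format.
sample = """hotel_name,rating,review_text
Hotel A,9.2,Great location
Hotel B,7.5,Room was small
Hotel C,,No score given
"""
print([r["hotel_name"] for r in high_rated_reviews(sample)])
```

With a real export, `csv_text` would be the file's contents, for example `open("reviews.csv", encoding="utf-8").read()` with a hypothetical file name.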

BrowserAct can also be used with n8n, and I will keep exploring how to orchestrate its scraping capabilities there. Stay tuned for my next results.
