Reddit Scraper

Brief

What Does BrowserAct Reddit Scraper Do?

Automatically extract Reddit post data and associated comments with our powerful Reddit scraper tool. Capture post titles, body text, publication times, comment counts, individual comment threads, commenter details, and timestamps from any subreddit or search results page. Enjoy flexible filtering and output options for comprehensive discussion analysis—no coding required.

Our Reddit scraper is built for seamless integration with automation platforms like Make.com, making it ideal for ongoing monitoring and data extraction tasks.

Key Features of Reddit Scraper

- Customizable Parameters: Adjust Total_Comments to control extraction depth (e.g., 10, 20, or 50 per post).

- Flexible Post Selection: Set max loop items for 5, 10, or more posts from searches or subreddits.

- Dual-Level Extraction: Captures post metadata and comment threads for full context.

- Hierarchical Data Structure: Preserves post-comment relationships.

- Search & Subreddit Support: Compatible with searches, subreddits, and trending pages.

- No-Code & Free to Use: Runs directly in your browser with a simple setup; no installation or coding required.

- Reliable Data Collection: Avoids blocks with a built-in IP management system, ensuring complete and uninterrupted scraping.

- Automation Integration: Connects with Make.com and n8n to schedule runs and auto-save data to Google Sheets.

- Flexible Data Export: Download collected data in standard, analysis-ready formats like CSV and JSON.

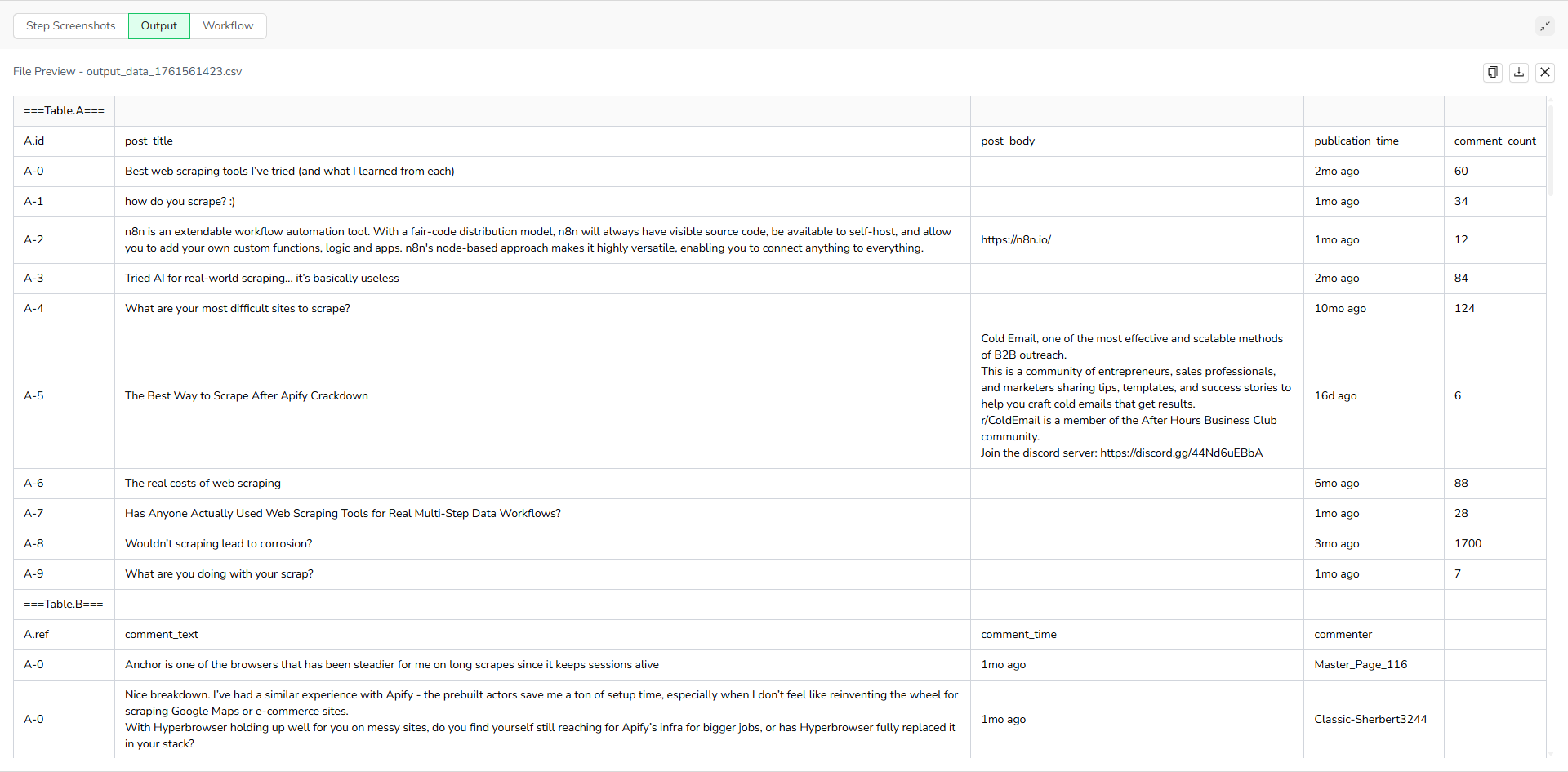

What Data Can I Extract from Reddit?

With BrowserAct's Reddit Scraper, you can pull a wide range of publicly available data for analysis. Here's a breakdown:

Reddit Posts

- Post titles

- Body text

- Publication times

- Comment counts

Reddit Comments

- Individual comment threads

- Commenter details

- Timestamps

How to Scrape Reddit

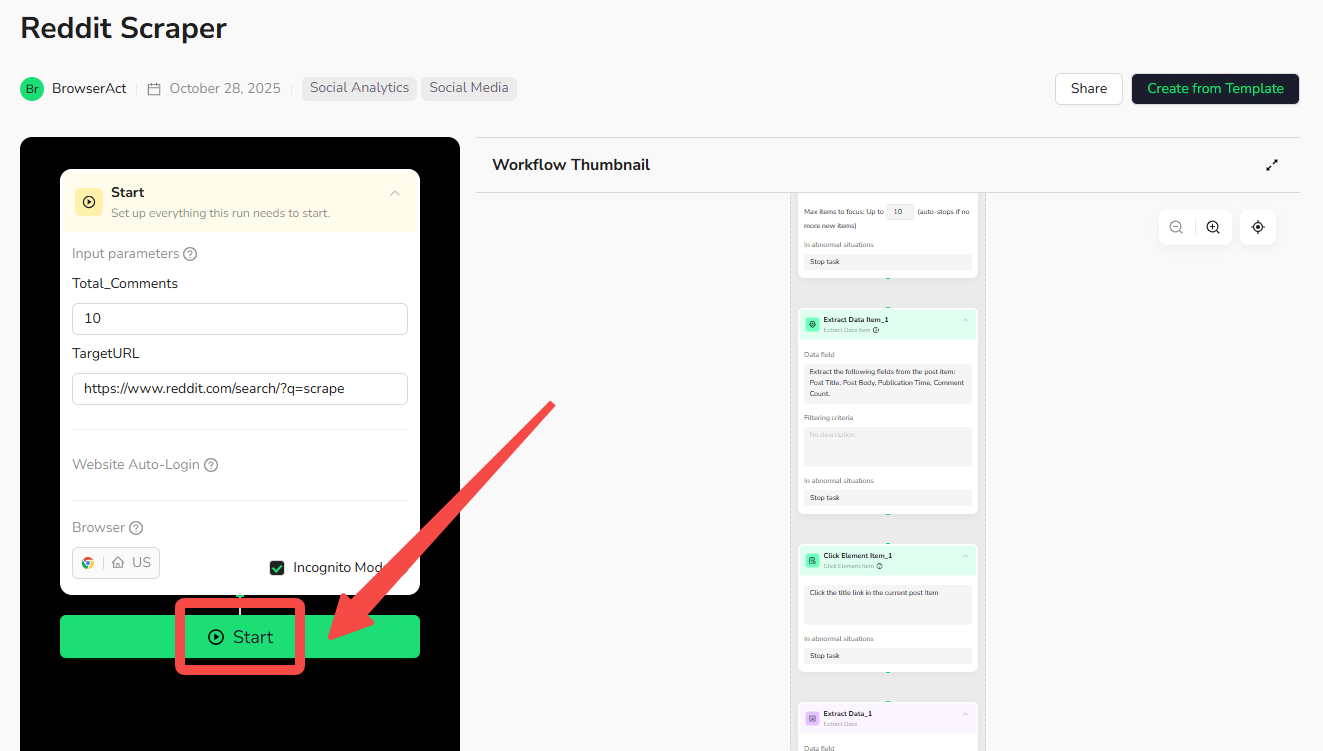

Quick Start Guide: How to Use Reddit Scraper in One Click

If you want to quickly start experiencing scraping Reddit, simply use our pre-built "Reddit Scraper" template for instant setup and start scraping Reddit effortlessly.

- Register Account: Create a free BrowserAct account using your email.

- Configure Parameters: Fill in necessary inputs like TargetURL (e.g., "https://www.reddit.com/search/?q=scraper") and Total_Comments (e.g., 10) – or use defaults to learn how to scrape Reddit quickly.

- Start Execution: Click "Start" to run the workflow.

- Download Data: Once complete, download the results file from Reddit scraping.

Why Scrape Reddit?

Scraping Reddit allows you to systematically collect and analyze public data from its communities. This process is valuable for gathering specific information that can be used for business, research, and analysis. Here are the primary reasons to scrape Reddit:

- Gather Public Opinion: Extract comments and posts to understand public sentiment on specific topics, products, or brands. This data provides direct feedback from users.

- Conduct Market Research: Collect conversations from niche communities (subreddits) to identify consumer needs, pain points, and preferences within a target demographic.

- Track Trends and Topics: Monitor discussions in real-time to detect emerging trends, popular subjects, and shifts in conversation before they become mainstream.

- Monitor Brand Mentions: Systematically find and log every mention of your brand, products, or competitors to manage online reputation and analyze feedback.

- Build Datasets for Analysis: Create structured datasets from Reddit's public text data for use in machine learning, academic research, or detailed statistical analysis.

Scraping automates the data collection process, enabling you to efficiently gather large volumes of information from specific subreddits or search queries for structured analysis.

How to Build a Reddit Scraper Workflow: Step by Step

Reddit Scraper workflow building with BrowserAct requires no coding skills—it's automation-ready and easy to set up. Follow these step-by-step instructions to get started.

1. Determine Your Scope

Decide the number of posts and comments to extract (e.g., 10 posts with the first 10 comments each). Adjust parameters like Total_Comments for flexibility.

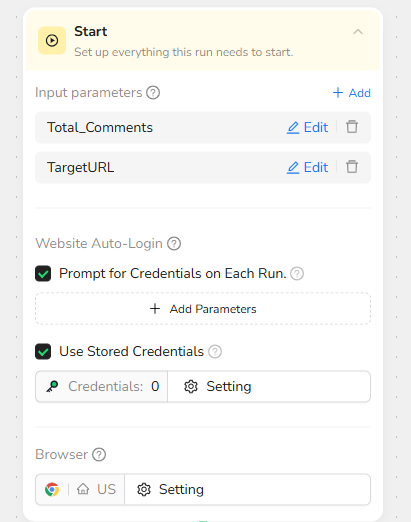

2. Start Node Parameter Settings

- Total_Comments: Set the number of comments per post (e.g., 10). Delete this for unlimited extraction.

- TargetURL: Enter your Reddit link (e.g., https://www.reddit.com/search/?q=scraper).

Note: Customize based on needs—works with subreddits, searches, or trending pages.a

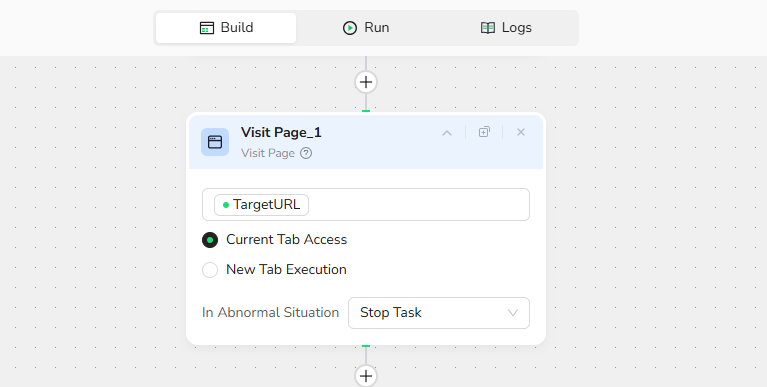

3. Visit Page

In the prompt box, enter "/targeturl" – this will navigate to the target URL.

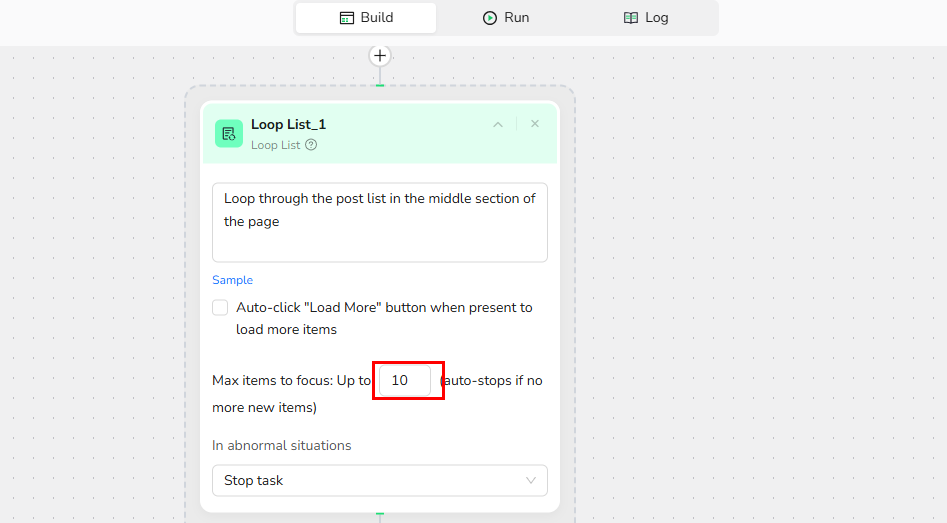

4. Add Loop List Node

Loop List Prompt: "Loop through the post list in the middle section of the page". This node specifies the location for looping through the list.

- Max Focused Loop Items: Set to your desired post count (e.g., 10 for 10 posts).

Note: The number of focused items here represents the number of posts you want to extract. For example, if you want to extract 20 posts, adjust this value to 20.

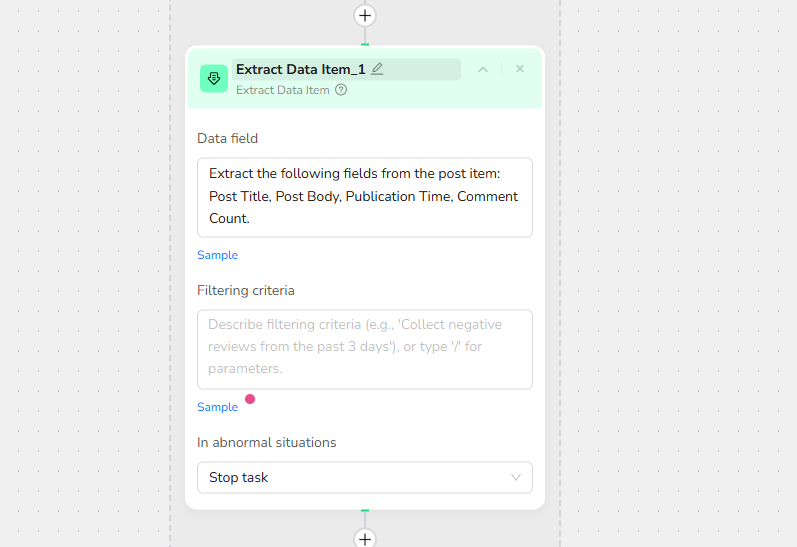

5. Add Extract Data Item

In the prompt box, enter "Extract the following fields from the post item: Post Title, Post Body, Publication Time, Comment Count."

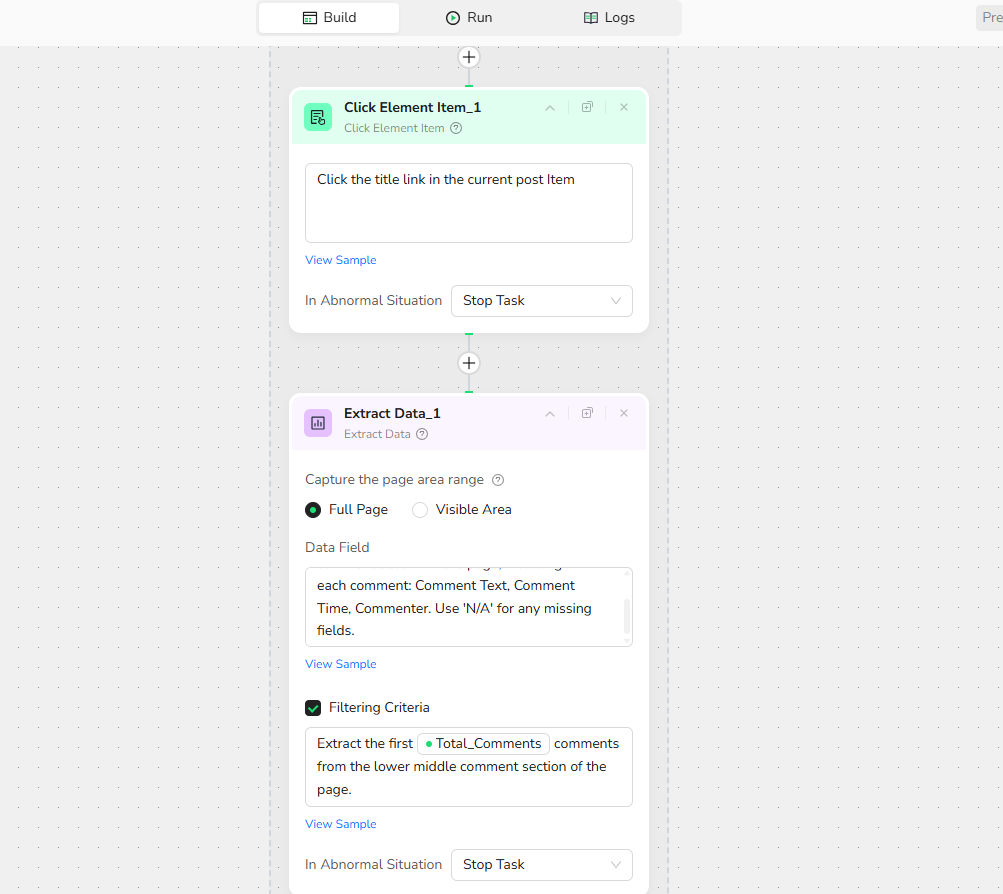

6. Add Click Element Item

In the prompt box, enter "Click the title link in the current post Item".

7. Add Extract Data

- In the Data Field prompt box, enter "Extract comments from the lower middle comment section of the page, including for each comment: Comment Text, Comment Time, Commenter. Use 'N/A' for any missing fields." Note: You can specify the exact location of the data to be collected on the page to increase the accuracy of data extraction.

- In the Filtering Criteria prompt box, enter "Extract the first /Total_Comments comments from the lower middle comment section of the page."

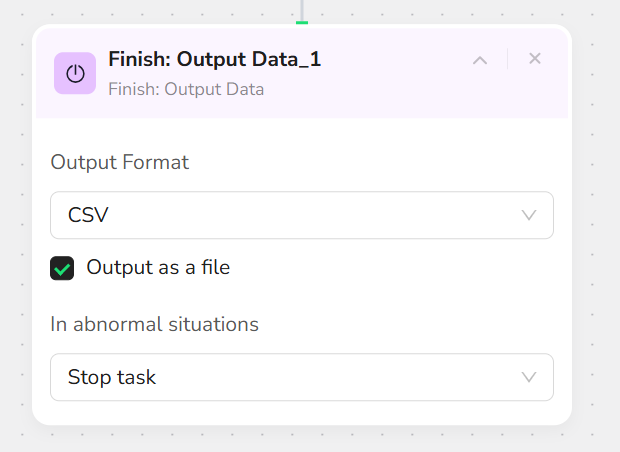

8. Output Data

Export in JSON, CSV, XML, or Markdown (MD) formats.

Who Can Use Reddit Scraper?

Reddit Scraper is designed for anyone needing quick, reliable access to Reddit data. It's ideal for a variety of users, including:

- Market Researchers and Analysts: Extract discussions for consumer behavior studies, trend analysis, and sentiment tracking.

- Brand Managers and Marketers: Monitor feedback, track brand mentions, and identify PR opportunities across subreddits.

- Product Developers and Teams: Gather user insights on pain points and features to inform product roadmaps.

- Content Creators and Journalists: Collect opinions, quotes, and trends for articles, reports, or social content.

- Data Scientists and Developers: Build datasets for machine learning, APIs, or custom integrations with tools like Make.com.

- Small Businesses and Startups: Conduct affordable competitive research without needing advanced technical skills.

- Academics and Students: Research community opinions on topics like politics, technology, or social issues.

No matter your background, if you're looking to scrape Reddit without hassle, this tool is accessible and effective for both individuals and teams.

Is It Legal to Scrape Reddit?

Yes, scraping publicly available data from Reddit is generally considered legal, provided it is done ethically and respects the platform's rules. This means only accessing information that is visible to any public visitor (like posts and comments), never attempting to access private data, and adhering to Reddit's Terms of Service to avoid overwhelming their servers with excessive requests. The way you use the data is also critical; it should not be used to violate copyright or privacy laws like GDPR or CCPA. Our Reddit Scraper is designed to operate within these responsible guidelines, focusing on ethical data extraction. Please note that this information does not constitute legal advice, and we recommend consulting with a legal professional to ensure full compliance for your specific project.

How Many Results Can You Scrape?

Our Reddit Scraper offers complete flexibility, giving you full control over the scale of your data extraction. By default, it is set to capture the top 10 posts and the first 10 comments under each post, which is ideal for quick sampling and testing. However, these numbers are fully customizable in the settings, allowing you to scrape hundreds or even thousands of posts and comments. Ultimately, there are no hard limits—the tool is built to scale with your needs.

Just keep in mind that scraping a larger volume of data will naturally require more time to run and will consume more of your platform credits.

Make.com Integration

BrowserAct's Reddit Scraper is now available as a native app on Make.com—add it to your scenarios without API hassle.

- Automation-Ready: Integrate with Make, n8n, or others for scheduled monitoring.

- Rate Limit Handling: Built-in delays to comply with Reddit policies.

- Multi-Topic Tracking: Run instances for different keywords/subreddits.

💡 Use Case Tip: Ideal for discussion-level analysis, sentiment tracking, and feedback research with post context and top comments.

🚀 Quick Start with Make.com: Search for "BrowserAct" in Make.com's app directory and add it directly—no complex setup.

Need help? Contact us at

- 📧 Discord: [Discord Community]

- 💬 E-mail: service@browseract.com