What Is Web Scraping and How to Do It Safely


Web scraping is a way of collecting information from websites automatically. You may not notice it, but it happens everywhere: on store websites, job search sites, and travel apps. This article covers what web scraping is, how to scrape without getting blocked, and how to use tools such as BrowserAct to automate the work so it gets done faster and with less effort.

What Is Web Scraping

Web scraping is the practice of using automated tools to collect data from websites. But beyond the definition, it’s a vital part of how many businesses stay competitive, react quickly to the market, and make data-backed decisions — especially when there’s no public API available.

Let’s look at how it works in real-world scenarios:

- E-commerce pricing war:

Imagine you're running a Shopify-based electronics store. Your main competitor on Amazon changes their prices daily. Instead of checking manually, your scraper automatically checks and records their prices every 2 hours. This lets you react faster with dynamic pricing strategies or bundle offers, without cutting too far into your margins.

- Job aggregator platforms:

Sites like Indeed or Glassdoor can’t rely on companies submitting listings. They scrape thousands of career pages from firms like Microsoft, Tesla, or small startups. That’s how job seekers can search one place and see most available jobs, even if those jobs were never manually posted to these platforms.

- Real estate market analysis:

For investors or property listing platforms, scraping data from sites like Zillow, Redfin, or Realtor helps identify pricing trends, average time on market, or what features (e.g., home office, solar panels) are becoming more common in hot zip codes.

- Travel deals and fare comparison:

Think of apps like Skyscanner or Hopper. They scan hundreds of airline and hotel sites, scraping flight times, prices, and availability. Without scraping, users would have to manually check each airline’s site one by one.

- B2B lead generation:

A sales team wants to target all Shopify stores that launched in the last 6 months. A scraper can scan store directories and collect emails, domain names, and contact pages, feeding them directly into the CRM for outreach campaigns.

In short, scraping isn’t just about copying text from a website — it’s about turning the open web into a real-time, structured data source for your specific business strategy.

How to Avoid Being Blocked While Scraping

When scraping, websites may try to block your actions. That’s because too many requests from your scraper can look like a cyberattack. Here are some easy ways to reduce the chance of being blocked:

Rotate User Agents

Every browser sends a "user agent" string that tells websites which browser and device is visiting. If you keep using the same one, the site is more likely to flag your traffic. Rotate through several user agents so your requests are harder to link together.
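As a rough illustration of user-agent rotation, here is a minimal Python sketch using only the standard library. The user-agent strings and the `build_request` helper are assumptions made for this example, not part of any particular tool. Each call attaches the next string in the list to the outgoing request.

```python
import itertools
import urllib.request

# Illustrative user-agent strings (assumed values); replace with current ones.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:125.0) Gecko/20100101 Firefox/125.0",
]

# cycle() loops over the list endlessly, so the rotation never runs out.
_ua_cycle = itertools.cycle(USER_AGENTS)


def build_request(url: str) -> urllib.request.Request:
    """Return a Request whose User-Agent header is the next one in the rotation."""
    return urllib.request.Request(url, headers={"User-Agent": next(_ua_cycle)})
```

The request object can then be passed to `urllib.request.urlopen`. Cycling in order is the simplest choice; picking at random would work just as well.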

Use Proxies

Proxies hide your real IP address. With a pool of proxies, your requests appear to originate from different locations, so your scraper's traffic resembles many users instead of one.
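One way to sketch this in Python is to pick a proxy from a pool for each session. The proxy addresses below are placeholders and the helper name `opener_with_random_proxy` is made up for this example; substitute proxies you are actually permitted to use.

```python
import random
import urllib.request

# Hypothetical proxy endpoints (placeholders, not real servers).
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
]


def opener_with_random_proxy(proxies=PROXIES):
    """Build a urllib opener that sends HTTP and HTTPS traffic through one randomly chosen proxy."""
    proxy = random.choice(proxies)
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    # Return the chosen proxy too, so callers can log which one was used.
    return proxy, urllib.request.build_opener(handler)
```

Calling `opener.open(url)` on the returned opener would route that request through the selected proxy.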

Limit Request Speed

Scrape too quickly and you risk being detected as a bot. Slow your requests down and place random pauses between them so the traffic looks like it comes from a human.
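Random pauses can be sketched in a few lines of Python. The function name `polite_pause` and the default range of 1 to 4 seconds are assumptions chosen for illustration; a suitable range depends on the site.

```python
import random
import time


def polite_pause(min_seconds: float = 1.0, max_seconds: float = 4.0) -> float:
    """Sleep for a random duration in [min_seconds, max_seconds] and return it."""
    delay = random.uniform(min_seconds, max_seconds)
    time.sleep(delay)
    return delay
```

Calling `polite_pause()` between consecutive requests keeps the interval irregular instead of fixed.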

Bypass CAPTCHAs

Some sites use CAPTCHAs to keep bots out. If necessary, you can solve them with services like 2Captcha or with AI models.

These techniques help your scraper avoid notice and reduce the chance that a website blocks your access.

How to Deal with Dynamic Content

Many websites today don't deliver all of their content in the initial page load. They load parts of the page after you've opened it. That's dynamic content.

JavaScript is what drives dynamic content. It tells the page to load more when you scroll down or press a button.

Here's what you can do about it:

Use a Headless Browser

A headless browser behaves like a normal web browser except that it has no visible window. It runs in the background and executes JavaScript, so dynamically loaded content gets rendered. Puppeteer and Playwright are two tools for this.

Inspect API Requests

Sometimes dynamic content is delivered by an undocumented API. Open the browser developer tools, find the request in the Network tab, and copy its URL. You can then often fetch the same data directly from that URL.
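Once such an endpoint has been found, fetching it directly might look like the sketch below. The endpoint URL, the query parameters, and the `{"items": [...]}` response shape are all hypothetical; a real site's API will differ, so adjust the parsing to whatever the developer tools show.

```python
import json
import urllib.parse
import urllib.request


def build_api_url(base_url: str, **params) -> str:
    """Append URL-encoded query parameters to the endpoint."""
    return f"{base_url}?{urllib.parse.urlencode(params)}"


def parse_items(payload: str) -> list:
    """Extract (name, price) pairs from JSON text shaped like {"items": [...]} (assumed shape)."""
    data = json.loads(payload)
    return [(item["name"], item["price"]) for item in data.get("items", [])]


def fetch_items(base_url: str, **params) -> list:
    """Download one page of results from the endpoint and parse it."""
    with urllib.request.urlopen(build_api_url(base_url, **params), timeout=10) as resp:
        return parse_items(resp.read().decode("utf-8"))
```

A call such as `fetch_items("https://example.com/api/products", page=2)` would then return the parsed rows, assuming the endpoint exists and returns that structure.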

Use Browser Automation Tools

Browser automation tools can click buttons, scroll pages, and more, just like a human. This makes it easier to reach lazily loaded or hidden data.

Handling dynamic content is important if you want complete, accurate results from your scraping.

How To Use BrowserAct For Quick Web Scraping

BrowserAct is an easy-to-use tool for fast web scraping. Whether you are new to scraping or need something heavy-duty, BrowserAct can help you fetch the information you need with less effort.

Here's how to set up BrowserAct:

Step 1: Sign Up

Start by visiting the BrowserAct website and registering for an account. You can sign up with your email, Google, or GitHub account—whichever suits you best.

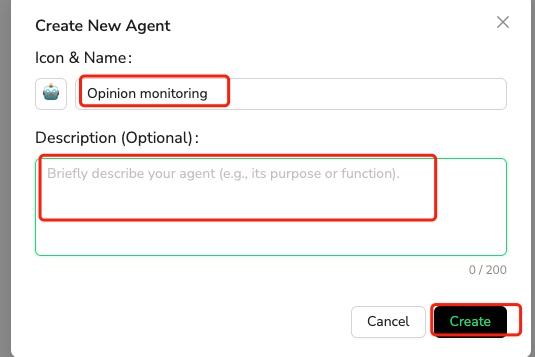

Step 2: Set up Your First Agent

When you're signed in, click the "+Create" button to set up your first agent. An "agent" is really just a smart helper that does the web scraping for you.

During setup of your agent, you'll:

- Choose a name and icon for your agent

- Provide a short description (what the agent should do)

- Choose a language model and set up your IP address preferences

This keeps everything organized and ready to go for different kinds of scraping jobs.

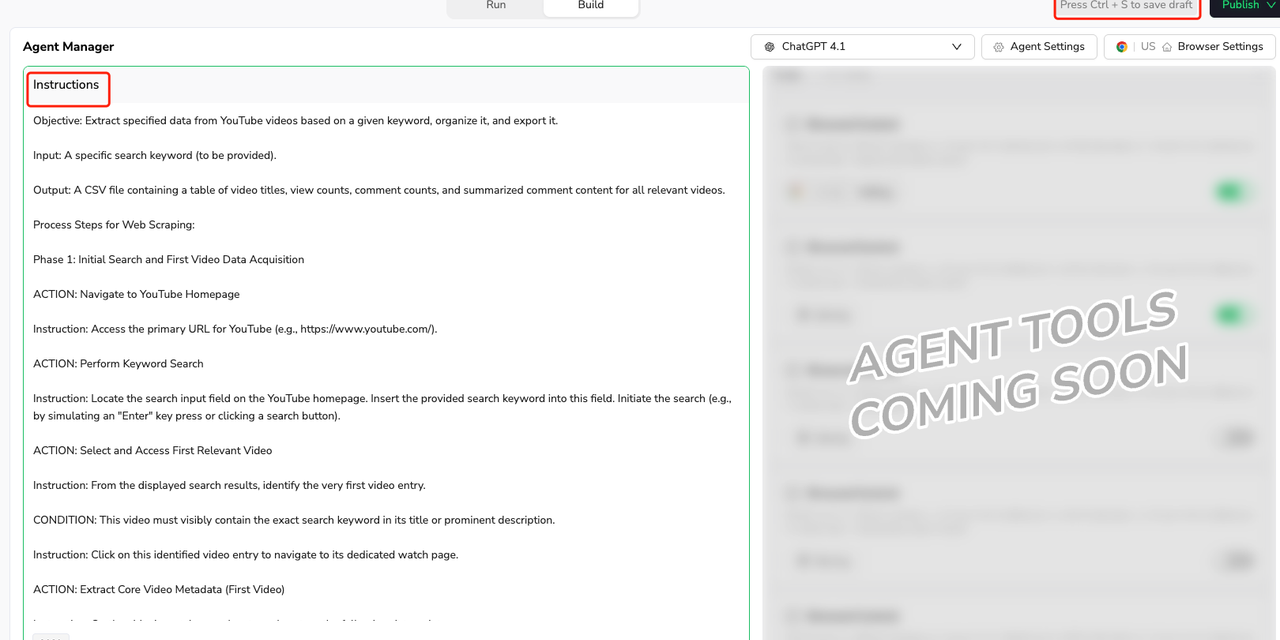

Step 3: Give Precise Directions

The most important part of preparing your agent is giving it precise, step-by-step instructions. Imagine you are telling a person how to fetch the information on your behalf.

For example, if you want to fetch YouTube reviews of a brand:

- Tell the agent what to search for

- Tell it which parts of the page to collect (e.g., video titles, upload time, comments)

- Tell it what not to collect (e.g., adverts or irrelevant results)

- Tell it the output format (CSV, Excel, JSON)

Don't worry if you think of something later. You can always add more instructions when running the task.

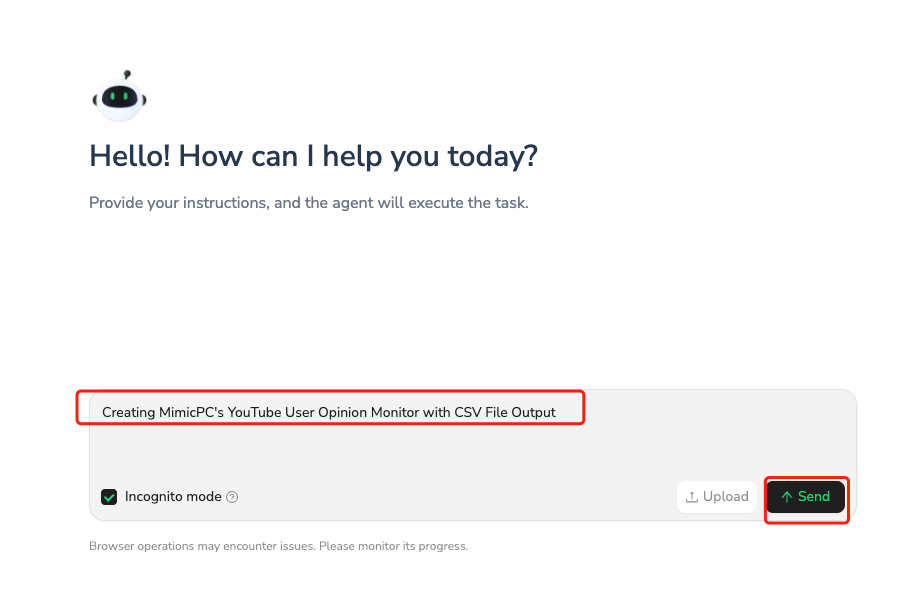

Step 4: Run the Agent and Watch It Work

Once your agent is ready, click “Run”. You’ll enter task-specific instructions in the task interface and click “Send” to begin.

You can:

- Watch your agent work in real time

- Step in at any time to take control if needed

- Enter keywords, log in to accounts, or solve captchas manually

This mix of automation and manual control helps make web scraping more flexible and accurate.

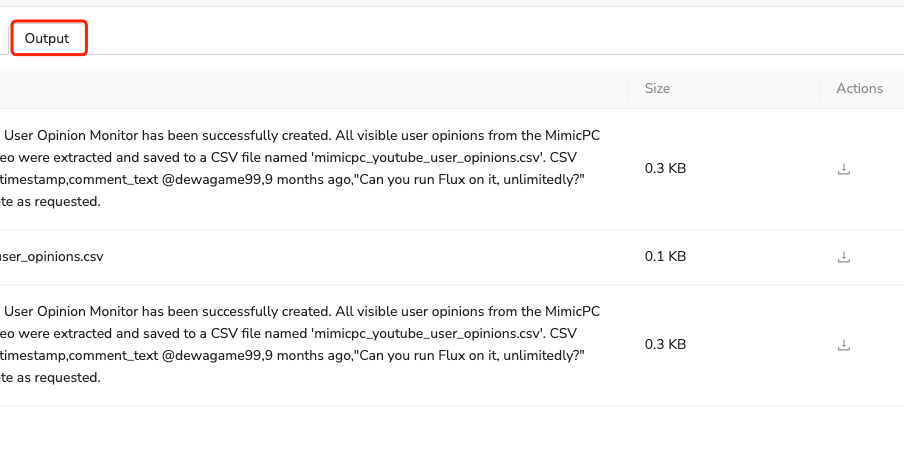

Step 5: Review the Results

Once your agent finishes the task:

- You'll see how many credits were used

- You can save scraped data to your computer

- You'll see the completed task in the Output area

If the outcome isn't good enough, you can change your instructions and repeat the task—no starting over.

Step 6: Manage Credits and Sessions

BrowserAct uses a credit-based model. The longer or more complex the task, the more credits it uses. If you run out of credits mid-task, the task ends. Be sure to:

- Write clear instructions so you don't waste credits and time

- Check your Session History to see previous tasks

- Check what worked and what did not work

BrowserAct aims to make your scraping as efficient as possible. The better your instructions, the better your results.

Frequently Asked Questions

Q1: Is web scraping legal?

Web scraping is generally legal if you follow the rules. Always check a site's terms of use. Do not copy personal or copyrighted material. Stick to public information to stay on the safe side.

Q2: Do I need to know how to code?

Not necessarily. Tools such as BrowserAct let you scrape with little or no coding. But basic programming knowledge helps when things get complex.

Q3: Can scraping harm a website?

If you send too many requests too quickly, you can slow down or even take down a site. Always scrape at a slow pace and respect the site's limits.
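One common place where a site publishes its limits is its robots.txt file. The sketch below uses Python's standard `urllib.robotparser` to check a path and read a crawl delay. The sample robots.txt content, the `my-scraper` agent name, and the `make_robots_checker` helper are assumptions for illustration; in practice you would load the site's real file.

```python
from urllib.robotparser import RobotFileParser


def make_robots_checker(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text and return a parser that can answer can_fetch() queries."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


# Sample rules (made up for this example).
SAMPLE = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

robots = make_robots_checker(SAMPLE)
allowed = robots.can_fetch("my-scraper", "https://example.com/products")
delay = robots.crawl_delay("my-scraper")  # seconds to wait between requests, if declared
```

A scraper could skip any URL for which `can_fetch` returns `False` and wait at least `delay` seconds between requests.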

Q4: What are the dangers of scraping?

The dangers include getting blocked, legal issues, and collecting bad data. Using smart tools and best practices minimizes these dangers.

Conclusion

Web scraping is a great way to obtain data online quickly and efficiently. With the appropriate tools and best practices, you can avoid blocks, deal with dynamic pages, and scale your efforts using AI. Tools like BrowserAct make it simple and fast, even for beginners.

Whether you're comparing prices, tracking job ads, or collecting real estate listings, web scraping will save you time and help your business grow. Just remember to do it ethically and legally.
